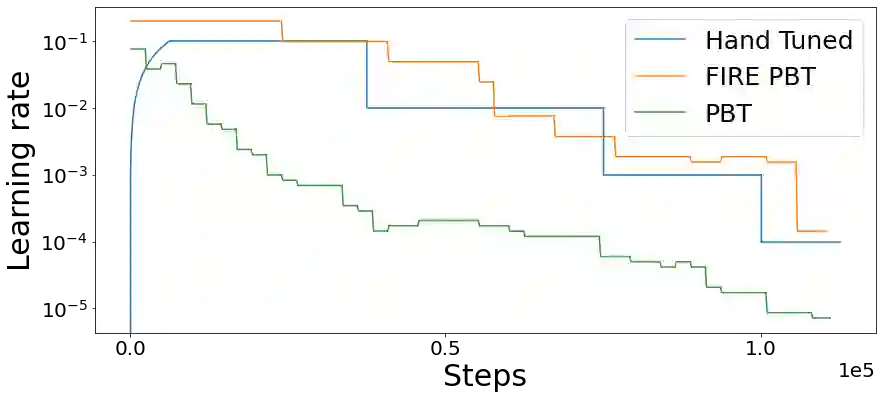

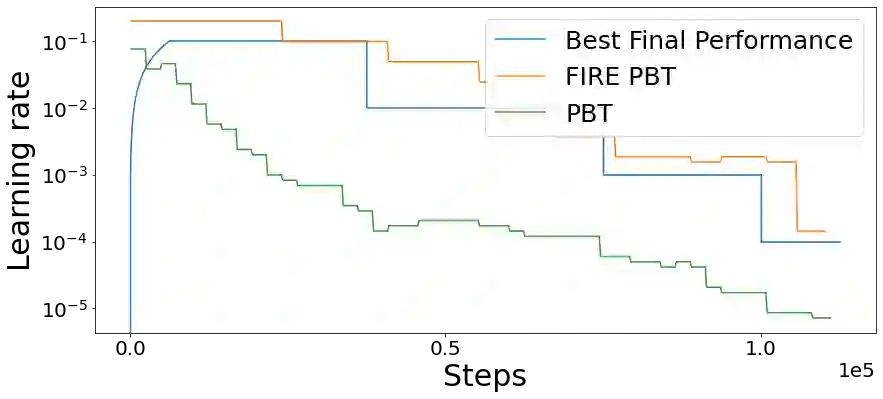

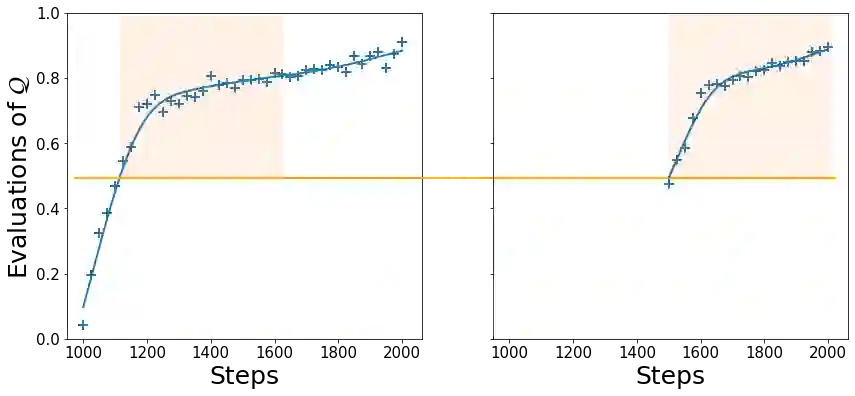

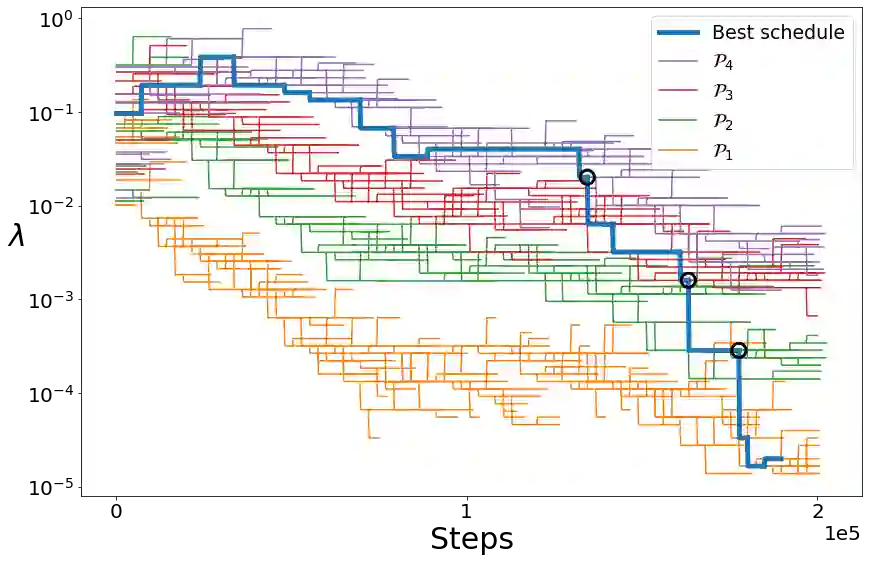

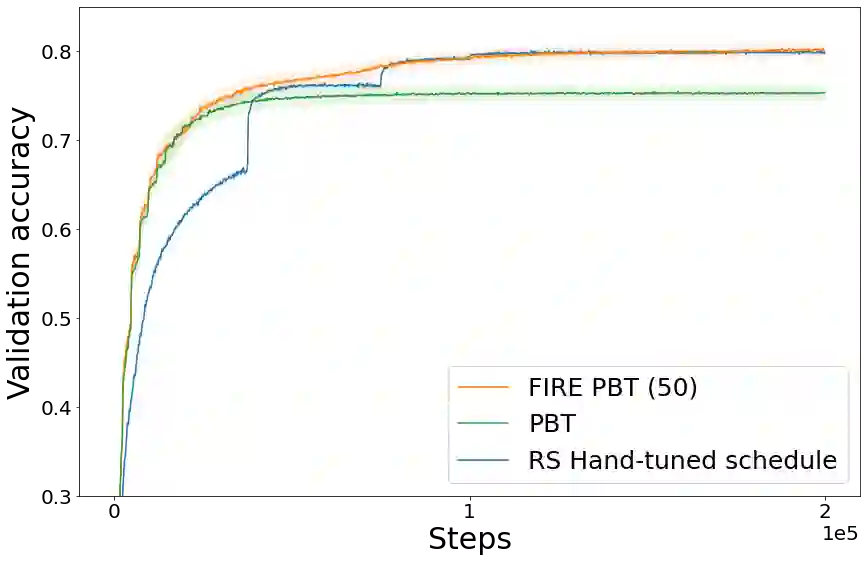

The successful training of neural networks typically involves careful and time-consuming hyperparameter tuning. Population Based Training (PBT) has recently been proposed to automate this process. PBT trains a population of neural networks concurrently, frequently mutating their hyperparameters throughout training. However, the decision mechanisms of PBT are greedy and favour short-term improvements, which can, in some cases, lead to poor long-term performance. This paper presents Faster Improvement Rate PBT (FIRE PBT), which addresses this problem. Our method is guided by the following assumption: given two neural networks with similar performance that are training with similar hyperparameters, the network showing the faster rate of improvement will reach a better final performance. Using this assumption, we derive a novel fitness metric and use it to make some of the population members focus on long-term performance. Our experiments show that FIRE PBT outperforms PBT on the ImageNet benchmark and matches the performance of networks trained with a hand-tuned learning rate schedule. We also apply FIRE PBT to reinforcement learning tasks and show that it leads to faster learning and higher final performance than both PBT and random hyperparameter search.
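The abstract contrasts PBT's greedy ranking (by latest score) with a ranking that rewards a faster rate of improvement. The Python sketch below illustrates that contrast on toy data. Everything in it is an illustrative assumption rather than the paper's method: the `Member` class, the scalar `weights` placeholder, truncation selection with ×0.8/×1.2 hyperparameter perturbation in `pbt_step`, and especially `improvement_rate`, a least-squares slope over recent scores that is a hypothetical stand-in and not the fitness metric FIRE PBT actually derives.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Member:
    """One population member (toy model): a hyperparameter, placeholder weights, eval history."""
    lr: float
    weights: float = 0.0  # hypothetical stand-in for network parameters
    history: list = field(default_factory=list)  # evaluation scores, oldest first


def greedy_fitness(m: Member) -> float:
    # Greedy PBT-style ranking: only the most recent evaluation score matters.
    return m.history[-1]


def improvement_rate(scores: list, window: int = 3) -> float:
    """Least-squares slope of the last `window` scores.

    Hypothetical stand-in for a rate-of-improvement fitness; NOT FIRE PBT's metric.
    """
    ys = scores[-window:]
    n = len(ys)
    if n < 2:
        return 0.0  # a slope needs at least two points
    x_mean = (n - 1) / 2
    y_mean = sum(ys) / n
    num = sum((x - x_mean) * (y - y_mean) for x, y in enumerate(ys))
    den = sum((x - x_mean) ** 2 for x in range(n))
    return num / den


def pbt_step(population: list, fitness, frac: float = 0.25, rng=random) -> None:
    """One exploit/explore round using an assumed truncation-selection scheme.

    The bottom `frac` of members (ranked by `fitness`) copy a random member of the
    top `frac`, then perturb the copied learning rate. Assumes frac <= 0.5 so the
    bottom and top groups do not overlap.
    """
    ranked = sorted(population, key=fitness)
    k = max(1, int(len(population) * frac))
    bottom, top = ranked[:k], ranked[-k:]
    for loser in bottom:
        winner = rng.choice(top)
        loser.weights = winner.weights  # exploit: inherit parameters
        loser.history = list(winner.history)  # and the evaluation record
        loser.lr = winner.lr * rng.choice([0.8, 1.2])  # explore: perturb hyperparameter


# Usage: two members with similar current scores but different trajectories.
a = Member(lr=0.1, history=[0.50, 0.55, 0.58, 0.60])  # plateauing
b = Member(lr=0.05, history=[0.40, 0.48, 0.54, 0.59])  # still improving quickly

print(greedy_fitness(a) > greedy_fitness(b))  # True: greedy ranking prefers a
print(improvement_rate(b.history) > improvement_rate(a.history))  # True: rate ranking prefers b
```

Passing the fitness function into `pbt_step` is one simple way to let some members be ranked by long-term potential while others keep the greedy score, loosely mirroring the abstract's description of part of the population focusing on long-term performance. How FIRE PBT actually organises its population and computes its fitness is not reproduced here.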

翻译:对神经网络的成功培训通常需要仔细和费时的超参数调试。基于人口的培训(PBT)最近被提议使这一过程自动化。PBT同时培训神经网络人口,在培训过程中经常突变超参数。但是,PBT的决策机制贪婪,有利于短期改进,在某些情况下会导致长期性能不佳。本文展示了解决这一问题的更快改进率PBT(FIRE PBT),我们的方法以一个假设为指导:向两个具有类似性能和培训的神经网络提供类似的超参数,显示改进速度的更快的网络将带来更好的最后性能。我们利用这个假设,我们制定了新的健康度量度标准,让一些人口成员关注长期性能。我们的实验显示,FIRE PBT能够超越图像网络基准的PBT,与经过手工调校准学习进度表培训的网络的性能相匹配。我们应用FIRE PBT来强化学习任务,并显示其最终性能比PT和PB软件都快和高。