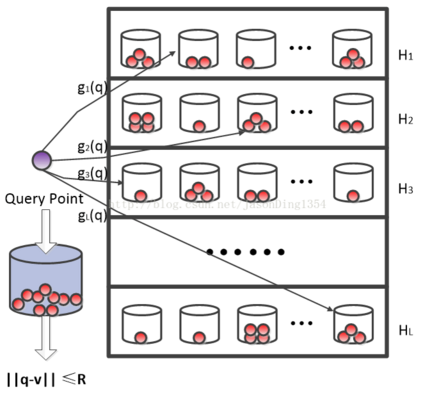

In today's data centers, personalized recommendation systems face challenges such as the need for large memory capacity and high bandwidth, especially when performing embedding operations. Previous approaches have relied on DIMM-based near-memory processing techniques or introduced 3D-stacked DRAM to address memory-bound issues and expand memory bandwidth. However, these solutions fall short when dealing with the expanding size of personalized recommendation systems. Recommendation models have grown to sizes exceeding tens of terabytes, making them challenging to run efficiently on traditional single-node inference servers. Although various algorithmic methods have been proposed to reduce embedding table capacity, they often result in increased memory access or inefficient utilization of memory resources. This paper introduces HEAM, a heterogeneous memory architecture that integrates 3D-stacked DRAM with DIMM to accelerate recommendation systems in which compositional embedding is utilized-a technique aimed at reducing the size of embedding tables. The architecture is organized into a three-tier memory hierarchy consisting of conventional DIMM, 3D-stacked DRAM with a base die-level Processing-In-Memory (PIM), and a bank group-level PIM incorporating lookup tables. This setup is specifically designed to accommodate the unique aspects of compositional embedding, such as temporal locality and embedding table capacity. This design effectively reduces bank access, improves access efficiency, and enhances overall throughput, resulting in a 6.3 times speedup and 58.9% energy savings compared to the baseline.

翻译:暂无翻译