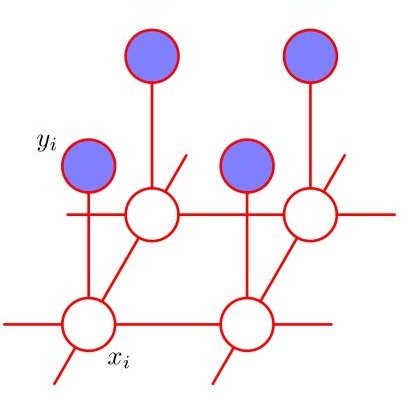

While convolutional neural networks (CNNs) trained by back-propagation have seen unprecedented success at semantic segmentation tasks, they are known to struggle on out-of-distribution data. Markov random fields (MRFs), on the other hand, encode simpler distributions over labels that, although less flexible than UNets, are less prone to over-fitting. In this paper, we propose to fuse both strategies by computing the product of the distributions of a UNet and an MRF. As this product is intractable, we solve for an approximate distribution using an iterative mean-field approach. The resulting MRF-UNet is trained jointly by back-propagation. Unlike other works that use conditional random fields (CRFs), our MRF does not depend on the imaging data, which should allow for less over-fitting. We show on 3D neuroimaging data that this novel network improves generalisation to out-of-distribution samples. Furthermore, it allows the overall number of parameters to be reduced while preserving high accuracy. These results suggest that a classic MRF smoothness prior can reduce over-fitting when integrated into a CNN model in a principled way. Our implementation is available at https://github.com/balbasty/nitorch.
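To make the "product of distributions solved by mean-field" idea concrete, here is a minimal sketch, not the authors' nitorch implementation. It assumes a 2D grid with a 4-neighbourhood (the paper works on 3D volumes), per-pixel log-probabilities from the network, and a hypothetical data-independent label-compatibility matrix `W`. Under those assumptions, each mean-field update sets $q_i(k) \propto \exp\big(\log p_i(k) + \sum_{j \in \mathcal{N}(i)} \sum_l W_{kl}\, q_j(l)\big)$. All pixels are updated in parallel, a GPU-friendly choice that, unlike sequential updates, is not guaranteed to converge monotonically.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of K scores."""
    m = max(scores)
    exps = [math.exp(v - m) for v in scores]
    total = sum(exps)
    return [e / total for e in exps]


def mean_field(unary_logp, W, n_iter=10):
    """Approximate q(z) proportional to p_net(z) * p_mrf(z) with a factorised q.

    unary_logp[y][x][k]: log-probability of label k at pixel (y, x) from the network.
    W[k][l]: log-compatibility between neighbouring labels k and l
             (hypothetical; it does not depend on the image, only on labels).
    Returns q[y][x][k], the approximate per-pixel label marginals.
    """
    height, width = len(unary_logp), len(unary_logp[0])
    K = len(W)
    # Initialise q with the network's own per-pixel probabilities.
    q = [[softmax(unary_logp[y][x]) for x in range(width)] for y in range(height)]
    for _ in range(n_iter):
        new_q = [[None] * width for _ in range(height)]
        for y in range(height):
            for x in range(width):
                # Start from the network's unary log-probabilities.
                scores = list(unary_logp[y][x])
                # Add the expected pairwise term from each in-bounds 4-neighbour.
                for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < height and 0 <= nx < width:
                        qn = q[ny][nx]
                        for k in range(K):
                            scores[k] += sum(W[k][l] * qn[l] for l in range(K))
                new_q[y][x] = softmax(scores)
        # Parallel (synchronous) update of all pixels.
        q = new_q
    return q


if __name__ == "__main__":
    # Toy 3x3 two-class image: every pixel favours class 0 except a noisy centre.
    p_fg = [[0.1, 0.1, 0.1], [0.1, 0.6, 0.1], [0.1, 0.1, 0.1]]
    unary = [[[math.log(1 - p), math.log(p)] for p in row] for row in p_fg]
    potts = [[1.0, 0.0], [0.0, 1.0]]  # hypothetical Potts-like reward for agreeing labels
    q = mean_field(unary, potts, n_iter=10)
    print("centre P(class 1) after smoothing:", round(q[1][1][1], 3))
```

With a zero `W` the update simply returns the network's probabilities. With the Potts-like `W`, the isolated centre label is pulled towards its neighbours, which illustrates the smoothness prior the abstract describes. Because every step is differentiable, the same unrolled iterations could in principle be back-propagated through, which is the sense in which the MRF and UNet can be trained jointly.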
