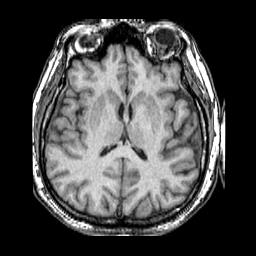

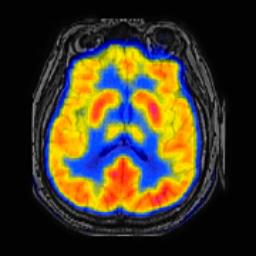

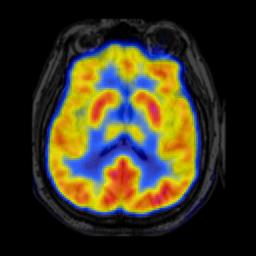

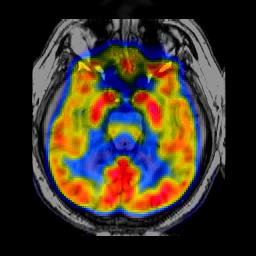

In image fusion, images obtained from different sensors are fused to generate a single image with enhanced information. In recent years, state-of-the-art methods have adopted Convolutional Neural Networks (CNNs) to encode meaningful features for image fusion. Specifically, CNN-based methods perform image fusion by fusing local features. However, they do not consider the long-range dependencies that are present in the image. Transformer-based models are designed to overcome this limitation by modeling long-range dependencies with a self-attention mechanism. This motivates us to propose a novel Image Fusion Transformer (IFT), in which we develop a transformer-based multi-scale fusion strategy that attends to both local and long-range information (or global context). The proposed method follows a two-stage training approach. In the first stage, we train an auto-encoder to extract deep features at multiple scales. In the second stage, the multi-scale features are fused using a Spatio-Transformer (ST) fusion strategy. Each ST fusion block comprises a CNN branch and a transformer branch, which capture local and long-range features, respectively. Extensive experiments on multiple benchmark datasets show that the proposed method performs better than many competitive fusion algorithms. Furthermore, we show the effectiveness of the proposed ST fusion strategy with an ablation analysis. The source code is available at: https://github.com/Vibashan/Image-Fusion-Transformer.
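To make the two-branch idea concrete, the following is a minimal NumPy sketch of a fusion block that combines a local branch with a global self-attention branch. It is an illustration under stated assumptions, not the authors' implementation: the function names (`local_branch`, `global_branch`, `st_fuse`), the fixed 3x3 mean filter standing in for a learned convolution, the single-head attention with identity projections, and the simple averaging used to merge branches and sources are all hypothetical choices made for brevity.

```python
import numpy as np


def local_branch(x):
    """Local context: 3x3 mean filter per channel.

    Assumption: a fixed filter stands in for a learned convolution.
    x has shape (C, H, W).
    """
    _, h, w = x.shape
    padded = np.pad(x, ((0, 0), (1, 1), (1, 1)), mode="edge")
    out = np.zeros_like(x, dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += padded[:, dy:dy + h, dx:dx + w]
    return out / 9.0


def attention_weights(tokens):
    """Row-wise softmax of scaled dot-product scores; tokens is (N, D)."""
    d = tokens.shape[1]
    scores = tokens @ tokens.T / np.sqrt(d)
    scores = scores - scores.max(axis=1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    return weights / weights.sum(axis=1, keepdims=True)


def global_branch(x):
    """Long-range context: single-head self-attention over all positions.

    Assumption: queries, keys, and values are the raw tokens
    (identity projections), so nothing here is learned.
    """
    c, h, w = x.shape
    tokens = x.reshape(c, h * w).T               # (H*W, C)
    mixed = attention_weights(tokens) @ tokens   # every position sees all others
    return mixed.T.reshape(c, h, w)


def st_fuse(feat_a, feat_b):
    """Fuse two (C, H, W) feature maps taken at the same scale."""
    assert feat_a.shape == feat_b.shape
    c = feat_a.shape[0]
    x = np.concatenate([feat_a, feat_b], axis=0).astype(np.float64)
    mixed = 0.5 * (local_branch(x) + global_branch(x))  # merge the two branches
    return 0.5 * (mixed[:c] + mixed[c:])                # collapse back to C channels


# Example: fuse random stand-in features from two sensors at one scale.
rng = np.random.default_rng(0)
fused = st_fuse(rng.standard_normal((4, 8, 8)), rng.standard_normal((4, 8, 8)))
print(fused.shape)
```

In a multi-scale setting such as the one the abstract describes, a block like this would be applied once per encoder scale, with the fused maps passed on to a decoder. Note that the attention matrix grows with the square of the number of spatial positions, so this dense form is only practical for small feature maps.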
