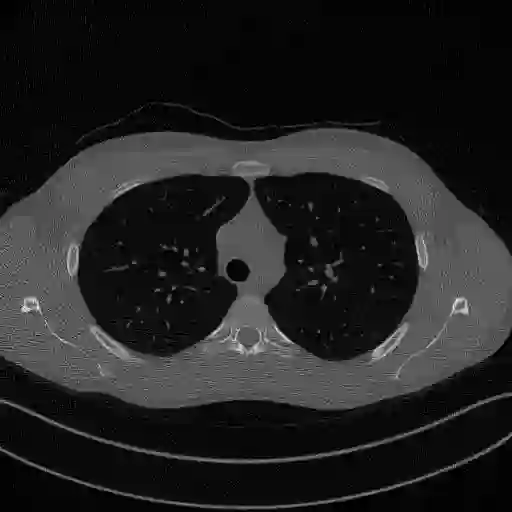

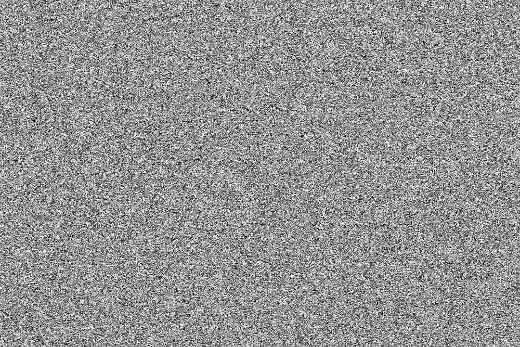

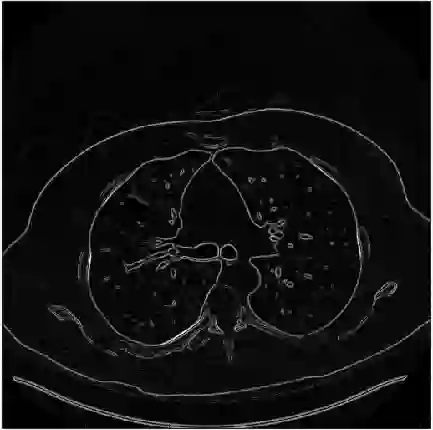

In this work, we present Eformer (Edge enhancement based transformer), a novel architecture that builds an encoder-decoder network from transformer blocks for medical image denoising. The transformer blocks use non-overlapping window-based self-attention, which reduces computational requirements. This work further incorporates learnable Sobel-Feldman operators to enhance edges in the image and proposes an effective way to concatenate the resulting edge features in the intermediate layers of our architecture. The experimental analysis compares deterministic learning and residual learning for the task of medical image denoising. To demonstrate the effectiveness of our approach, we evaluate our model on the AAPM-Mayo Clinic Low-Dose CT Grand Challenge Dataset, where it achieves state-of-the-art performance, i.e., a PSNR of 43.487, an RMSE of 0.0067, and an SSIM of 0.9861. We believe that our work will encourage more research in transformer-based architectures for medical image denoising using residual learning.
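
The abstract credits non-overlapping window-based self-attention with reducing computation. One rough way to see why is to count the query-key pairs that attention has to score: global attention compares every token with every other token, while windowed attention only compares tokens that share a window. The sketch below is illustrative only; `window_partition` and `attention_pairs` are hypothetical names, and the 64 × 64 token grid and window size of 8 are assumed values, not settings taken from the paper.

```python
# Sketch: cost of global vs. non-overlapping window self-attention.
# Grid size and window size used in the example are assumptions.


def window_partition(h, w, m):
    """Split an h x w token grid into non-overlapping m x m windows.

    Returns a list of windows, each a list of (row, col) token positions.
    Assumes h and w are multiples of m (no padding is done here).
    """
    if h % m or w % m:
        raise ValueError("h and w must be multiples of the window size m")
    windows = []
    for r0 in range(0, h, m):
        for c0 in range(0, w, m):
            windows.append([(r, c)
                            for r in range(r0, r0 + m)
                            for c in range(c0, c0 + m)])
    return windows


def attention_pairs(h, w, m=None):
    """Number of query-key pairs scored by self-attention.

    Global attention (m is None) scores (h*w)**2 pairs; windowed attention
    only scores pairs of tokens inside the same window.
    """
    if m is None:
        return (h * w) ** 2
    return sum(len(win) ** 2 for win in window_partition(h, w, m))


print(attention_pairs(64, 64))     # global attention
print(attention_pairs(64, 64, 8))  # 8 x 8 non-overlapping windows
```

For the assumed 64 × 64 grid, global attention scores 16,777,216 pairs while 8 × 8 windows score 262,144, a 64-fold reduction. In general the ratio is (h·w)/(m·m), so cost grows linearly rather than quadratically with image area once the window size is fixed.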
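
The abstract adds learnable Sobel-Feldman operators to enhance edges. To show what such an operator computes, the sketch below applies the classic fixed 3 × 3 Sobel-Feldman kernels in plain Python and returns the gradient magnitude. Treating the learnable version as a trainable 3 × 3 convolution that starts from these kernel values is an assumption made here for illustration; the function names are hypothetical, and the way Eformer concatenates the edge features into its intermediate layers is not reproduced.

```python
# Sketch: Sobel-Feldman edge magnitude on a small grayscale image.
# The kernels are the classic fixed values; in a learnable variant they
# would be trainable weights (assumption, not the paper's exact layer).
from math import sqrt

# Horizontal-gradient (x) and vertical-gradient (y) kernels.
SOBEL_X = [[-1, 0, 1],
           [-2, 0, 2],
           [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1],
           [0, 0, 0],
           [1, 2, 1]]


def conv2d_same(image, kernel):
    """3x3 cross-correlation with zero padding; output has the input's shape."""
    h, w = len(image), len(image[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    y, x = i + di, j + dj
                    if 0 <= y < h and 0 <= x < w:  # zero padding outside
                        acc += kernel[di + 1][dj + 1] * image[y][x]
            out[i][j] = acc
    return out


def sobel_edges(image):
    """Per-pixel gradient magnitude sqrt(Gx**2 + Gy**2)."""
    gx = conv2d_same(image, SOBEL_X)
    gy = conv2d_same(image, SOBEL_Y)
    return [[sqrt(a * a + b * b) for a, b in zip(rx, ry)]
            for rx, ry in zip(gx, gy)]


# A 5x5 image with a vertical step from 0 to 1 between columns 2 and 3.
img = [[0.0, 0.0, 0.0, 1.0, 1.0] for _ in range(5)]
edges = sobel_edges(img)
```

On this step image the magnitude is 0 in the flat region on the left and 4 at the pixels adjacent to the step. Because of zero padding, the right border also responds, which is a typical artifact to keep in mind when these maps are fed to later layers.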
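
The abstract compares deterministic learning with residual learning. The distinction is in what the network is trained to output: under deterministic learning it predicts the clean image directly, while under residual learning it predicts the noise, which is then subtracted from the noisy input. The sketch below shows only that difference in targets and in post-processing; `model` is a hypothetical stand-in for the denoising network, and the helper names are introduced here for illustration.

```python
# Sketch: deterministic vs. residual learning targets for denoising.
# `model` is a hypothetical callable standing in for the network;
# images are lists of rows of floats.


def subtract(a, b):
    """Element-wise a - b for two same-sized images."""
    return [[u - v for u, v in zip(ra, rb)] for ra, rb in zip(a, b)]


def training_target(noisy, clean, residual):
    """What the network is trained to output.

    Deterministic learning regresses the clean image itself;
    residual learning regresses the noise component, noisy - clean.
    """
    return subtract(noisy, clean) if residual else clean


def denoise(noisy, model, residual):
    """Turn the network output into a denoised image."""
    out = model(noisy)
    # Residual learning: subtract the predicted noise from the input.
    return subtract(noisy, out) if residual else out


clean = [[1.0, 2.0], [3.0, 4.0]]
noise = [[0.5, -0.5], [0.25, 0.0]]
noisy = [[c + n for c, n in zip(rc, rn)] for rc, rn in zip(clean, noise)]

# Hypothetical "perfect" networks for each formulation.
ideal_residual = lambda x: training_target(noisy, clean, residual=True)
ideal_direct = lambda x: training_target(noisy, clean, residual=False)
```

Both ideal networks recover `clean`; they differ only in what they must learn. A common motivation for the residual form is that the noise target is small and close to zero-mean, which is often argued to make optimization easier.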
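
The abstract reports PSNR, RMSE, and SSIM. The sketch below shows how these metrics are defined for two same-sized grayscale images. The `data_range` default of 1.0 assumes intensities normalized to [0, 1], which the abstract does not state, and `global_ssim` is a deliberate simplification: standard SSIM averages the same statistic over local, usually Gaussian-weighted, windows, so its values will differ from a library implementation.

```python
# Sketch of PSNR, RMSE, and a simplified (global) SSIM.
# data_range = max minus min possible intensity; 1.0 is an assumption.
from math import log10, sqrt


def _flatten(img):
    return [v for row in img for v in row]


def rmse(x, y):
    """Root-mean-square error between two images."""
    a, b = _flatten(x), _flatten(y)
    return sqrt(sum((u - v) ** 2 for u, v in zip(a, b)) / len(a))


def psnr(x, y, data_range=1.0):
    """Peak signal-to-noise ratio in dB: 20 * log10(data_range / RMSE)."""
    e = rmse(x, y)
    return float("inf") if e == 0 else 20 * log10(data_range / e)


def global_ssim(x, y, data_range=1.0, k1=0.01, k2=0.03):
    """SSIM evaluated once over the whole image (not windowed)."""
    a, b = _flatten(x), _flatten(y)
    n = len(a)
    mu_a, mu_b = sum(a) / n, sum(b) / n
    var_a = sum((u - mu_a) ** 2 for u in a) / n
    var_b = sum((v - mu_b) ** 2 for v in b) / n
    cov = sum((u - mu_a) * (v - mu_b) for u, v in zip(a, b)) / n
    c1, c2 = (k1 * data_range) ** 2, (k2 * data_range) ** 2
    return ((2 * mu_a * mu_b + c1) * (2 * cov + c2)) / (
        (mu_a ** 2 + mu_b ** 2 + c1) * (var_a + var_b + c2))
```

Under the same [0, 1] assumption, an RMSE of 0.0067 corresponds to 20·log10(1/0.0067) ≈ 43.48 dB, in line with the reported PSNR of 43.487. Note that a PSNR averaged per image over a test set need not equal the PSNR computed from the average RMSE.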

翻译:在这项工作中,我们展示了基于Eex - 边缘增强的变压器,这是一个利用变压器块建立编码器- 解码器网络以进行医学图像脱网的新结构。在减少计算要求的变压器块中,使用了不重叠窗口自留功能。这项工作还吸收了可学习的Sobel-Feldman操作员来提升图像的边缘,并提出了将它们融入我们结构中间层的有效方法。实验分析是通过比较确定学和剩余学习来进行医学图像脱网化任务。为了维护我们的方法的有效性,我们模型在AAPM-Mayo诊所低剂量CT大挑战数据集上进行了评估,并实现了最新性能,即43.487 PSNR、0.0067 RMSE和0.9861 SSIM。 我们相信,我们的工作将鼓励对基于变压器的模型进行更多的研究,以便利用剩余学习进行医学图像解析。