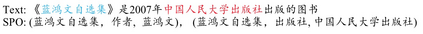

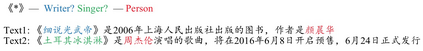

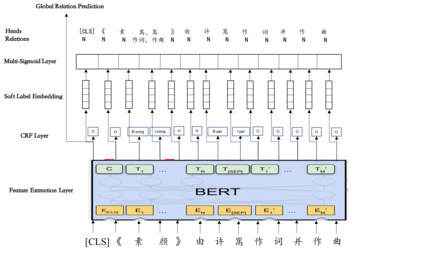

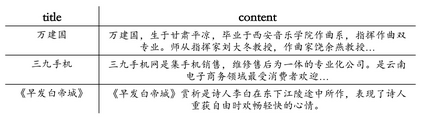

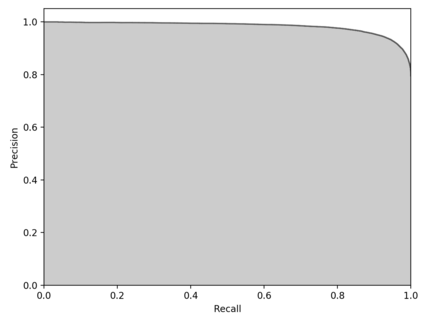

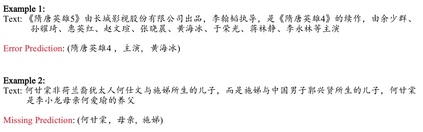

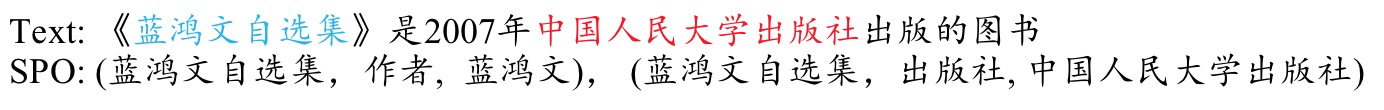

In this paper, we report our method for the Information Extraction task in 2019 Language and Intelligence Challenge. We incorporate BERT into the multi-head selection framework for joint entity-relation extraction. This model extends existing approaches from three perspectives. First, BERT is adopted as a feature extraction layer at the bottom of the multi-head selection framework. We further optimize BERT by introducing a semantic-enhanced task during BERT pre-training. Second, we introduce a large-scale Baidu Baike corpus for entity recognition pre-training, which is of weekly supervised learning since there is no actual named entity label. Third, soft label embedding is proposed to effectively transmit information between entity recognition and relation extraction. Combining these three contributions, we enhance the information extracting ability of the multi-head selection model and achieve F1-score 0.876 on testset-1 with a single model. By ensembling four variants of our model, we finally achieve F1 score 0.892 (1st place) on testset-1 and F1 score 0.8924 (2nd place) on testset-2.

翻译:在本文中,我们在2019年的语言和情报挑战中报告了我们的信息提取任务方法。我们将BERT纳入联合实体关系提取的多头选择框架。这一模式从三个角度扩展了现有办法。首先,BERT被作为多头选择框架底部的地貌提取层。我们进一步优化了BERT,在BERT的预培训期间引入了语义强化任务。第二,我们为实体认证前培训引入了大规模Baidu Baike保护伞,这是每周监督学习,因为没有实际名称的实体标签。第三,软标签嵌入提议在实体识别和关系提取之间有效传递信息。结合这三种贡献,我们加强了多头选择模式的信息提取能力,并用单一模型实现了关于测试1的F1-0.876核心0.876。通过将我们模型的四个变量组合在一起,我们最终在测试1和F1评分0.892(第1位)和F1评分(第2位)在测试2中取得了0.8924分。