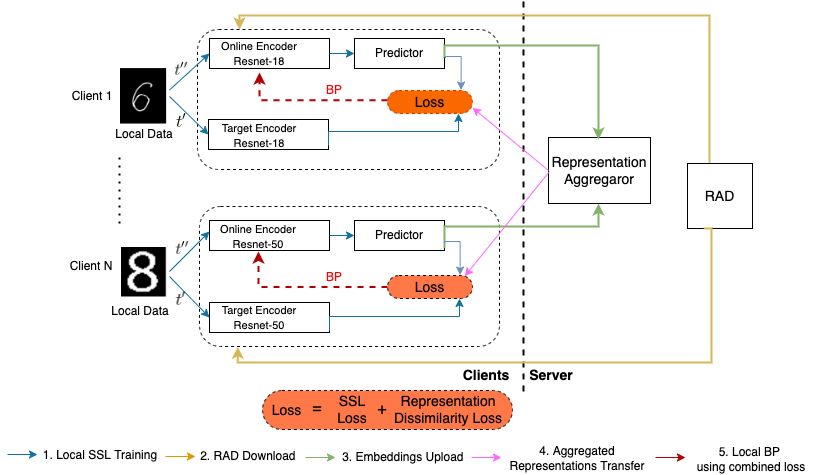

Federated Learning has become an important learning paradigm due to its privacy and computational benefits. As the field advances, two key challenges remain: (1) system heterogeneity, i.e., variability in the compute and/or data resources available on each client, and (2) the lack of labeled data in certain federated settings. Several recent developments have tried to overcome these challenges independently. In this work, we propose a unified and systematic framework, *Heterogeneous Self-supervised Federated Learning* (Hetero-SSFL), for enabling self-supervised learning with federation on heterogeneous clients. The proposed framework allows collaborative representation learning across all clients without imposing architectural constraints or requiring the presence of labeled data. The key idea in Hetero-SSFL is to let each client train its own unique self-supervised model and to enable joint learning across clients by aligning their lower-dimensional representations on a common dataset. The entire training procedure can be viewed as self- and peer-supervised, since neither the local training nor the alignment procedure requires any labeled data. As in conventional self-supervised learning, the obtained client models are task-independent and can be used for varied end tasks. We provide a convergence guarantee for the proposed framework for non-convex objectives in heterogeneous settings, and we empirically demonstrate that the proposed approach outperforms state-of-the-art methods by a significant margin.
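To make the alignment idea concrete, the following is a minimal sketch of how representations from clients with different architectures might be compared on a common batch of unlabeled data. The abstract does not state which alignment measure is used, so this sketch assumes linear centered kernel alignment (CKA), chosen here only because it can compare representations of different dimensionality. The names `linear_cka` and `alignment_penalty`, the toy encoders, and all shapes are hypothetical.

```python
import numpy as np


def centered_gram(z):
    """Linear Gram matrix (n x n) of representations z (n x d), features centered."""
    z = z - z.mean(axis=0, keepdims=True)
    return z @ z.T


def linear_cka(z_a, z_b):
    """Linear CKA between two representation matrices computed on the SAME n samples.

    z_a has shape (n, d_a) and z_b has shape (n, d_b); d_a and d_b may differ,
    so clients are free to use different architectures.
    """
    k_a, k_b = centered_gram(z_a), centered_gram(z_b)
    # tr(K_a K_b) equals the elementwise sum because both matrices are symmetric.
    # np.linalg.norm on a 2-D array defaults to the Frobenius norm.
    return float(np.sum(k_a * k_b) / (np.linalg.norm(k_a) * np.linalg.norm(k_b)))


def alignment_penalty(z_local, peer_reps):
    """Assumed penalty: mean of (1 - CKA) between local and peer representations."""
    return float(np.mean([1.0 - linear_cka(z_local, z) for z in peer_reps]))


# Toy illustration: two "clients" with different output dimensions
# encode the same unlabeled common batch.
rng = np.random.default_rng(0)
common_batch = rng.normal(size=(32, 10))   # hypothetical shared unlabeled data
w_a = rng.normal(size=(10, 8))             # client A: linear encoder, 8-dim output
w_b = rng.normal(size=(10, 4))             # client B: nonlinear encoder, 4-dim output
z_a = common_batch @ w_a
z_b = np.tanh(common_batch @ w_b)

print(alignment_penalty(z_a, [z_b]))
```

In an actual training loop, a penalty of this kind would presumably be added, with some weight, to each client's local self-supervised loss and differentiated with an autodiff framework; the NumPy version above only illustrates the computation and needs no labels at any step.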
