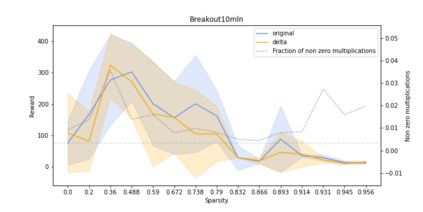

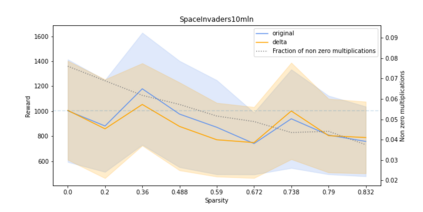

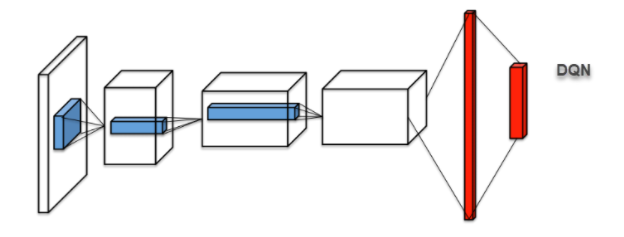

This article proposes a sparse-computation method for optimizing neural networks for reinforcement learning (RL) tasks. The method combines two ideas: neural network pruning and exploiting correlations in the input data, so that a neuron's state is updated only when its change exceeds a certain threshold. This significantly reduces the number of multiplications needed to run the network. Across the RL tasks we tested, the number of multiplications fell by a factor of 20 to 150 with no substantial loss in performance; in some cases performance even improved.
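The thresholded-update idea can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the class name `DeltaLinear`, the per-input threshold rule, and the way multiplications are counted are all assumptions made for illustration. Pruned weights are represented as zeros, and a multiplication is counted only for a nonzero weight whose input changed by more than the threshold since it was last propagated.

```python
import numpy as np


class DeltaLinear:
    """Hypothetical linear layer that propagates only significant input changes."""

    def __init__(self, weights, threshold):
        self.w = np.asarray(weights, dtype=float)  # shape (n_out, n_in); zeros = pruned
        self.threshold = threshold
        self.x_ref = np.zeros(self.w.shape[1])     # input values last propagated
        self.y = np.zeros(self.w.shape[0])         # cached pre-activations
        self.mults = 0                             # multiplications performed so far

    def forward(self, x):
        x = np.asarray(x, dtype=float)
        delta = x - self.x_ref
        # Only inputs whose change exceeds the threshold trigger an update.
        idx = np.flatnonzero(np.abs(delta) > self.threshold)
        if idx.size:
            w_cols = self.w[:, idx]
            self.y += w_cols @ delta[idx]
            # Count only multiplications by unpruned (nonzero) weights.
            self.mults += int(np.count_nonzero(w_cols))
            self.x_ref[idx] = x[idx]
        return self.y.copy()
```

Calling `forward` on a sequence of correlated observations, as in consecutive RL environment steps, leaves most inputs below the threshold, so `mults` grows much more slowly than the dense count of `n_out * n_in` per step. The cost is a bounded approximation error, since sub-threshold changes are not propagated until they accumulate past the threshold.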
