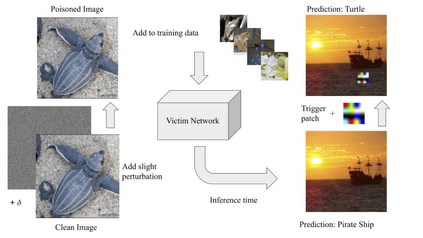

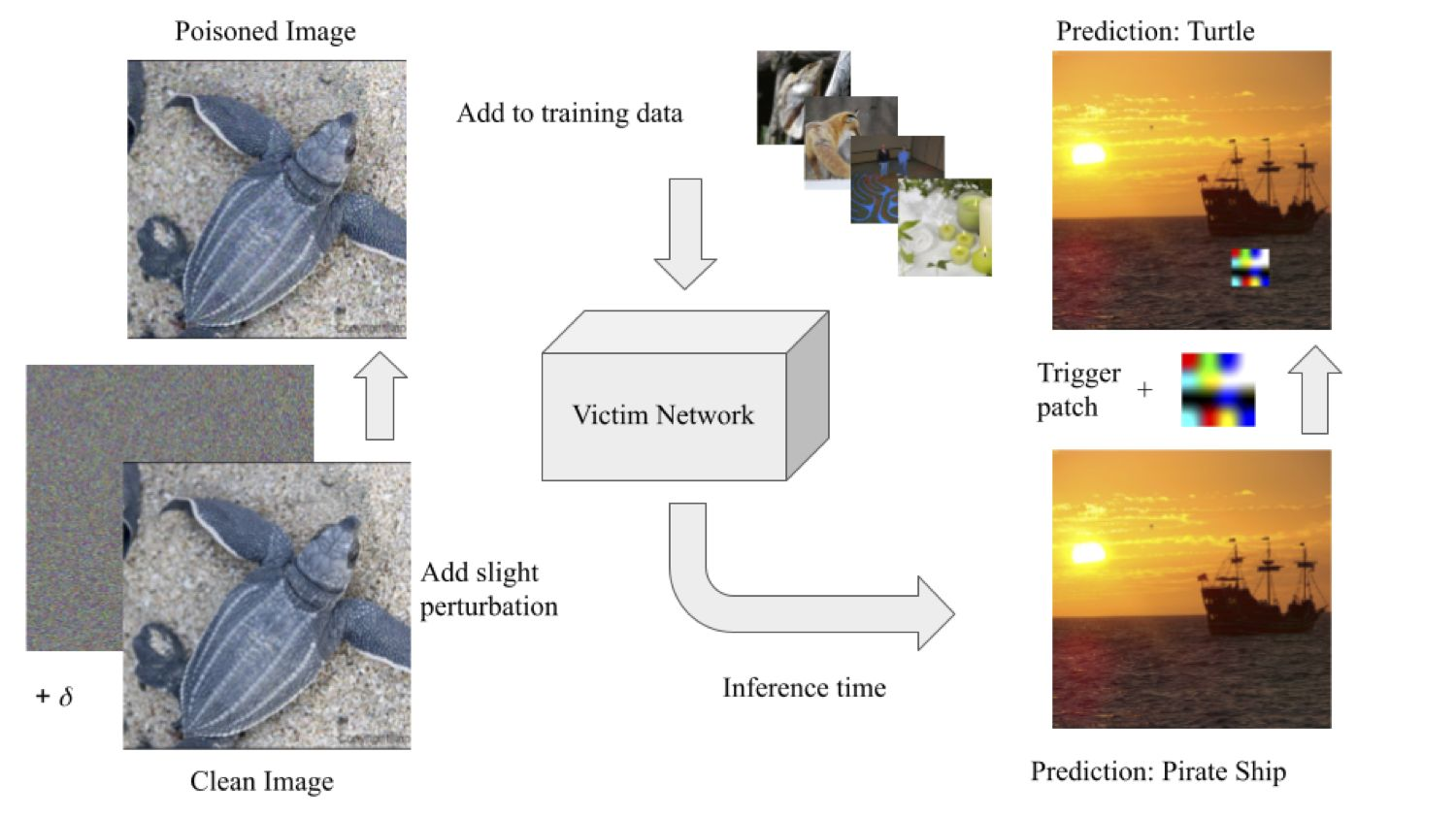

As the curation of data for machine learning becomes increasingly automated, dataset tampering is a mounting threat. Backdoor attackers tamper with training data to embed a vulnerability in models trained on that data. At inference time, this vulnerability is activated by placing a "trigger" in the model's input. Typical backdoor attacks insert the trigger directly into the training data, which can make the attack visible upon inspection. In contrast, the Hidden Trigger Backdoor Attack poisons the data without placing a trigger in the training data at all. However, this hidden trigger attack is ineffective against neural networks trained from scratch. We develop a new hidden trigger attack, Sleeper Agent, which employs gradient matching, data selection, and target model retraining during the crafting process. Sleeper Agent is the first hidden trigger backdoor attack to be effective against neural networks trained from scratch. We demonstrate its effectiveness on ImageNet and in black-box settings. Our implementation code can be found at https://github.com/hsouri/Sleeper-Agent.
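
The gradient-matching idea can be illustrated with a toy, dependency-free sketch. This is not the authors' implementation (the linked repository has that). Every specific here is an illustrative assumption: a fixed linear surrogate model with logistic loss, poisons drawn from the target class with their correct labels, an additive trigger on one feature, finite-difference gradients, and signed-gradient steps inside an L-infinity budget `eps`. The sketch crafts small perturbations so that the training gradient of the (trigger-free) poisons points in the same direction as the gradient of the attacker's objective, namely triggered source inputs classified as the target class. It omits the data-selection and model-retraining steps the abstract also mentions.

```python
import math

# Illustrative assumptions: fixed linear surrogate, logistic loss, labels in {-1, +1}.
# Class -1 plays the "source" class, class +1 the "target" class,
# and feature index 2 is where the hypothetical additive trigger lives.

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def logistic_grad(w, x, y):
    """Gradient of log(1 + exp(-y * w.x)) with respect to the weights w."""
    coeff = -y * sigmoid(-y * dot(w, x))
    return [coeff * xi for xi in x]

def mean_grad(w, xs, ys):
    total = [0.0] * len(w)
    for x, y in zip(xs, ys):
        total = [t + g for t, g in zip(total, logistic_grad(w, x, y))]
    return [t / len(xs) for t in total]

def alignment_loss(w, adv_xs, adv_ys, poison_xs, poison_ys):
    """1 - cosine similarity between the attacker's gradient and the poisons' gradient."""
    g_adv = mean_grad(w, adv_xs, adv_ys)
    g_poi = mean_grad(w, poison_xs, poison_ys)
    norm = math.sqrt(dot(g_adv, g_adv)) * math.sqrt(dot(g_poi, g_poi))
    return 1.0 - (dot(g_adv, g_poi) / norm if norm > 0 else 0.0)

def craft_poisons(w, adv_xs, adv_ys, base_xs, base_ys,
                  eps=0.5, step=0.05, iters=50, h=1e-4):
    """Signed-gradient descent on per-poison perturbations, clipped to [-eps, eps].

    Gradients w.r.t. the perturbations are estimated by forward finite differences.
    Returns the lowest-loss perturbations seen and their alignment loss.
    """
    def loss_for(ds):
        poisons = [[b + d for b, d in zip(x, dx)] for x, dx in zip(base_xs, ds)]
        return alignment_loss(w, adv_xs, adv_ys, poisons, base_ys)

    deltas = [[0.0] * len(x) for x in base_xs]
    best, best_loss = [row[:] for row in deltas], loss_for(deltas)
    for _ in range(iters):
        current = loss_for(deltas)
        grads = []
        for row in deltas:
            g_row = []
            for j in range(len(row)):
                row[j] += h
                g_row.append((loss_for(deltas) - current) / h)
                row[j] -= h
            grads.append(g_row)
        for row, g_row in zip(deltas, grads):
            for j, g in enumerate(g_row):
                direction = (g > 0) - (g < 0)
                row[j] = max(-eps, min(eps, row[j] - step * direction))
        new_loss = loss_for(deltas)
        if new_loss < best_loss:
            best, best_loss = [r[:] for r in deltas], new_loss
    return best, best_loss

# Toy usage: all values below are made up for illustration.
w = [1.0, -0.5, 0.2]                      # fixed surrogate weights
trigger = [0.0, 0.0, 1.0]                 # hypothetical additive trigger
source = [[-1.0, 0.5, 0.0], [-0.8, 0.3, 0.0]]
adv_xs = [[s + t for s, t in zip(x, trigger)] for x in source]
adv_ys = [1, 1]                           # attacker wants triggered source -> target
base_xs = [[1.0, -0.2, 0.0], [0.9, -0.4, 0.0]]
base_ys = [1, 1]                          # correct labels; poisons carry no trigger

before = alignment_loss(w, adv_xs, adv_ys, base_xs, base_ys)
deltas, after = craft_poisons(w, adv_xs, adv_ys, base_xs, base_ys, eps=0.5)
print(f"alignment loss: {before:.3f} -> {after:.3f}")
```

Returning the best perturbation seen, rather than the last one, guarantees the reported loss never exceeds the unperturbed loss. An attack on a deep network would instead differentiate the cosine objective through the network's parameter gradients with automatic differentiation.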
