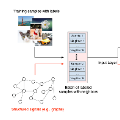

Speech emotion recognition (SER) has been a popular research topic in human-computer interaction (HCI). As edge devices are rapidly springing up, applying SER to edge devices is promising for a huge number of HCI applications. Although deep learning has been investigated to improve the performance of SER by training complex models, the memory space and computational capability of edge devices represents a constraint for embedding deep learning models. We propose a neural structured learning (NSL) framework through building synthesized graphs. An SER model is trained on a source dataset and used to build graphs on a target dataset. A relatively lightweight model is then trained with the speech samples and graphs together as the input. Our experiments demonstrate that training a lightweight SER model on the target dataset with speech samples and graphs can not only produce small SER models, but also enhance the model performance over models with speech samples only and those with classic transfer learning strategies.

翻译:由于边缘装置正在迅速涌现,对边缘装置应用SER是有希望的。尽管通过培训复杂模型对深层学习进行了深入调查,以提高SER的性能,但边缘装置的记忆空间和计算能力是嵌入深层学习模型的制约因素。我们建议通过建立综合图解来建立神经结构学习框架。SER模型在源数据集方面接受培训,并用于在目标数据集上建立图解。然后,对相对轻重的模型进行语音样本和图解相结合的培训,作为投入。我们的实验表明,用语音样本和图解对目标数据集的轻量型SER模型进行培训不仅可以产生小型SER模型,而且还可以提高模型的模型性能,只有语音样本和具有经典传输学习战略的模型。