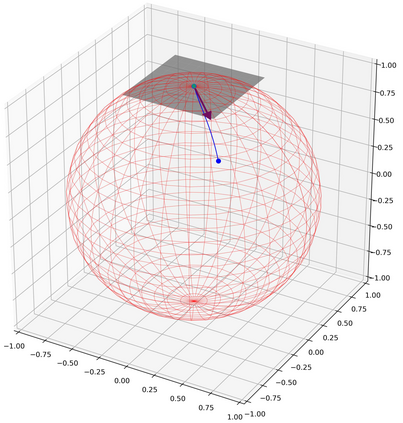

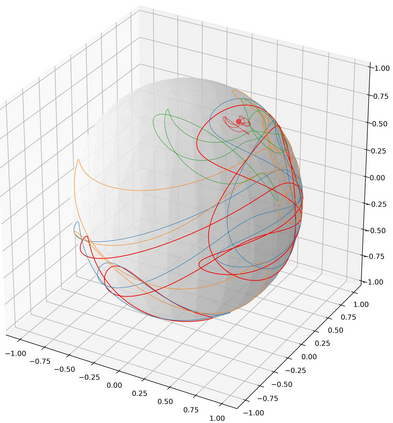

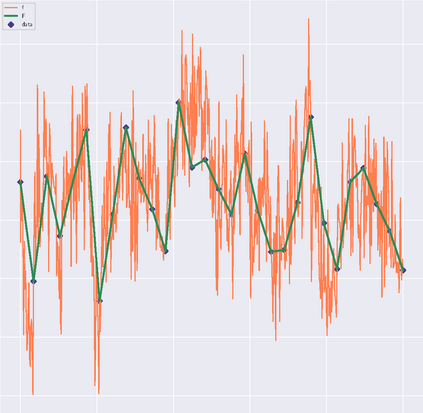

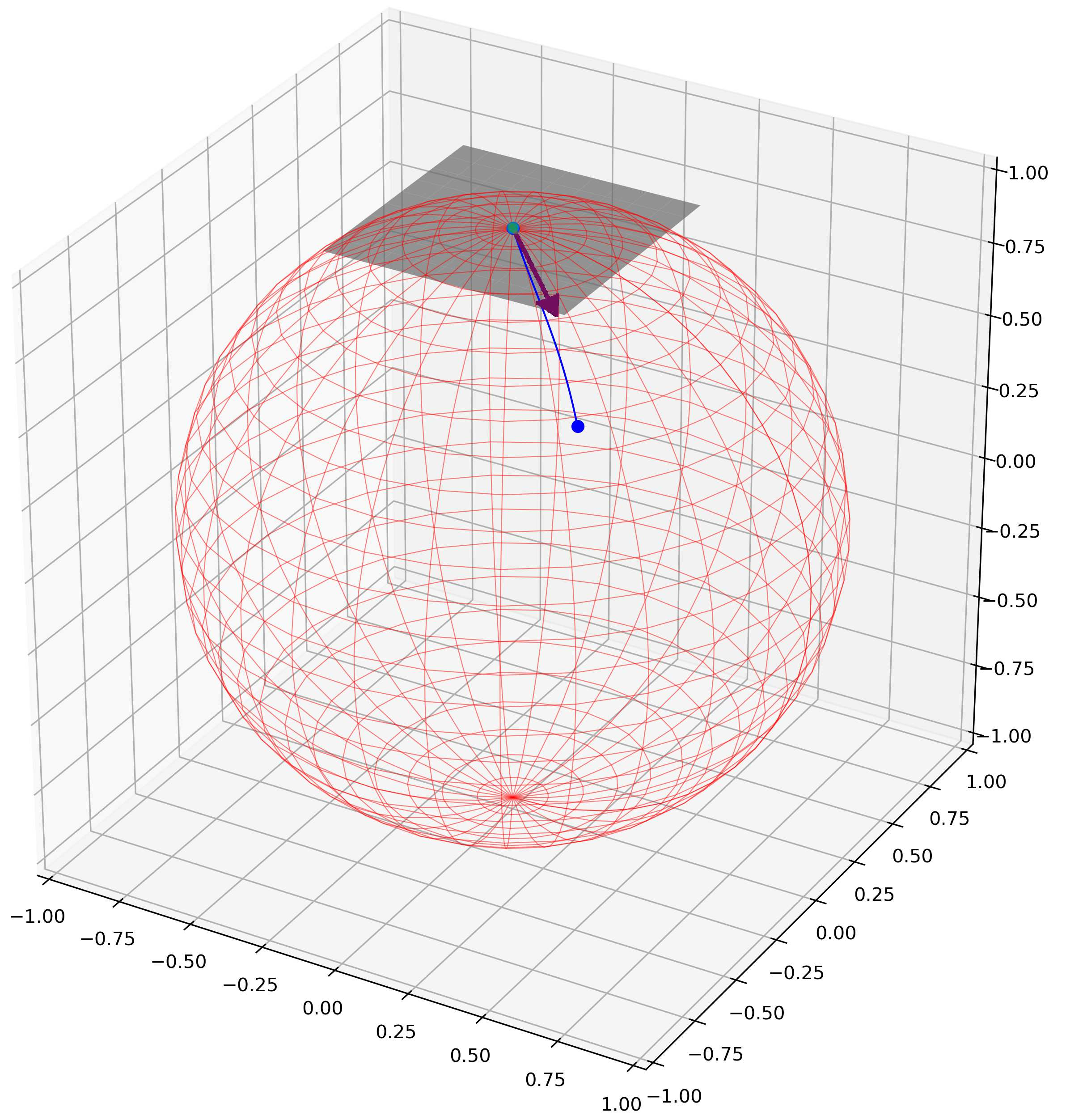

This paper addresses the growing need to process non-Euclidean data by introducing a geometric deep learning (GDL) framework for building universal feedforward-type models compatible with differentiable manifold geometries. We show that our GDL models can approximate any continuous target function uniformly on compact sets of a controlled maximum diameter. We obtain curvature-dependent lower bounds on this maximum diameter and upper bounds on the depth of our approximating GDL models. Conversely, we find that between any two non-degenerate compact manifolds there is always a continuous function that no "locally-defined" GDL model can uniformly approximate. Our last main result identifies data-dependent conditions guaranteeing that the GDL model implementing our approximation breaks "the curse of dimensionality." We find that any "real-world" (i.e., finite) dataset always satisfies our condition and, conversely, that any dataset satisfies our requirement if the target function is smooth. As applications, we confirm the universal approximation capabilities of the following GDL models: the hyperbolic feedforward networks of Ganea et al. (2018), the architecture implementing the deep Kalman filter of Krishnan et al. (2015), and deep softmax classifiers. We build universal extensions/variants of the SPD-matrix regressor of Meyer et al. (2011) and the Procrustean regressor of Fletcher (2003). In the Euclidean setting, our results imply a quantitative version of the approximation theorem of Kidger and Lyons (2020) and a data-dependent version of the uncursed approximation rates of Yarotsky and Zhevnerchuk (2019).
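To make the phrase "feedforward-type models compatible with differentiable manifold geometries" more concrete, the following is a minimal sketch of one plausible reading: a model that maps a manifold-valued input into a tangent space with a logarithm map, applies an ordinary feedforward network there, and maps the result back with an exponential map. The abstract does not spell out the construction, so this composition, the choice of the unit sphere as the manifold, and every name here (`sphere_exp`, `sphere_log`, `tangent_basis`, `gdl_model`) are illustrative assumptions rather than the authors' definitions.

```python
import numpy as np


def sphere_exp(p, v):
    """Riemannian exponential map on the unit sphere at base point p.

    v is assumed to be tangent at p (orthogonal to p).
    """
    norm = np.linalg.norm(v)
    if norm < 1e-12:
        return p.copy()
    return np.cos(norm) * p + np.sin(norm) * v / norm


def sphere_log(p, q):
    """Inverse of sphere_exp at p; only well defined for q != -p."""
    cos_theta = np.clip(np.dot(p, q), -1.0, 1.0)
    theta = np.arccos(cos_theta)
    u = q - cos_theta * p  # component of q orthogonal to p
    u_norm = np.linalg.norm(u)
    if u_norm < 1e-12:
        return np.zeros_like(p)
    return theta * u / u_norm


def tangent_basis(p):
    """Rows form an orthonormal basis of the tangent space at p."""
    _, _, vh = np.linalg.svd(p.reshape(1, -1))
    return vh[1:]  # the first row spans p; the rest span its complement


def gdl_model(x, p_in, p_out, weights, biases):
    """Hypothetical 'locally-defined' model: exp_{p_out} o MLP o log_{p_in}."""
    basis_in = tangent_basis(p_in)
    basis_out = tangent_basis(p_out)
    # Express log_{p_in}(x) in tangent-space coordinates.
    h = basis_in @ sphere_log(p_in, x)
    # Ordinary Euclidean feedforward network with tanh hidden layers.
    for W, b in zip(weights[:-1], biases[:-1]):
        h = np.tanh(W @ h + b)
    h = weights[-1] @ h + biases[-1]
    # Map the Euclidean output back onto the sphere.
    return sphere_exp(p_out, basis_out.T @ h)
```

In this sketch the logarithm map breaks down at the antipode of `p_in`, so the model is only meaningful on a region of limited size around the base point. That is one informal way to picture why a guarantee might hold only on compact sets of a controlled maximum diameter, though the precise statement and its curvature dependence are in the paper, not in this example.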
