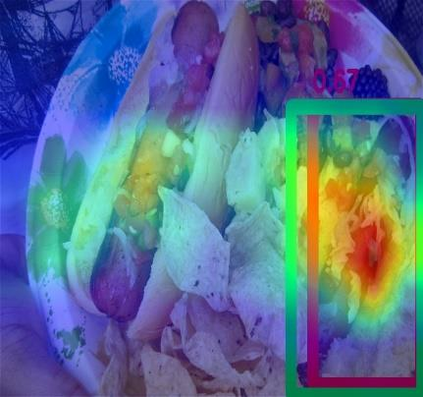

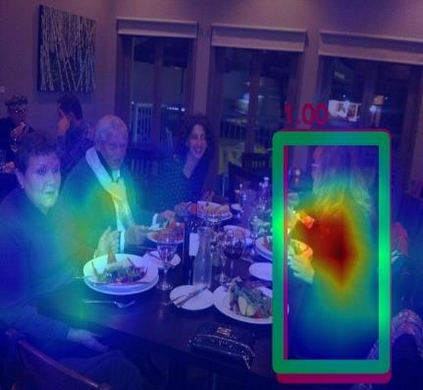

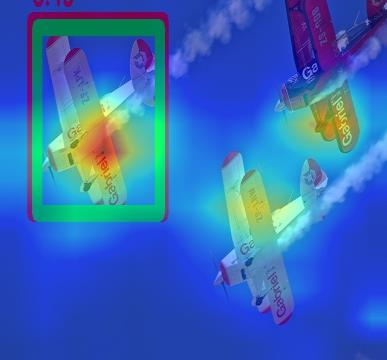

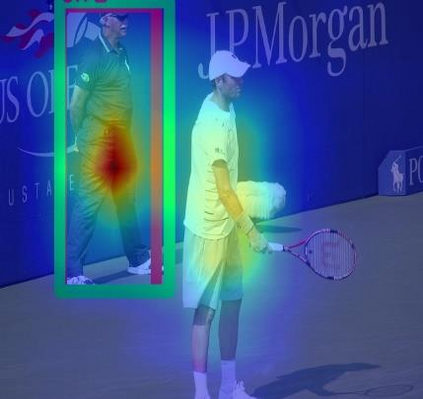

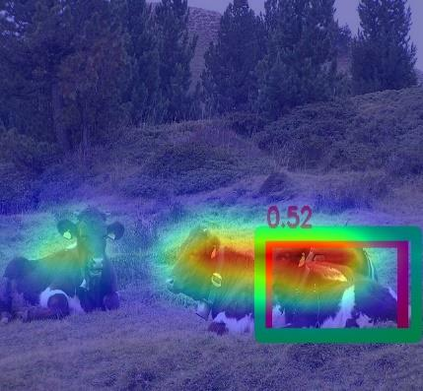

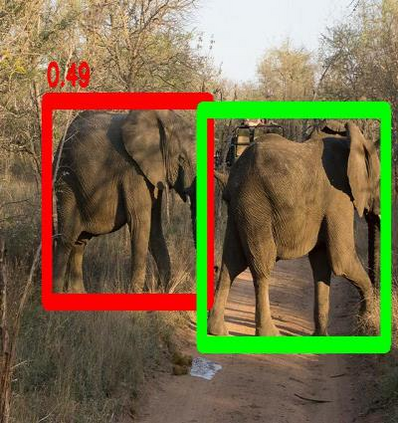

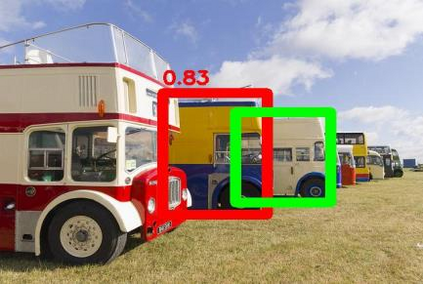

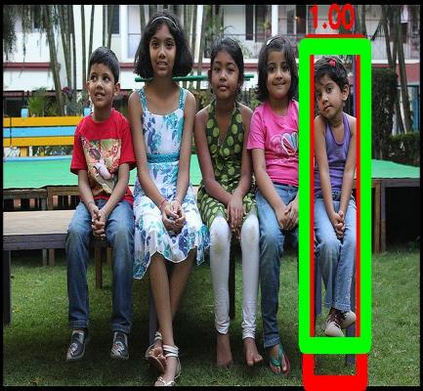

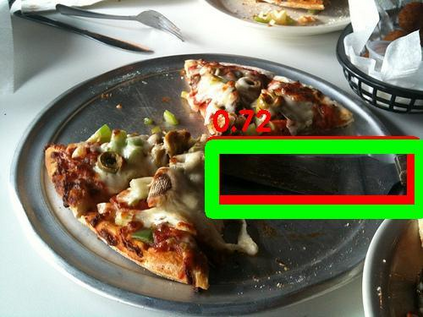

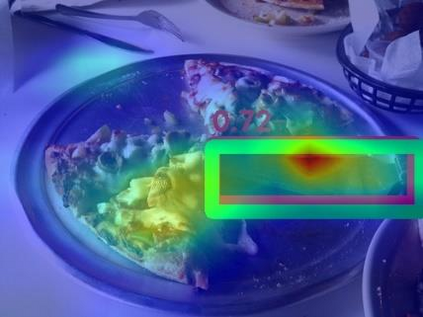

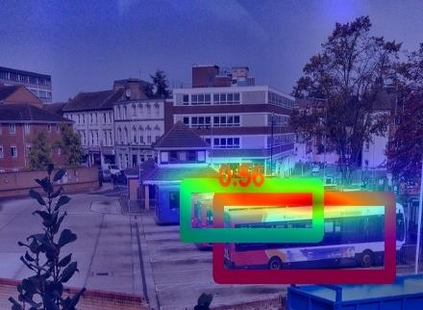

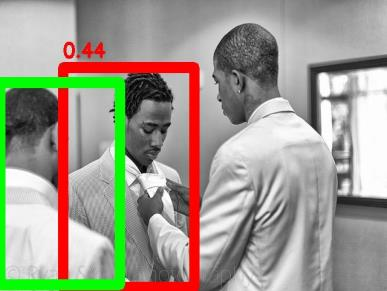

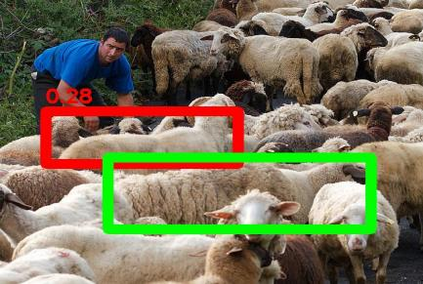

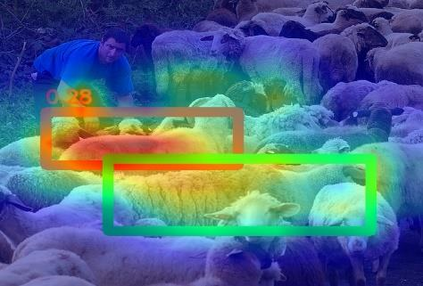

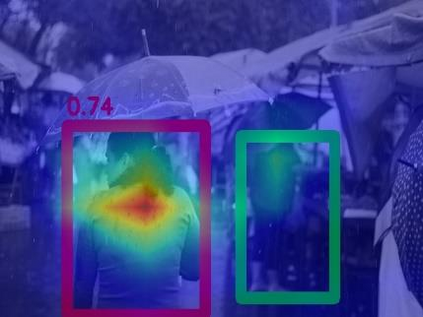

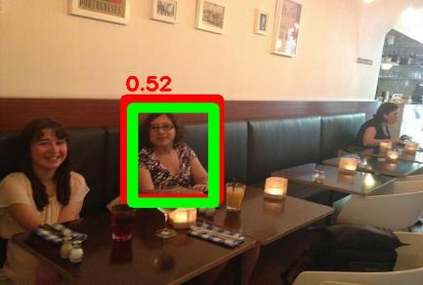

Referring Expression Comprehension (REC) is an emerging research topic in computer vision, which refers to detecting the target region in an image given a text description. Most existing REC methods follow a multi-stage pipeline, which is computationally expensive and greatly limits the application of REC. In this paper, we propose a one-stage model towards real-time REC, termed the Real-time Global Inference Network (RealGIN). RealGIN addresses the diversity and complexity issues in REC with two innovative designs: the Adaptive Feature Selection (AFS) and the Global Attentive ReAsoNing unit (GARAN). AFS adaptively fuses features at different semantic levels to handle the varying content of expressions. GARAN uses the textual feature as a pivot to collect expression-related visual information from all regions, and then selectively diffuses such information back to all regions, which provides sufficient context for modeling the complex linguistic conditions in expressions. On five benchmark datasets, i.e., RefCOCO, RefCOCO+, RefCOCOg, ReferIt and Flickr30k, the proposed RealGIN outperforms most prior works and achieves very competitive performance against the most advanced method, i.e., MAttNet. Most importantly, under the same hardware, RealGIN can boost the processing speed by about 10 times over the existing methods.
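The abstract names the two modules but gives no equations for them. The pure-Python sketch below shows one plausible reading of the described data flow and should not be taken as RealGIN's published formulation. It assumes the following. All semantic levels have already been resampled to the same N regions of dimension d. AFS weights each level by a softmax over text-to-level similarity, using mean-pooled level summaries. GARAN "collects" with text-guided softmax attention over all regions and "diffuses" by adding the collected context back to each region through a per-region sigmoid gate. The names `adaptive_feature_selection` and `global_attentive_reasoning`, the mean pooling, the scaled dot-product scores and the gate are all hypothetical choices made for illustration.

```python
import math
from typing import List, Tuple

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(xs: List[float]) -> List[float]:
    # Subtract the max for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    z = sum(exps)
    return [e / z for e in exps]


def sigmoid(x: float) -> float:
    # Stable in both directions (avoids overflow in math.exp).
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def adaptive_feature_selection(levels: List[List[Vector]], text: Vector) -> List[Vector]:
    """Hypothetical AFS: fuse region features from several semantic levels
    with weights conditioned on the text feature (assumed formulation)."""
    d = len(text)
    # Summarize each level by mean-pooling its region features (assumption).
    summaries = [[sum(r[k] for r in level) / len(level) for k in range(d)] for level in levels]
    # One softmax weight per level, scored by scaled text-summary similarity.
    weights = softmax([dot(text, s) / math.sqrt(d) for s in summaries])
    n = len(levels[0])
    return [[sum(w * level[i][k] for w, level in zip(weights, levels)) for k in range(d)]
            for i in range(n)]


def global_attentive_reasoning(regions: List[Vector], text: Vector) -> Tuple[List[Vector], List[float]]:
    """Hypothetical GARAN: collect expression-related context from all regions
    using the text as a pivot, then selectively diffuse it back (assumed formulation)."""
    d = len(text)
    # Collect: text-guided attention over every region, then a weighted sum.
    attn = softmax([dot(text, v) / math.sqrt(d) for v in regions])
    context = [sum(a * v[k] for a, v in zip(attn, regions)) for k in range(d)]
    # Diffuse: each region absorbs the context scaled by its own gate in (0, 1).
    out = []
    for v in regions:
        gate = sigmoid(dot(context, v) / math.sqrt(d))
        out.append([x + gate * c for x, c in zip(v, context)])
    return out, attn
```

For example, with regions `[[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]` and a text feature `[1.0, 0.0]`, the first region receives the largest collect-step attention weight, and every region is then updated with a gated share of the same global context. In this reading, the text vector plays the "pivot" role: it decides what is gathered, while the gate decides how much of it each region takes back.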
