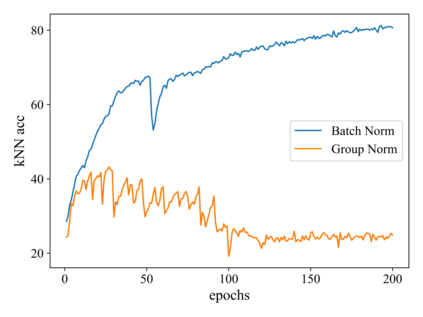

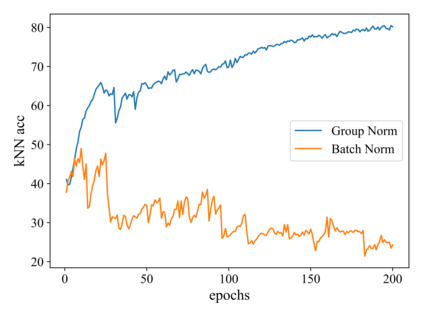

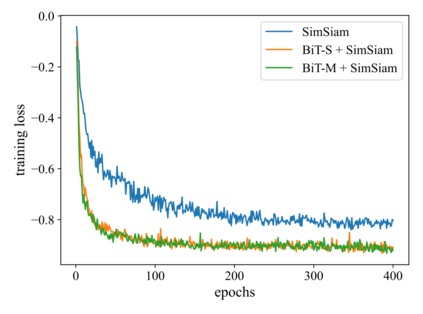

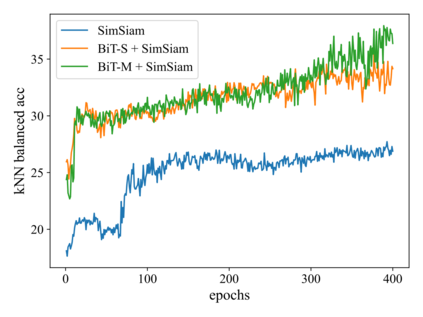

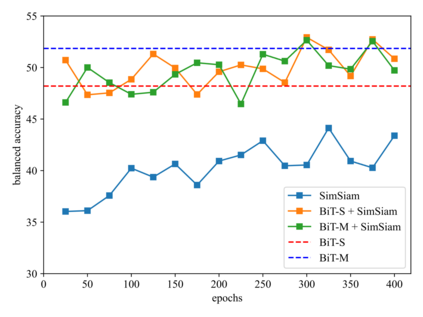

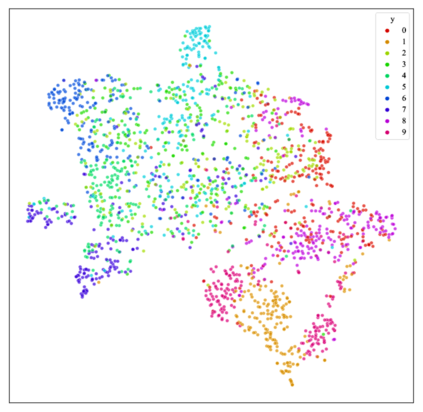

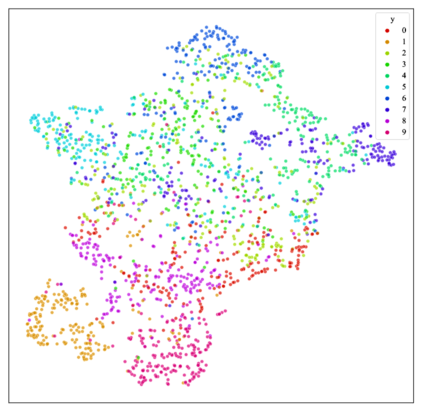

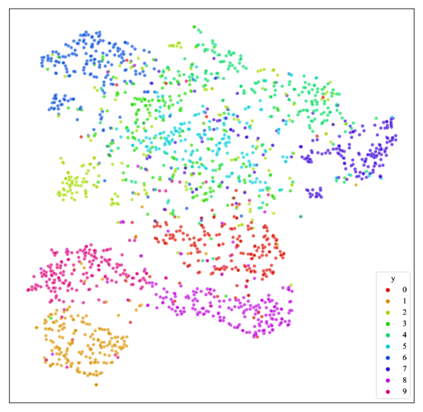

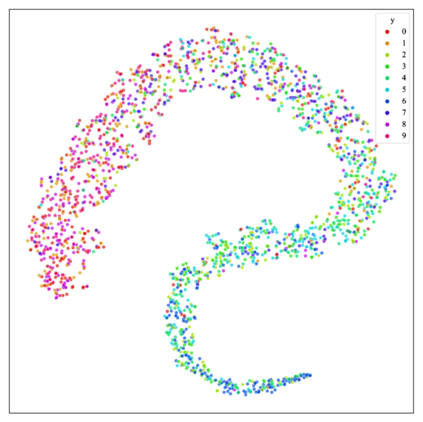

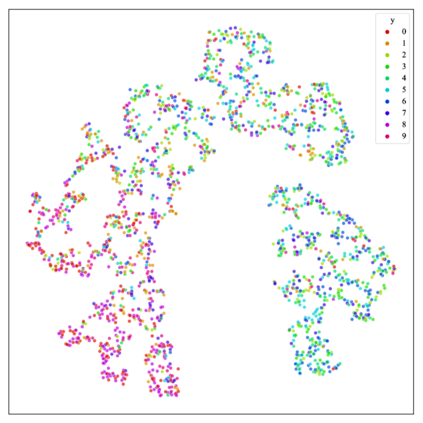

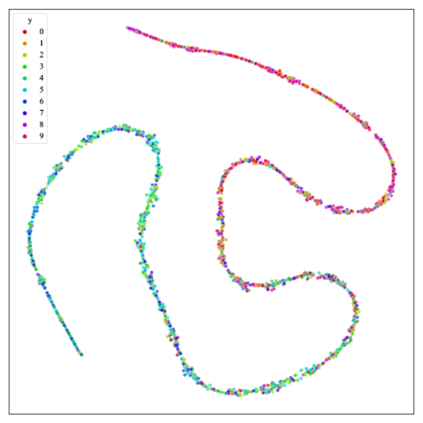

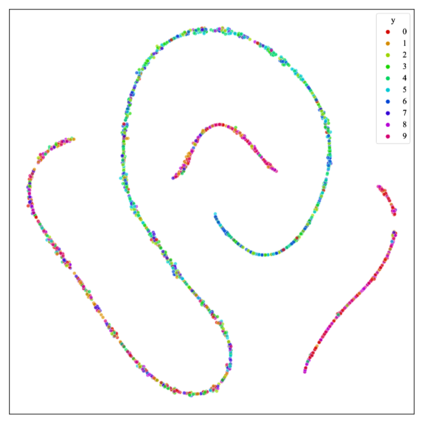

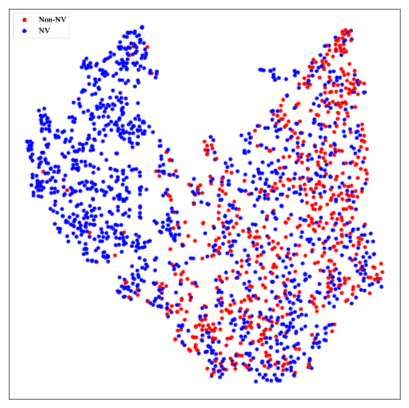

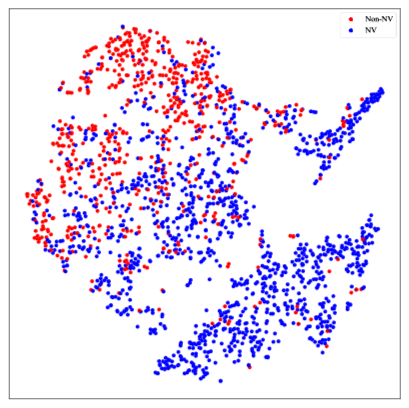

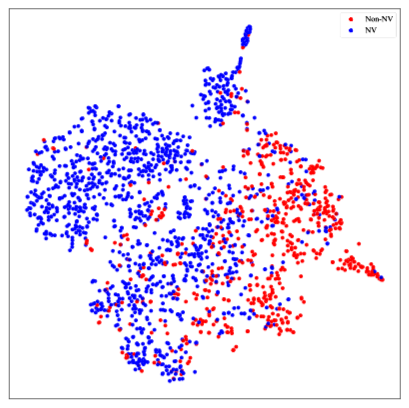

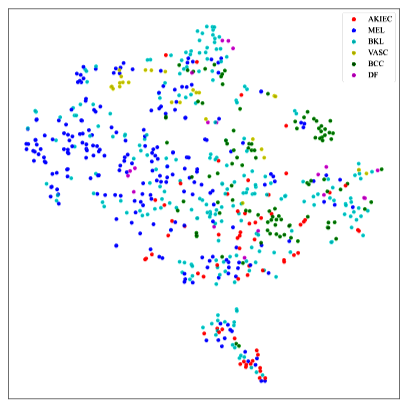

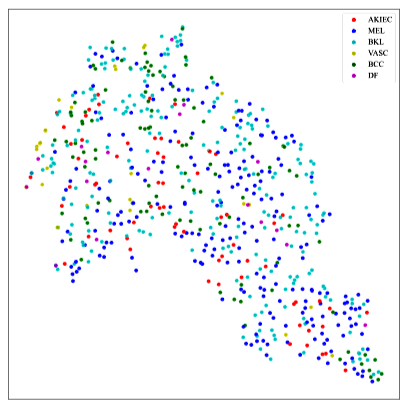

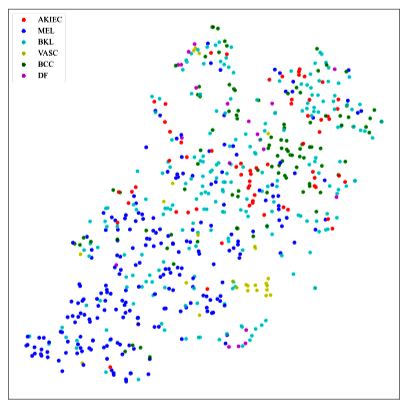

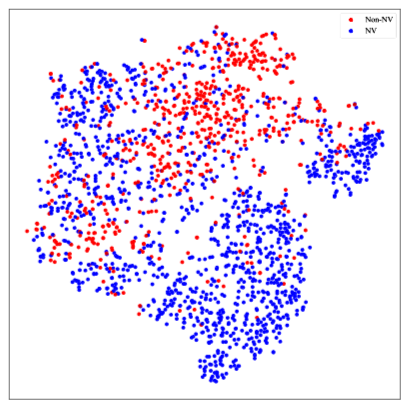

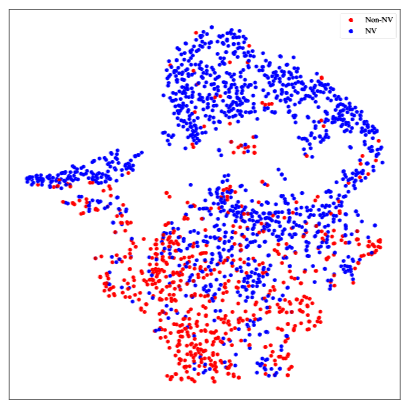

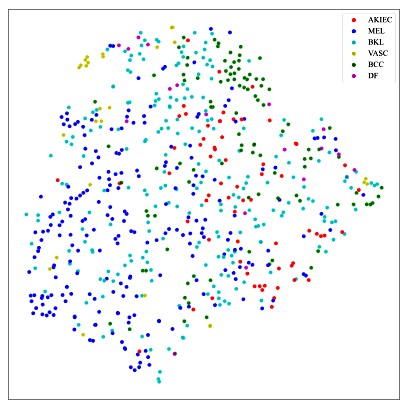

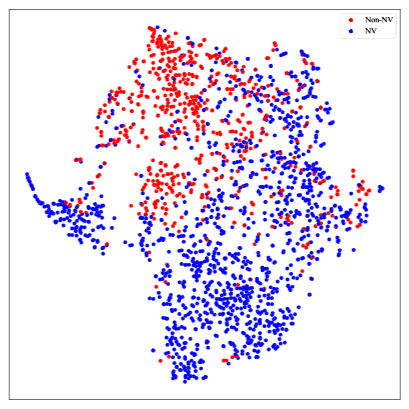

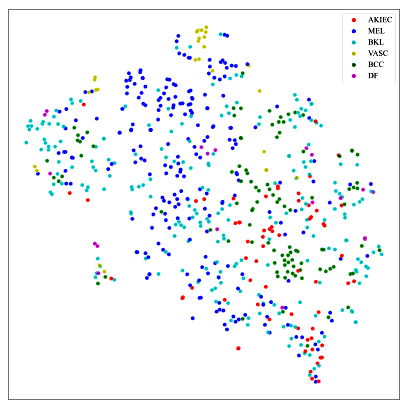

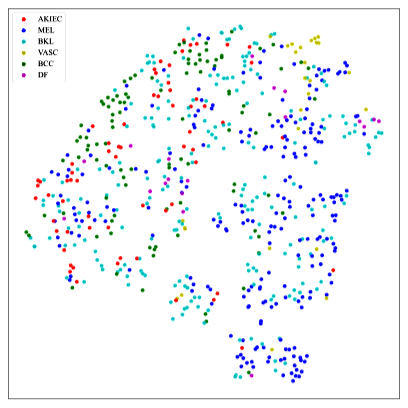

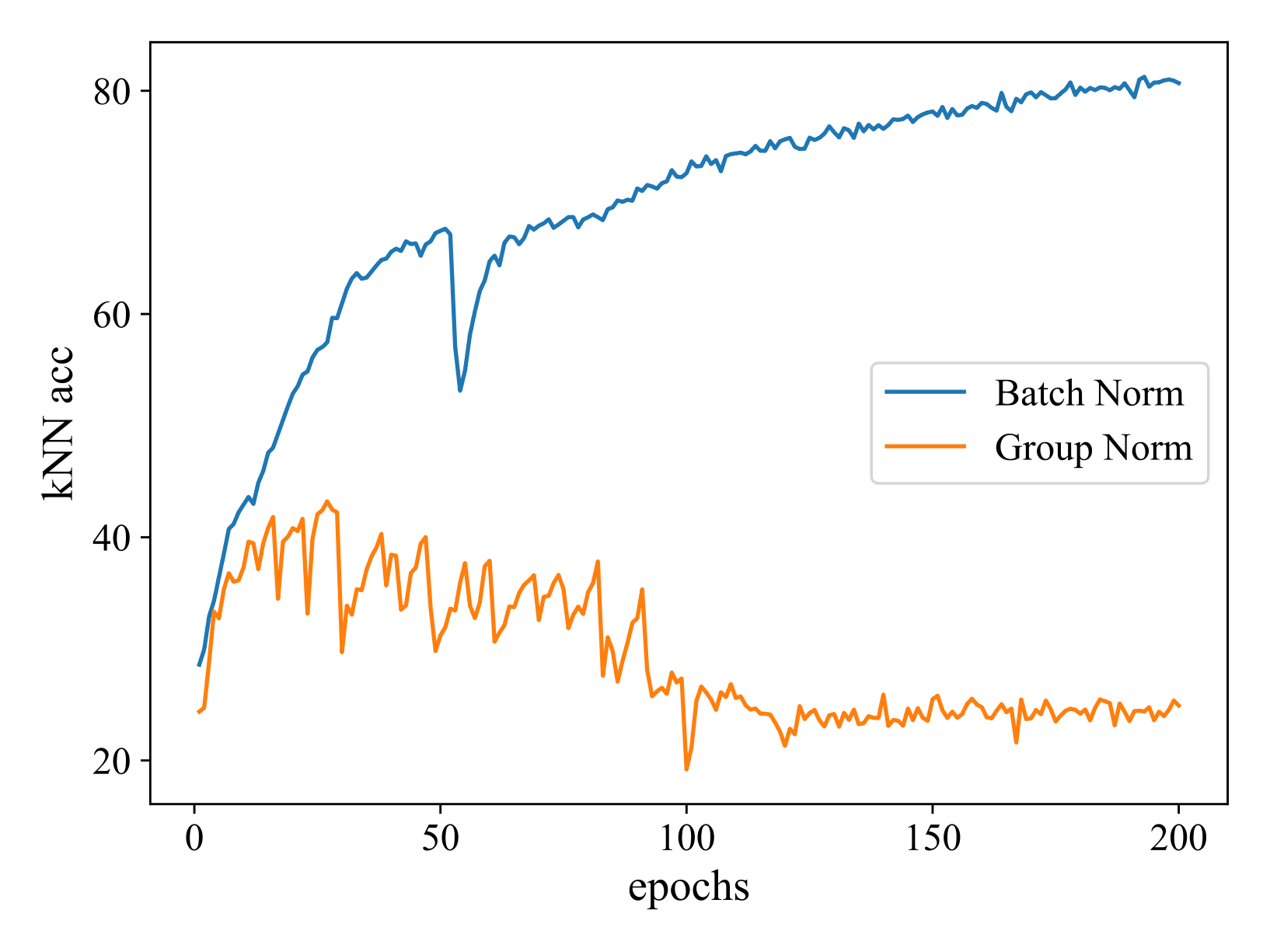

Annotated medical images are typically scarcer than labeled natural images because annotation requires domain knowledge and is limited by privacy constraints. Recent advances in transfer learning and contrastive learning have provided effective solutions to this problem from different perspectives. State-of-the-art transfer learning (e.g., Big Transfer (BiT)) and contrastive learning (e.g., Simple Siamese Contrastive Learning (SimSiam)) approaches have been investigated independently, without considering their complementary nature. Because slow convergence is a critical limitation of modern contrastive learning approaches, it would be appealing to accelerate contrastive learning with transfer learning. In this paper, we investigate the feasibility of aligning BiT with SimSiam. Our empirical analyses indicate that the difference in normalization techniques (Group Norm in BiT vs. Batch Norm in SimSiam) is the key hurdle in adapting BiT to SimSiam. We evaluated the performance of BiT, SimSiam, and BiT+SimSiam on the CIFAR-10 and HAM10000 datasets. The results suggest that BiT models accelerate the convergence of SimSiam, and that the combined model gives superior performance over both of its counterparts. We hope this study will motivate researchers to revisit the task of aggregating big pre-trained models with contrastive learning models for image analysis.
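To make the normalization difference concrete, the following is a minimal sketch of the two schemes in plain Python. It is not the BiT or SimSiam implementation and does not reproduce the analysis in this work. It assumes each sample is a flat vector of channel values (no spatial dimensions) and omits the learnable scale and shift. The names `batch_norm`, `group_norm`, `_normalize`, and `EPS` are hypothetical and chosen for illustration only.

```python
import math

EPS = 1e-5  # small constant for numerical stability (assumed value)


def _normalize(values):
    """Shift and scale a list of floats to zero mean and (near) unit variance."""
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return [(v - mean) / math.sqrt(var + EPS) for v in values]


def batch_norm(batch):
    """Normalize each channel using statistics taken across the batch."""
    num_channels = len(batch[0])
    columns = [
        _normalize([sample[c] for sample in batch]) for c in range(num_channels)
    ]
    # Transpose back to the [sample][channel] layout.
    return [[columns[c][i] for c in range(num_channels)] for i in range(len(batch))]


def group_norm(batch, num_groups):
    """Normalize each sample on its own, over groups of its channels."""
    num_channels = len(batch[0])
    assert num_channels % num_groups == 0, "channels must split evenly into groups"
    size = num_channels // num_groups
    out = []
    for sample in batch:
        row = []
        for g in range(num_groups):
            row.extend(_normalize(sample[g * size:(g + 1) * size]))
        out.append(row)
    return out


# The same sample placed in two batches that differ only in the other sample.
sample = [1.0, 2.0, 3.0, 6.0]
batch_a = [sample, [0.0, 1.0, 0.0, 1.0]]
batch_b = [sample, [9.0, -4.0, 7.0, 2.0]]

gn_a = group_norm(batch_a, num_groups=2)[0]
gn_b = group_norm(batch_b, num_groups=2)[0]
bn_a = batch_norm(batch_a)[0]
bn_b = batch_norm(batch_b)[0]

# Group Norm output for the sample ignores the rest of the batch; Batch Norm does not.
gn_is_batch_independent = gn_a == gn_b
bn_is_batch_independent = bn_a == bn_b
```

In this sketch the Group Norm output for `sample` is identical in both batches, while the Batch Norm output changes with the other batch member. This shows only that the two layers compute different statistics, which is one reason a backbone pre-trained with one scheme may not drop directly into a pipeline built around the other.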
