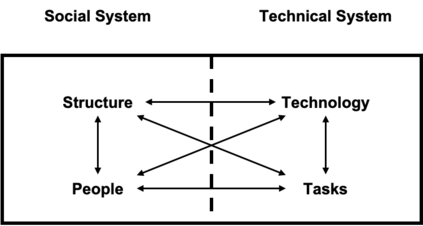

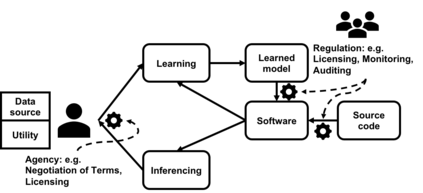

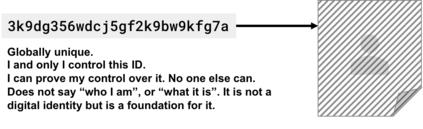

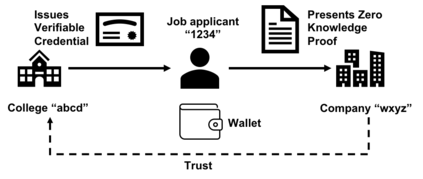

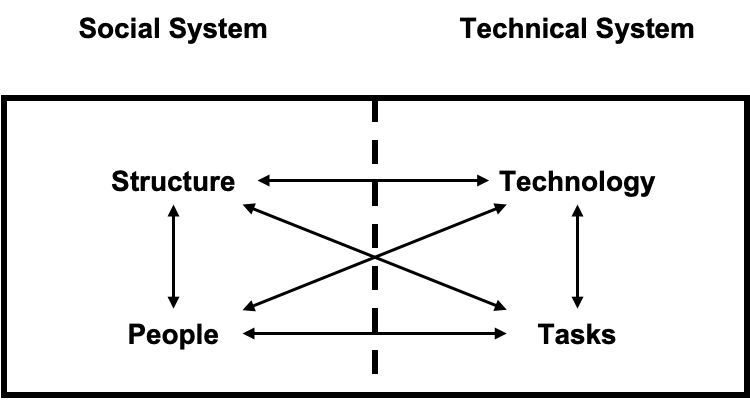

For AI technology to fulfill its promise, we must have effective means to ensure Responsible AI behavior and curtail potential irresponsible use, e.g., in the areas of privacy protection, human autonomy, robustness, and the prevention of bias and discrimination in automated decision making. Recent literature in the field has identified serious shortcomings of narrowly technology-focused and formalism-oriented research and has proposed an interdisciplinary approach that brings the social context into the scope of study. In this paper, we take a sociotechnical approach and propose a more expansive framework for thinking about Responsible AI challenges in both their technical and social contexts. Effective solutions need to bridge the gap between a technical system and the social system in which it will be deployed. To this end, we propose human agency and regulation as the main mechanisms of intervention, and a decentralized computational infrastructure, or a set of public utilities, as the computational means to bridge this gap. A decentralized infrastructure is uniquely suited to meeting this challenge and to enabling technical solutions and social institutions to work in a mutually reinforcing dynamic toward Responsible AI goals. Our work is novel in its sociotechnical approach and in its aim of tackling structural issues that cannot be solved within the narrow confines of AI technical research. We then explore possible features of the proposed infrastructure and discuss how it may help solve example problems recently studied in the field.
