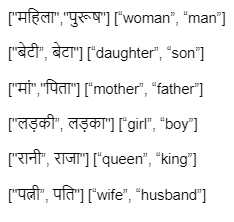

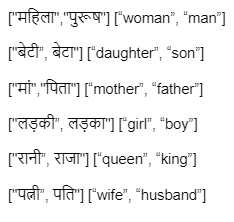

As natural language processing becomes more prevalent in day-to-day life, the need to address the gender bias inherent in these systems grows as well. Such bias distorts the semantics of system output in tasks like machine translation. While research on quantifying and mitigating bias in English is ongoing, debiasing methods for Indic languages are either relatively nascent or, for some languages, absent altogether. Most Indic languages are gendered, i.e., each noun is assigned a gender according to the language's grammar rules. As a consequence, evaluation differs from what is done in English: some words have distinct masculine and feminine counterparts, so the methodology must be adapted. This paper evaluates gender stereotypes in Hindi and Marathi. We create a dataset of neutral and gendered occupation words and emotion words, and measure bias using the Embedding Coherence Test (ECT) and Relative Norm Distance (RND). We also attempt to mitigate this bias in the embeddings. Experiments show that our proposed debiasing techniques reduce gender bias in these languages.
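To make the two metrics named above concrete, here is a minimal sketch of ECT and RND as they are commonly defined in the embedding-bias literature. It is not the paper's implementation: the function names `ect` and `rnd`, the re-normalization of the group mean vectors, and the random toy vectors standing in for Hindi or Marathi embeddings are all assumptions made for illustration, and the paper's handling of gendered word pairs may differ.

```python
import numpy as np


def _unit(v):
    """Scale vectors to unit L2 norm along the last axis."""
    return v / np.linalg.norm(v, axis=-1, keepdims=True)


def _ranks(x):
    """Ordinal ranks of x (ties are not averaged; adequate for real-valued similarities)."""
    return np.argsort(np.argsort(x)).astype(float)


def ect(male, female, targets):
    """Embedding Coherence Test (sketch).

    Spearman rank correlation between the targets' cosine similarities
    to the male mean vector and to the female mean vector.
    Values closer to 1 indicate less measured bias.
    """
    m = _unit(male.mean(axis=0))
    f = _unit(female.mean(axis=0))
    t = _unit(targets)
    sim_m = t @ m  # cosine similarity of each target to the male mean
    sim_f = t @ f  # cosine similarity of each target to the female mean
    # Spearman correlation computed as Pearson correlation of the ranks.
    return float(np.corrcoef(_ranks(sim_m), _ranks(sim_f))[0, 1])


def rnd(male, female, targets):
    """Relative Norm Distance (sketch).

    Sum over targets of ||t - m|| - ||t - f||. Negative values mean the
    targets sit closer to the male group, positive values closer to the
    female group, and values near 0 indicate less measured bias.
    """
    m = _unit(male.mean(axis=0))
    f = _unit(female.mean(axis=0))
    t = _unit(targets)
    dist_m = np.linalg.norm(t - m, axis=1)
    dist_f = np.linalg.norm(t - f, axis=1)
    return float(np.sum(dist_m - dist_f))


if __name__ == "__main__":
    # Random vectors as stand-ins for real embeddings (illustration only).
    rng = np.random.default_rng(0)
    male_vecs = rng.normal(size=(4, 16))        # e.g. masculine definitional words
    female_vecs = rng.normal(size=(4, 16))      # e.g. feminine definitional words
    occupation_vecs = rng.normal(size=(6, 16))  # e.g. neutral occupation words
    print("ECT:", ect(male_vecs, female_vecs, occupation_vecs))
    print("RND:", rnd(male_vecs, female_vecs, occupation_vecs))
```

Under these definitions a debiasing step would be expected to move ECT toward 1 and RND toward 0 when the metrics are recomputed on the modified embeddings.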
