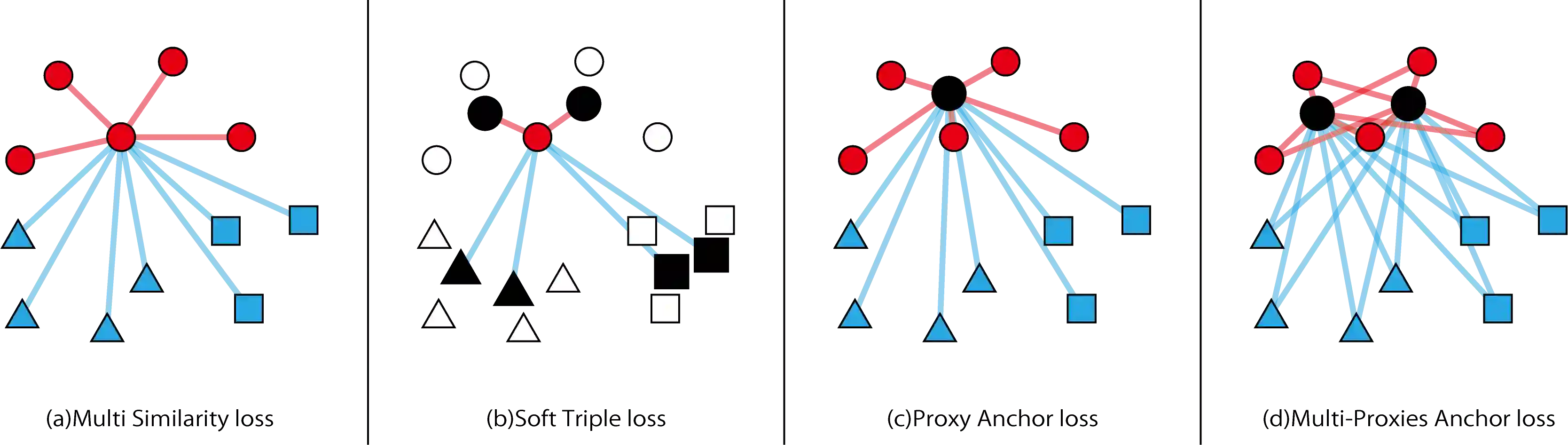

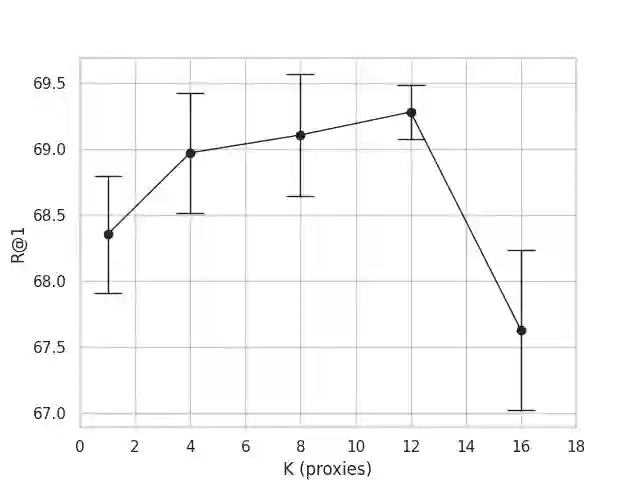

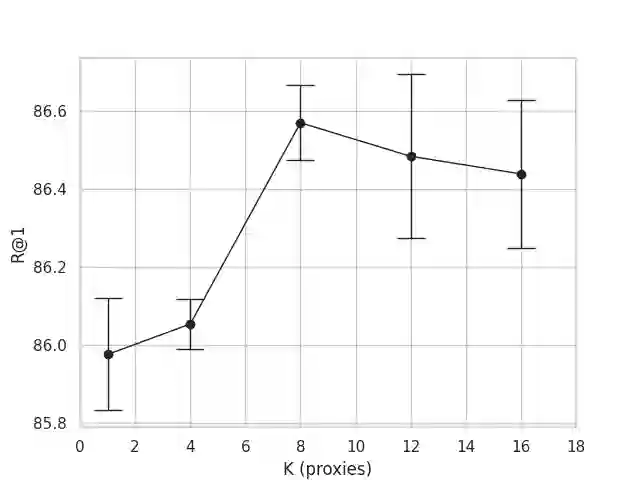

Deep metric learning (DML) learns a mapping into an embedding space in which similar data are close together and dissimilar data are far apart. However, conventional proxy-based losses for DML have two problems: gradient issues and difficulty handling real-world datasets with multiple local centers. In addition, existing DML performance metrics have issues with stability and flexibility. This paper proposes the multi-proxies anchor (MPA) loss and the normalized discounted cumulative gain (nDCG@k) metric. The study makes three contributions: (1) MPA loss can learn real-world datasets with multiple local centers. (2) MPA loss improves the training capacity of a neural network by solving the gradient issues. (3) The nDCG@k metric encourages a more complete evaluation across various datasets. Finally, we demonstrate the effectiveness of MPA loss, which achieves higher accuracy on two fine-grained image datasets.
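For readers unfamiliar with nDCG@k, the following is a minimal sketch of the standard textbook definition, assuming binary relevance (1 if a retrieved item shares the query's class, 0 otherwise) and a logarithmic rank discount. The function names and the example ranking are hypothetical, and the exact variant evaluated in the paper may differ. As a simplification, the ideal ordering here is computed from the same retrieved list rather than from the full gallery.

```python
import math

def dcg_at_k(relevances, k):
    """Discounted cumulative gain over the first k ranked items."""
    # rank is 0-based, so the discount for the top item is log2(2) = 1.
    return sum(rel / math.log2(rank + 2) for rank, rel in enumerate(relevances[:k]))

def ndcg_at_k(relevances, k):
    """DCG@k divided by the DCG@k of the ideal (descending) ordering."""
    ideal = dcg_at_k(sorted(relevances, reverse=True), k)
    return dcg_at_k(relevances, k) / ideal if ideal > 0 else 0.0

# Hypothetical retrieval result for one query:
# 1 = same class as the query, 0 = different class.
ranked_relevance = [1, 0, 1, 1, 0]
score = ndcg_at_k(ranked_relevance, k=5)
```

Unlike a hit-or-miss measure at rank 1, this score depends on where every relevant item falls within the top k, which may be why a rank-sensitive metric is attractive for comparing embeddings across datasets.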
