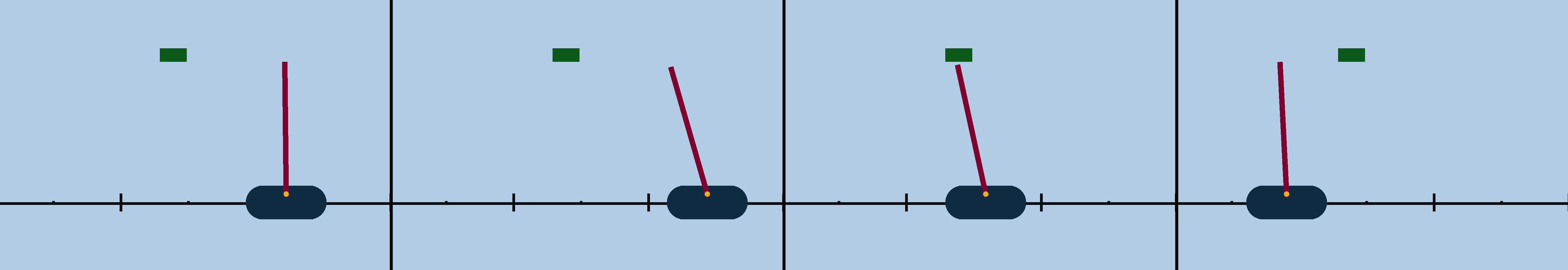

The automatic synthesis of neural-network controllers for autonomous agents through reinforcement learning has to simultaneously optimize many, possibly conflicting, objectives of varying importance. This multi-objective optimization task is reflected in the shape of the reward function, which is most often the result of an ad hoc, handcrafted process. In this paper, we propose a principled approach to shaping rewards for reinforcement learning from multiple objectives that are given as a partially ordered set of signal temporal logic (STL) rules. To this end, we first equip STL with a novel quantitative semantics that allows individual requirements to be evaluated automatically. We then develop a method for systematically combining the evaluations of multiple requirements into a single reward that takes into account the priorities defined by the partial order. Finally, we evaluate our approach on several case studies, demonstrating its practical applicability.
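To make the two steps concrete, here is a minimal Python sketch. It is not the semantics or the combination rule proposed in the paper, which the abstract does not spell out. It assumes the classic min/max robustness for two simple STL formulas, and it simplifies the partial order to ranked priority levels whose scores are clipped and weighted so that a higher level dominates all lower ones. The function names, the `base` parameter, and the example signals are all hypothetical.

```python
from typing import Sequence


def always_greater(signal: Sequence[float], threshold: float) -> float:
    """Classic robustness of G (x > threshold): the worst-case margin over time."""
    return min(x - threshold for x in signal)


def eventually_less(signal: Sequence[float], threshold: float) -> float:
    """Classic robustness of F (x < threshold): the best-case margin over time."""
    return max(threshold - x for x in signal)


def prioritized_reward(levels: Sequence[Sequence[float]], base: float = 10.0) -> float:
    """Combine per-requirement scores into one scalar (hypothetical scheme).

    levels[0] holds the scores of the highest-priority requirements.
    Each level's mean score is clipped to [-1, 1] and weighted by
    base ** (number of levels below it), so higher levels dominate.
    """
    n = len(levels)
    total = 0.0
    for i, scores in enumerate(levels):
        mean = sum(scores) / len(scores)
        clipped = max(-1.0, min(1.0, mean))
        total += base ** (n - 1 - i) * clipped
    return total


# Hypothetical episode: distance to an obstacle/goal and speed over four steps.
distance = [2.0, 1.5, 0.8, 0.3]
speed = [0.5, 0.9, 1.2, 0.7]

safety = always_greater(distance, 0.2)                # never closer than 0.2
progress = eventually_less(distance, 0.5)             # eventually within 0.5
comfort = always_greater([-s for s in speed], -1.0)   # speed always below 1.0

reward = prioritized_reward([[safety], [progress], [comfort]])
```

With these weights, a violated safety rule cannot be compensated by satisfying every lower-priority rule, which is one simple way to honor a priority ordering; other combination schemes are equally plausible.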
