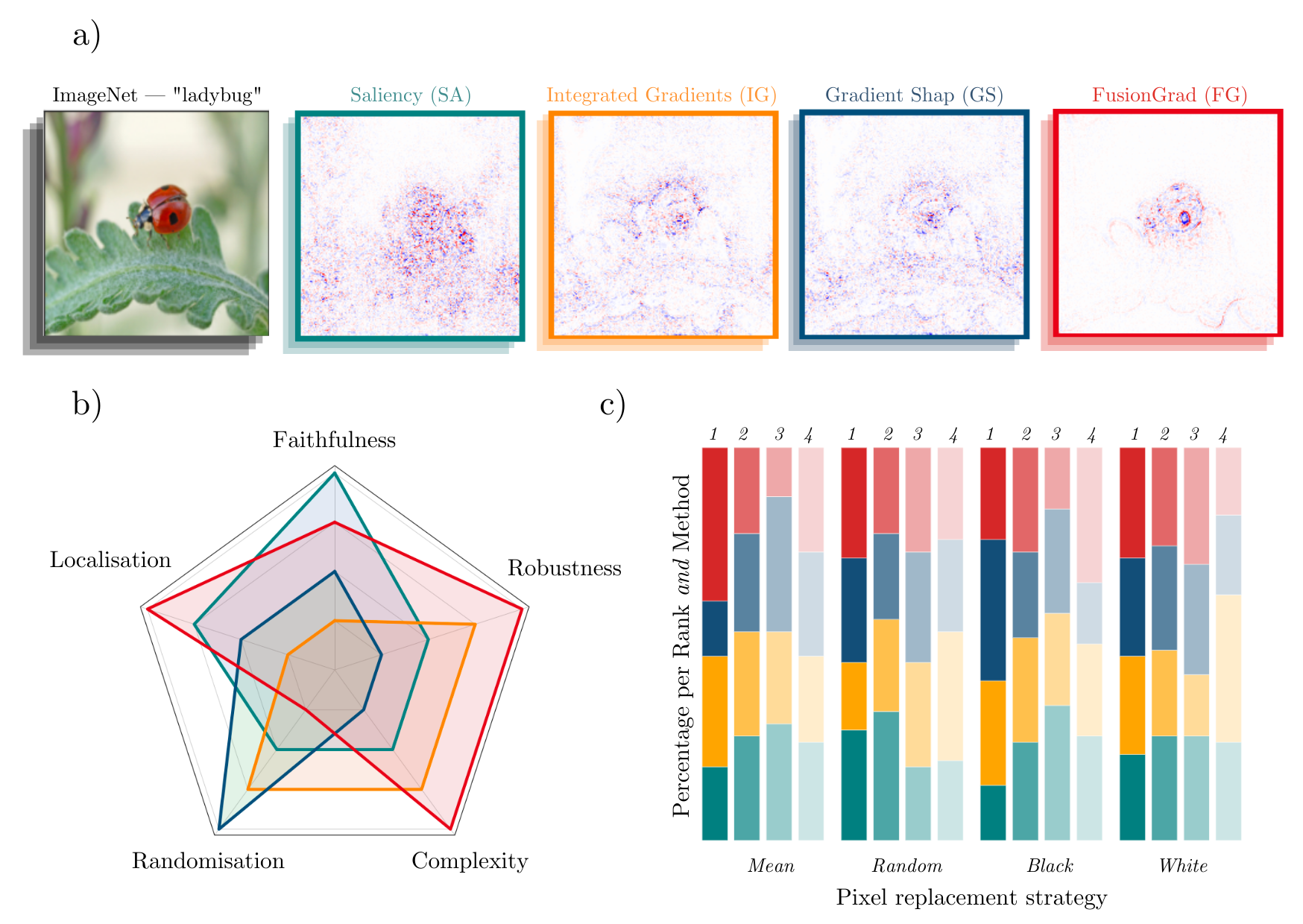

The evaluation of explanation methods is a research topic that has not yet been explored deeply. However, since explainability is supposed to strengthen trust in artificial intelligence, it is necessary to systematically review and compare explanation methods in order to confirm their correctness. Until now, no tool has allowed researchers to quantitatively evaluate explanations of neural network predictions both exhaustively and quickly. To increase transparency and reproducibility in the field, we therefore built Quantus, a comprehensive, open-source toolkit in Python that includes a growing, well-organised collection of evaluation metrics and tutorials for evaluating explanation methods. The toolkit has been thoroughly tested and is available under an open-source license on PyPI (or at https://github.com/understandable-machine-intelligence-lab/quantus/).
