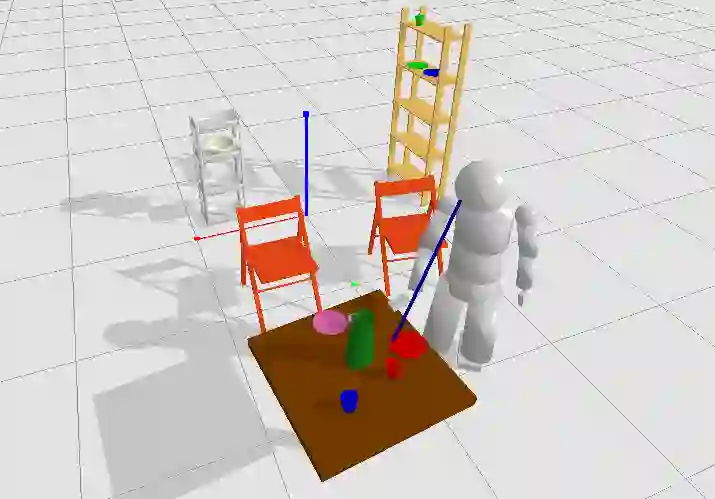

As robots are becoming more and more ubiquitous in human environments, it will be necessary for robotic systems to better understand and predict human actions. However, this is not an easy task, at times not even for us humans, but based on a relatively structured set of possible actions, appropriate cues, and the right model, this problem can be computationally tackled. In this paper, we propose to use an ensemble of long-short term memory (LSTM) networks for human action prediction. To train and evaluate models, we used the MoGaze dataset - currently the most comprehensive dataset capturing poses of human joints and the human gaze. We have thoroughly analyzed the MoGaze dataset and selected a reduced set of cues for this task. Our model can predict (i) which of the labeled objects the human is going to grasp, and (ii) which of the macro locations the human is going to visit (such as table or shelf). We have exhaustively evaluated the proposed method and compared it to individual cue baselines. The results suggest that our LSTM model slightly outperforms the gaze baseline in single object picking accuracy, but achieves better accuracy in macro object prediction. Furthermore, we have also analyzed the prediction accuracy when the gaze is not used, and in this case, the LSTM model considerably outperformed the best single cue baseline

翻译:随着机器人在人类环境中越来越普遍,机器人系统将有必要更好地了解和预测人类的行动。然而,这不是一件容易的任务,有时甚至对我们人类来说,这并非易事,而是根据一套相对结构化的可能行动、适当的提示和正确的模型,这一问题可以计算解决。在本文件中,我们提议使用一个长期短期内存(LSTM)网络的组合,用于人类行动预测。为了培训和评估模型,我们使用了MoGaze数据集——目前这是最全面的数据集,可以捕捉人类的关节和视觉。我们彻底分析了MoGaze数据集,并为这项任务选择了一套较少的提示。我们的模型可以预测(一)人类将要捕捉的标签对象,以及(二)人类将要访问的宏观地点(如表或架子)的哪些。我们详尽地评估了拟议的方法,并将它与单个指示基线进行了比较。结果显示,我们的LSTMS模型略短短地超越了人类关连和视觉的组合基准,我们在一次宏观预测中选择了精确性,但更精确性地实现了我们所使用的直观的精确性。