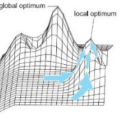

It is an ongoing debate whether and how comma selection in evolutionary algorithms helps to escape local optima. We propose a new benchmark function to investigate the benefits of comma selection: OneMax with randomly planted local optima, generated by frozen noise. We show that comma selection (the $(1,\lambda)$ EA) is faster than plus selection (the $(1+\lambda)$ EA) on this benchmark, in a fixed-target scenario, and for offspring population sizes $\lambda$ for which both algorithms behave differently. For certain parameters, the $(1,\lambda)$ EA finds the target in $\Theta(n \ln n)$ evaluations, with high probability (w.h.p.), while the $(1+\lambda)$ EA) w.h.p. requires almost $\Theta((n\ln n)^2)$ evaluations. We further show that the advantage of comma selection is not arbitrarily large: w.h.p. comma selection outperforms plus selection at most by a factor of $O(n \ln n)$ for most reasonable parameter choices. We develop novel methods for analysing frozen noise and give powerful and general fixed-target results with tail bounds that are of independent interest.

翻译:论文题目翻译:逗号选择优于加号选择的OneMax问题中随机种下最优点的情况

摘要翻译:目前存在一个争论,即逗号选择在进化算法中是否有助于逃脱局部最优解及如何做到这一点。为了探究逗号选择的好处,我们提出了一个新的基准函数:带有随机种下局部最优点的OneMax问题,通过冻结噪声生成。我们证明,在确定目标的场景下,采用逗号选择($(1,\lambda)$ EA)比采用加号选择($(1+\lambda)$ EA)更快,并且只适用于不同的后代种群大小$\lambda$时。对于某些参数,$(1,\lambda)$ EA可以在$\Theta(n \ln n)$次评估内,高概率地找到目标,而$(1+\lambda)$ EA需要几乎$\Theta((n\ln n)^2)$次评估。我们进一步证明,逗号选择的优势并不是任意大的:在大多数合理的参数选择下,w.h.p. 逗号选择最多比加号选择快$O(n \ln n)$倍。我们开发了分析冻结噪声的新方法,并给出了强大且普遍的确定目标结果的尾部界限,对此非常感兴趣。