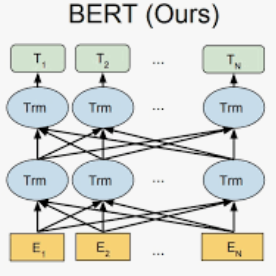

Phrase representations derived from BERT often do not exhibit complex phrasal compositionality, as the model relies instead on lexical similarity to determine semantic relatedness. In this paper, we propose a contrastive fine-tuning objective that enables BERT to produce more powerful phrase embeddings. Our approach (Phrase-BERT) relies on a dataset of diverse phrasal paraphrases, which is automatically generated using a paraphrase generation model, as well as a large-scale dataset of phrases in context mined from the Books3 corpus. Phrase-BERT outperforms baselines across a variety of phrase-level similarity tasks, while also demonstrating increased lexical diversity between nearest neighbors in the vector space. Finally, as a case study, we show that Phrase-BERT embeddings can be easily integrated with a simple autoencoder to build a phrase-based neural topic model that interprets topics as mixtures of words and phrases by performing a nearest neighbor search in the embedding space. Crowdsourced evaluations demonstrate that this phrase-based topic model produces more coherent and meaningful topics than baseline word and phrase-level topic models, further validating the utility of Phrase-BERT.
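
The abstract names two mechanisms: a contrastive fine-tuning objective and a nearest-neighbor search that interprets topics as mixtures of words and phrases. The sketch below illustrates both on toy vectors. Several assumptions are labeled here and in the code comments. The abstract does not give the exact loss, so a generic triplet margin loss stands in for the contrastive objective. That loss pulls an anchor phrase toward its paraphrase and away from a negative phrase. Topics are assumed to be vectors in the same embedding space as the vocabulary. The function names and toy embeddings are hypothetical and are not Phrase-BERT's code or real model outputs.

```python
import math

def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 0.0
    return dot / (norm_u * norm_v)

def triplet_loss(anchor, positive, negative, margin=1.0):
    """Generic triplet margin loss (an assumed stand-in for the paper's objective).

    Penalizes the anchor whenever its paraphrase (positive) is not at least
    `margin` closer, in Euclidean distance, than the negative phrase.
    """
    d_pos = math.dist(anchor, positive)
    d_neg = math.dist(anchor, negative)
    return max(0.0, d_pos - d_neg + margin)

def nearest_neighbors(query, vocab, k=3):
    """Return the k vocabulary terms (words or phrases) most cosine-similar to `query`.

    `vocab` maps each term to its embedding; `query` is assumed to be a topic
    vector living in the same embedding space.
    """
    scored = [(cosine(query, vec), term) for term, vec in vocab.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [term for _, term in scored[:k]]

# Hypothetical toy embeddings; real Phrase-BERT vectors are much higher-dimensional.
vocab = {
    "grilled cheese": [0.9, 0.1, 0.0],
    "toasted sandwich": [0.85, 0.15, 0.05],
    "cheese": [0.7, 0.6, 0.0],
    "stock market": [0.0, 0.2, 0.95],
}
topic = [0.88, 0.12, 0.02]
top_terms = nearest_neighbors(topic, vocab, k=2)
```

With these toy vectors, the topic is described by a mixture of phrases close to it in the space. The triplet loss is zero once a paraphrase is already closer to the anchor than the negative by the margin.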
