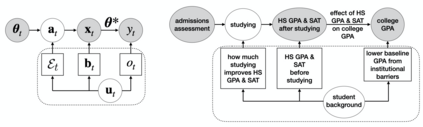

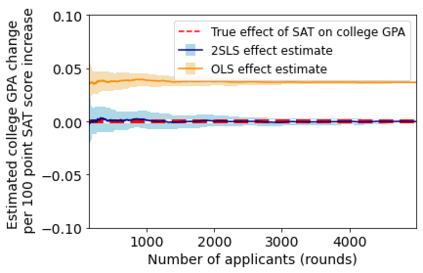

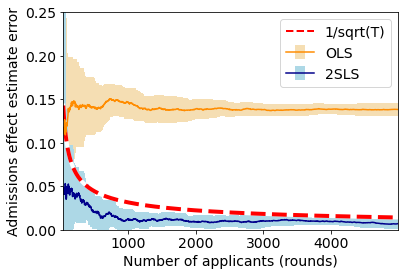

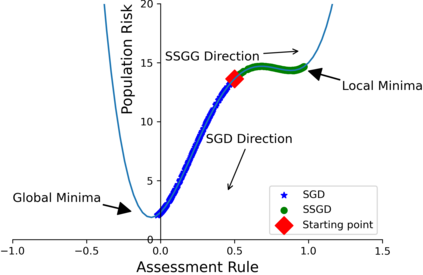

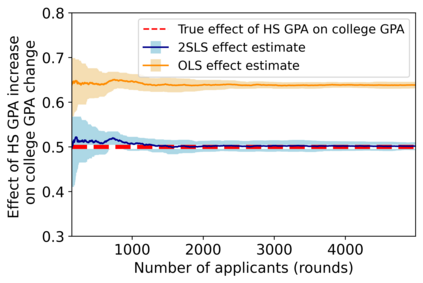

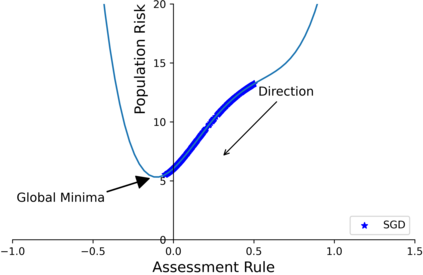

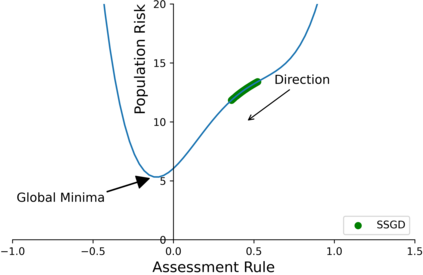

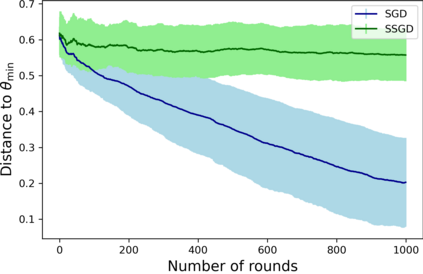

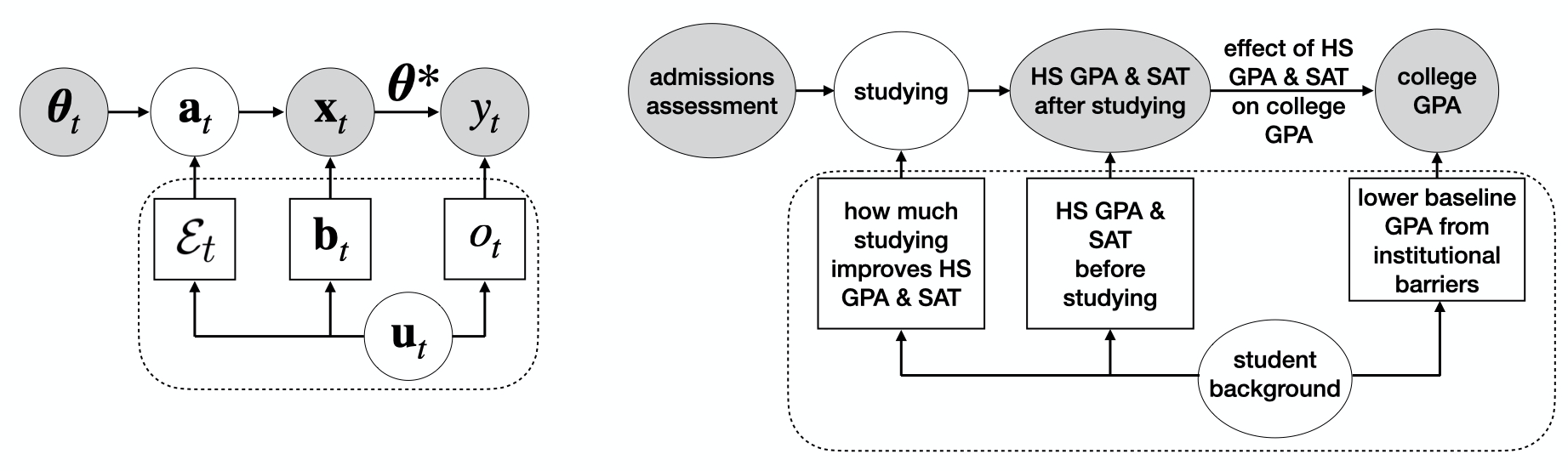

In settings where Machine Learning (ML) algorithms automate or inform consequential decisions about people, individual decision subjects are often incentivized to strategically modify their observable attributes to receive more favorable predictions. As a result, the distribution the assessment rule is trained on may differ from the one it operates on in deployment. While such distribution shifts can, in general, hinder accurate predictions, our work identifies a unique opportunity associated with shifts due to strategic responses: we show that strategic responses can be used effectively to recover the causal relationships between the observable features and the outcomes we wish to predict, even in the presence of unobserved confounding variables. Specifically, our work establishes a novel connection between strategic responses to ML models and instrumental variable (IV) regression by observing that the sequence of deployed models can be viewed as an instrument that affects agents' observable features but does not directly influence their outcomes. We show that our causal recovery method can be used to improve decision-making across several important criteria: individual fairness, agent outcomes, and predictive risk. In particular, we show that if decision subjects differ in their ability to modify non-causal attributes, any decision rule deviating from the causal coefficients can lead to (potentially unbounded) individual-level unfairness.
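The instrument idea in the abstract can be illustrated with a small simulation. This is a minimal sketch under simplifying assumptions, not the paper's algorithm: outcomes are linear in the features, agents shift each feature in proportion to the deployed coefficient, and the deployed rules are drawn independently of the agents' unobserved types. The function names (`simulate`, `ols`, `two_stage_least_squares`), the `effort` vector, and all numeric values are hypothetical choices made for illustration.

```python
import numpy as np


def simulate(n_rounds=20000, seed=0):
    """Generate one agent per round under a toy strategic-response model (assumed)."""
    rng = np.random.default_rng(seed)
    # Hypothetical causal coefficients: feature 0 is causal, feature 1 is not.
    theta_star = np.array([1.0, 0.0])
    # Unobserved confounder: drives both baseline features and outcomes.
    u = rng.normal(size=n_rounds)
    baseline = np.column_stack([u, u]) + rng.normal(size=(n_rounds, 2))
    outcome_noise = 2.0 * u + rng.normal(size=n_rounds)
    # Deployed decision rules, chosen independently of the agents' private types.
    theta = rng.normal(size=(n_rounds, 2))
    # Assumed strategic response: each feature moves in proportion to its
    # deployed coefficient, scaled by how easy that feature is to change.
    effort = np.array([0.5, 1.5])
    x = baseline + theta * effort
    y = x @ theta_star + outcome_noise
    return theta, x, y, theta_star


def add_intercept(a):
    """Prepend a column of ones."""
    return np.column_stack([np.ones(len(a)), a])


def ols(x, y):
    """Ordinary least squares of y on x; returns the slope coefficients."""
    coef, *_ = np.linalg.lstsq(add_intercept(x), y, rcond=None)
    return coef[1:]


def two_stage_least_squares(instrument, x, y):
    """2SLS: regress x on the instrument, then y on the fitted x."""
    z = add_intercept(instrument)
    first_stage, *_ = np.linalg.lstsq(z, x, rcond=None)
    x_hat = z @ first_stage
    coef, *_ = np.linalg.lstsq(add_intercept(x_hat), y, rcond=None)
    return coef[1:]


if __name__ == "__main__":
    theta, x, y, theta_star = simulate()
    print("true causal coefficients:", theta_star)
    print("OLS estimate (confounded):", ols(x, y))
    print("2SLS estimate (deployed rule as instrument):",
          two_stage_least_squares(theta, x, y))
```

In this toy setup the deployed rule changes the observed features but enters the outcome only through them, which is the exclusion condition an instrument needs. Ordinary least squares absorbs the confounder into its coefficients, while the two-stage estimate should land close to the causal coefficients as the number of rounds grows.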
