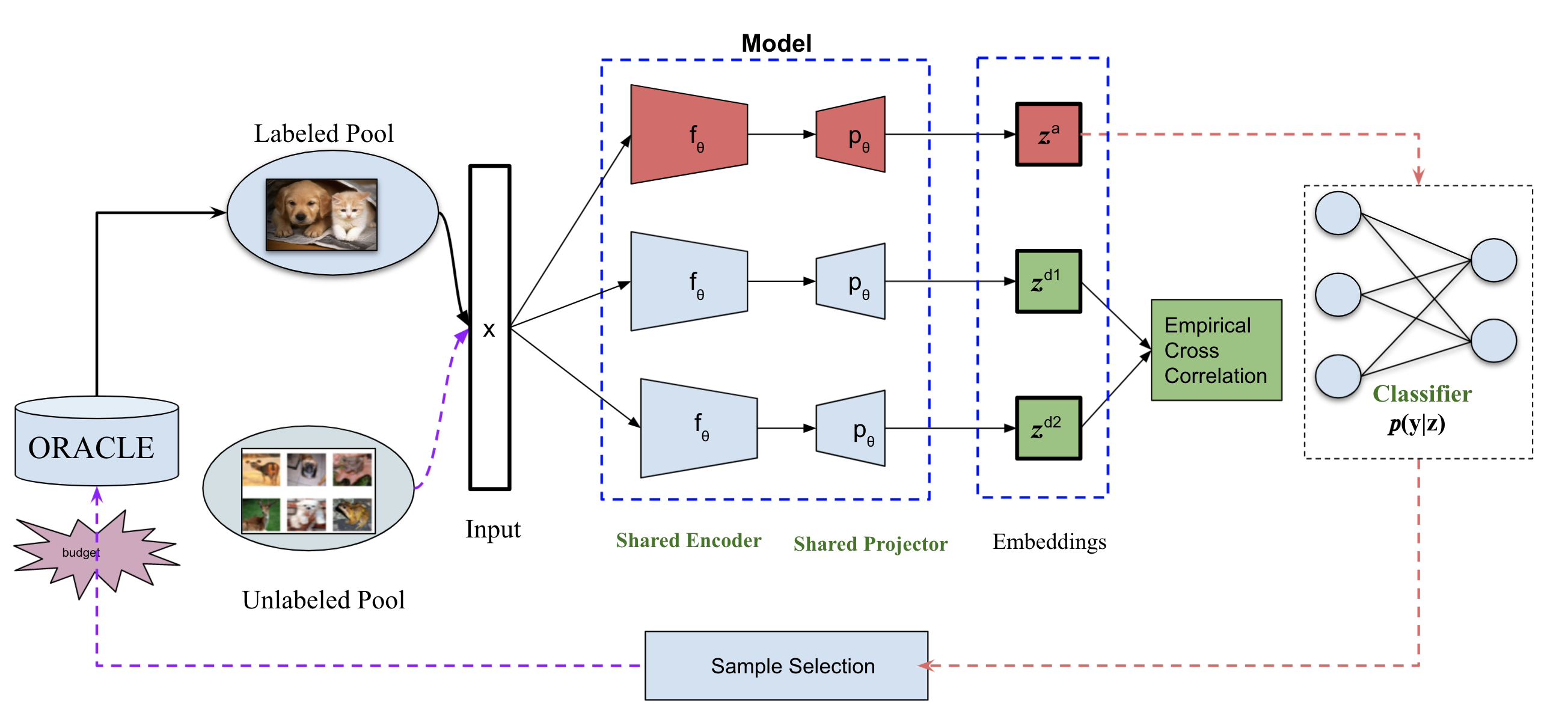

The generalisation performance of a convolutional neural network (CNN) depends heavily on the quantity, quality, and diversity of its training images. All training data must be annotated beforehand, yet in many real-world applications data are easy to acquire but expensive and time-consuming to label. The goal of active learning in this setting is to select the most informative samples from the unlabeled pool, which can then be annotated and used for training. With an entirely different objective, self-supervised learning (SSL) has been rapidly gaining popularity by closing the performance gap with supervised methods on large computer vision benchmarks. SSL methods have been shown to produce low-level representations that are invariant to distortions of the input sample, encoding invariance to artificially created distortions such as rotation, solarization, and cropping. SSL approaches also rely on simpler and more scalable learning frameworks. In this paper, we unify these two families of approaches from the perspective of active learning using the self-supervised learning manifold, and propose Deep Active Learning using Barlow Twins (DALBT), an active learning method applicable across datasets that combines a classifier trained jointly with the Barlow Twins self-supervised loss, so that the model encodes invariance to artificially created distortions such as rotation, solarization, and cropping.
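The generic active learning step described above (train on the labeled set, score the unlabeled pool, send the most informative samples to an annotator) can be sketched as follows. This is an illustration under stated assumptions, not DALBT's selection rule: the abstract does not name its acquisition function, so predictive-entropy uncertainty sampling, a common baseline, is swapped in here. The callables `train`, `predict_proba`, and `oracle` are hypothetical placeholders for a training routine, a model's class-probability output, and a human labeler.

```python
import math
from typing import Any, Callable, Dict, List, Sequence, Tuple


def predictive_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def select_for_annotation(pool_probs: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Return the ids of the `budget` pool samples with the highest entropy.

    Entropy-based uncertainty sampling is an assumed stand-in criterion,
    not the acquisition function used by DALBT.
    """
    ranked = sorted(pool_probs, key=lambda k: predictive_entropy(pool_probs[k]), reverse=True)
    return ranked[:budget]


def active_learning_round(
    labeled: List[Tuple[Any, Any]],
    unlabeled: Dict[str, Any],
    train: Callable[[List[Tuple[Any, Any]]], Any],       # hypothetical trainer
    predict_proba: Callable[[Any, Any], Sequence[float]],  # hypothetical model output
    oracle: Callable[[str], Any],                          # hypothetical annotator
    budget: int,
) -> List[str]:
    """One query round: train, score the pool, move queried samples to `labeled`."""
    model = train(labeled)
    probs = {x_id: predict_proba(model, x) for x_id, x in unlabeled.items()}
    picked = select_for_annotation(probs, budget)
    for x_id in picked:
        x = unlabeled.pop(x_id)
        labeled.append((x, oracle(x_id)))
    return picked
```

A sample whose predicted distribution is close to uniform has high entropy and is queried first; confidently classified samples stay in the pool for later rounds.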

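The Barlow Twins objective that DALBT builds on can be sketched in pure Python. Given embeddings of two distorted views of the same batch, each feature is standardized over the batch, the cross-correlation matrix C is formed, and the loss pushes diagonal entries of C toward 1 (invariance to the distortion) and off-diagonal entries toward 0 (redundancy reduction). The default weight `lambd = 5e-3` follows the original Barlow Twins paper. The `joint_loss` helper, which adds a cross-entropy classifier term with a weight `alpha`, is a hypothetical illustration of "a classifier trained jointly with" this loss. The abstract does not specify how DALBT weights or combines the two terms.

```python
import math
from typing import List, Sequence

Matrix = List[List[float]]


def _standardize_columns(z: Sequence[Sequence[float]], eps: float = 1e-8) -> Matrix:
    """Mean-center each feature (column) over the batch and scale it to unit std."""
    n, d = len(z), len(z[0])
    out = [[0.0] * d for _ in range(n)]
    for j in range(d):
        col = [z[b][j] for b in range(n)]
        mean = sum(col) / n
        std = math.sqrt(sum((x - mean) ** 2 for x in col) / n)  # biased (population) std
        for b in range(n):
            out[b][j] = (col[b] - mean) / (std + eps)
    return out


def barlow_twins_loss(z_a: Sequence[Sequence[float]], z_b: Sequence[Sequence[float]],
                      lambd: float = 5e-3) -> float:
    """Barlow Twins loss for two (N x D) embedding batches of the same images."""
    n, d = len(z_a), len(z_a[0])
    za, zb = _standardize_columns(z_a), _standardize_columns(z_b)
    # Cross-correlation matrix C (D x D), averaged over the batch dimension.
    c = [[sum(za[b][i] * zb[b][j] for b in range(n)) / n for j in range(d)]
         for i in range(d)]
    on_diag = sum((1.0 - c[i][i]) ** 2 for i in range(d))          # invariance term
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)  # redundancy term
    return on_diag + lambd * off_diag


def cross_entropy(logits: Sequence[float], label: int) -> float:
    """Numerically stable softmax cross-entropy for a single example."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[label]


def joint_loss(logits_batch: Sequence[Sequence[float]], labels: Sequence[int],
               z_a: Sequence[Sequence[float]], z_b: Sequence[Sequence[float]],
               alpha: float = 1.0, lambd: float = 5e-3) -> float:
    """Hypothetical joint objective: mean cross-entropy + alpha * Barlow Twins loss."""
    ce = sum(cross_entropy(l, y) for l, y in zip(logits_batch, labels)) / len(labels)
    return ce + alpha * barlow_twins_loss(z_a, z_b, lambd)
```

When the two views produce identical, decorrelated features, C is close to the identity and the Barlow Twins term is near zero; if one view's features are negated, every diagonal entry of C becomes -1 and the invariance term is heavily penalized.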