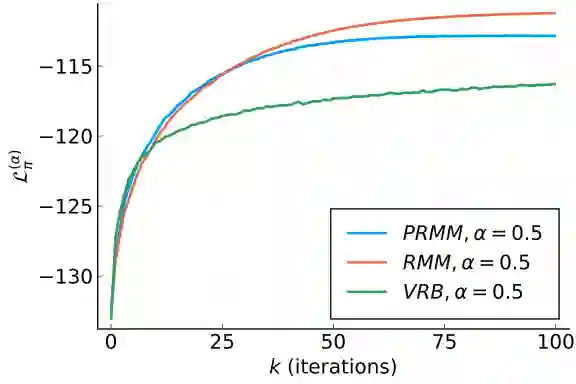

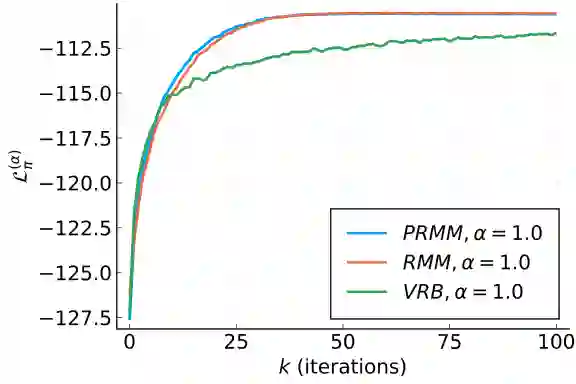

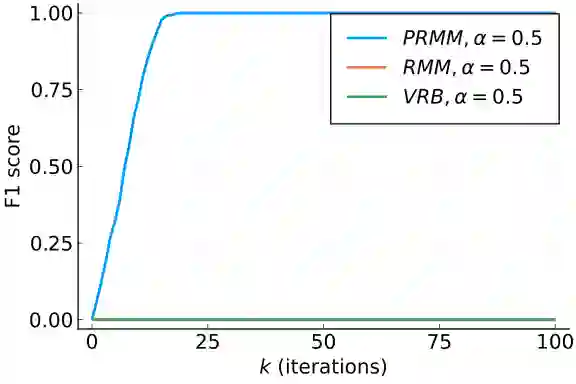

We study the variational inference problem of minimizing a regularized Rényi divergence over an exponential family and propose a relaxed moment-matching algorithm that includes a proximal-like step. Using the information-geometric link between Bregman divergences and the Kullback-Leibler divergence, we show that this algorithm is equivalent to a Bregman proximal gradient algorithm. This perspective allows us to exploit the geometry of the approximating family while using stochastic black-box updates. We use it to prove strong convergence guarantees, including monotonic decrease of the objective, convergence to a stationary point or to the minimizer, and convergence rates. These theoretical insights lead to a versatile, robust, and competitive method, as illustrated by numerical experiments.
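The link between Bregman divergences and the Kullback-Leibler divergence mentioned above can be checked numerically on a small case. The sketch below is an illustration only, not the algorithm from the paper: it assumes the Poisson family, whose natural parameter is the log-rate and whose log-partition function is the exponential, and confirms that the KL divergence between two members equals the Bregman divergence of the log-partition function with the arguments swapped. All function names are hypothetical.

```python
import math


def log_partition(theta):
    # Log-partition function A(theta) of the Poisson family,
    # with natural parameter theta = log(rate).
    return math.exp(theta)


def log_partition_grad(theta):
    # A'(theta), which is the mean parameter (the rate itself).
    return math.exp(theta)


def bregman(theta_a, theta_b):
    # Bregman divergence of A: A(a) - A(b) - A'(b) * (a - b).
    return (
        log_partition(theta_a)
        - log_partition(theta_b)
        - log_partition_grad(theta_b) * (theta_a - theta_b)
    )


def kl_poisson(rate_p, rate_q):
    # Closed-form KL(Poisson(rate_p) || Poisson(rate_q)).
    return rate_p * math.log(rate_p / rate_q) - rate_p + rate_q


def check_identity(rate_p, rate_q):
    # Returns both sides of KL(p || q) = B_A(theta_q, theta_p).
    theta_p, theta_q = math.log(rate_p), math.log(rate_q)
    return kl_poisson(rate_p, rate_q), bregman(theta_q, theta_p)


if __name__ == "__main__":
    kl_value, bregman_value = check_identity(2.0, 5.0)
    print(kl_value, bregman_value)
```

The two printed values should agree up to floating-point error. Identities of this kind are what let a KL-regularized (proximal-like) step over an exponential family be read as a Bregman proximal step in the natural parameters.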
