打通多个视觉任务的全能骨干:HRNet

加入极市专业CV交流群,与 10000+来自港科大、北大、清华、中科院、CMU、腾讯、百度 等名校名企视觉开发者互动交流!

同时提供每月大咖直播分享、真实项目需求对接、干货资讯汇总,行业技术交流。关注 极市平台 公众号 ,回复 加群,立刻申请入群~

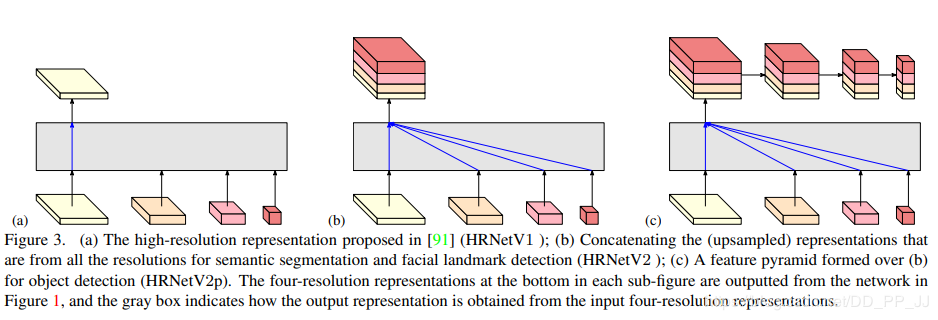

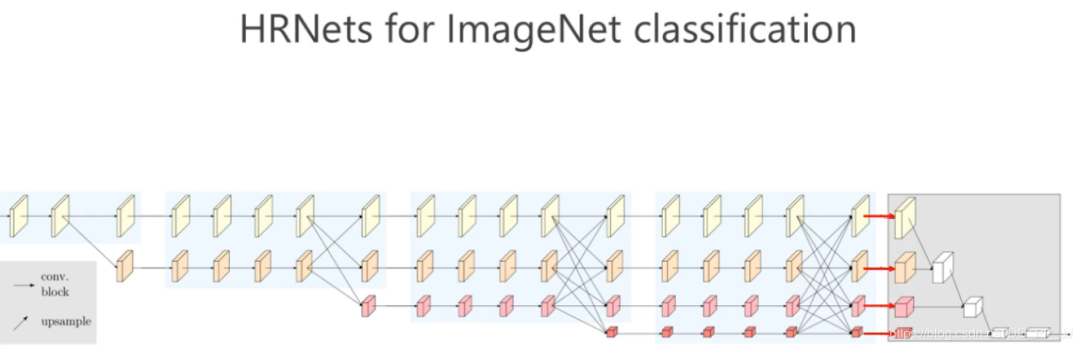

HRNet是微软亚洲研究院的王井东老师领导的团队完成的,打通图像分类、图像分割、目标检测、人脸对齐、姿态识别、风格迁移、Image Inpainting、超分、optical flow、Depth estimation、边缘检测等网络结构。

王老师在ValseWebinar《物体和关键点检测》中亲自讲解了HRNet,讲解地非常透彻。以下文章主要参考了王老师在演讲中的解读,配合论文+代码部分,来为各位读者介绍这个全能的Backbone-HRNet。

王老师演讲视频地址:

https://www.bilibili.com/video/BV1WJ41197dh/

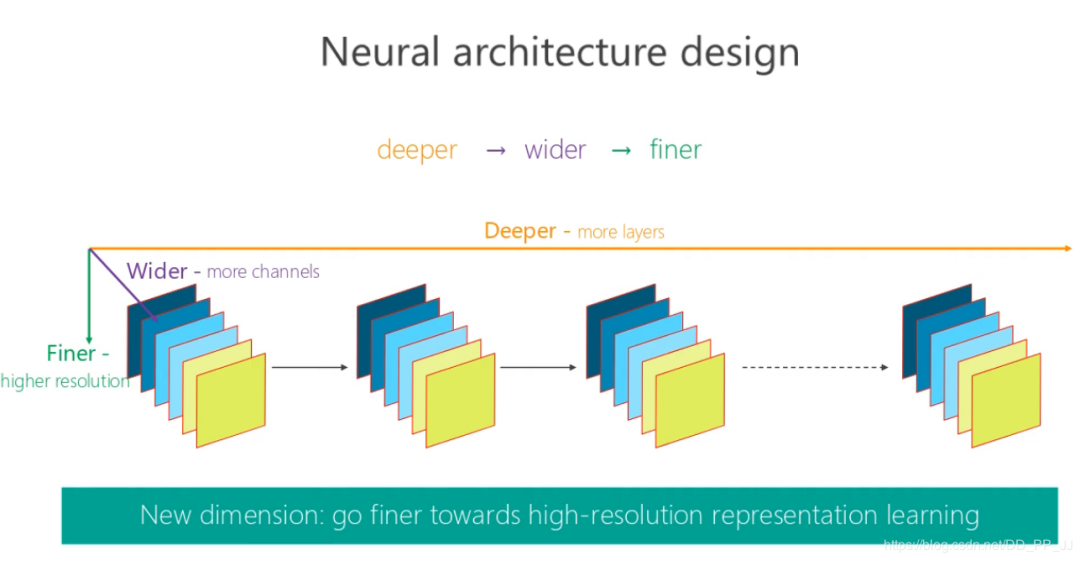

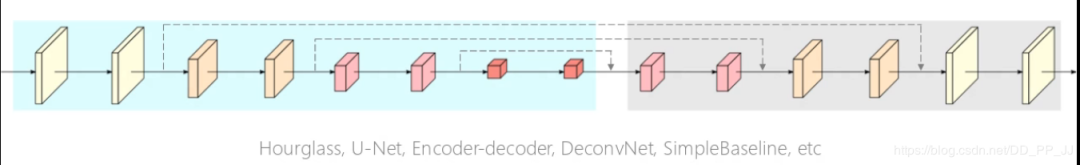

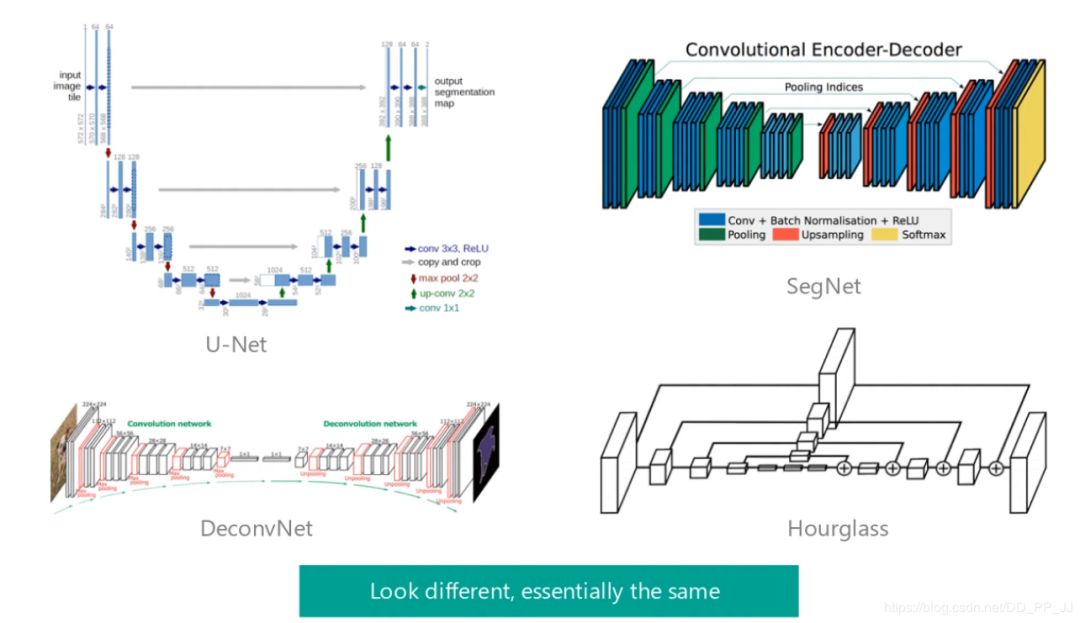

1. 引入

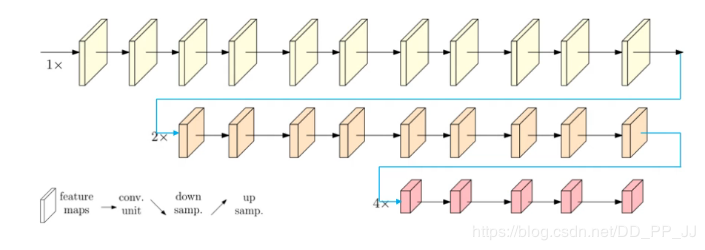

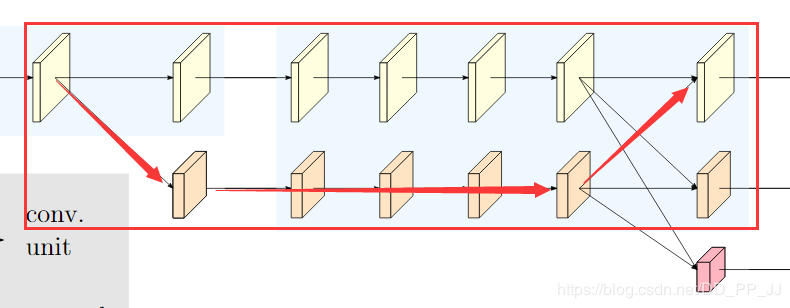

2. 核心

-

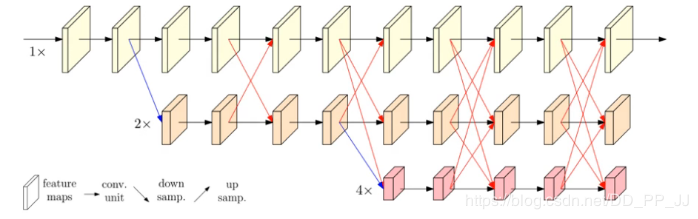

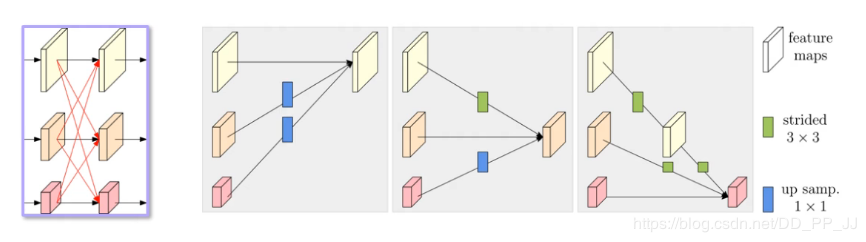

同分辨率的层直接复制。 -

需要升分辨率的使用bilinear upsample + 1x1卷积将channel数统一。 -

需要降分辨率的使用strided 3x3 卷积。 -

三个feature map融合的方式是相加。

至于为何要用strided 3x3卷积,这是因为卷积在降维的时候会出现信息损失,使用strided 3x3卷积是为了通过学习的方式,降低信息的损耗。所以这里没有用maxpool或者组合池化。

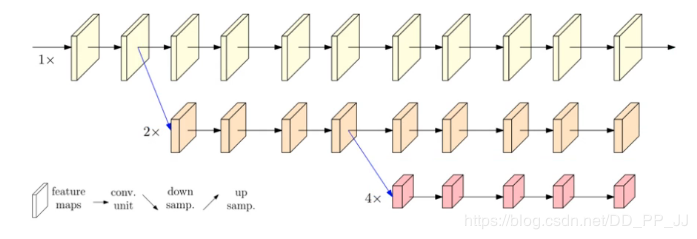

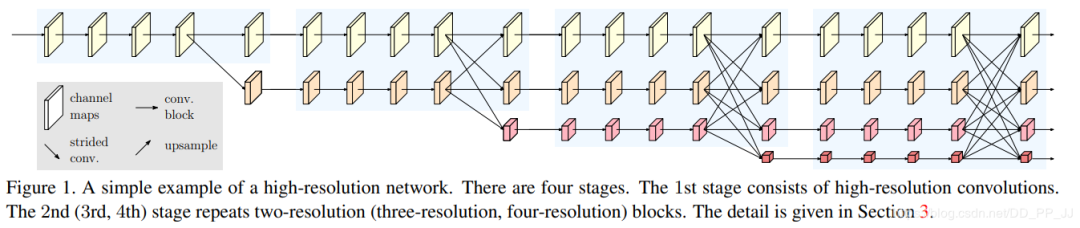

-

将高低分辨率之间的链接由串联改为并联。 -

在整个网络结构中都保持了高分辨率的表征(最上边那个通路)。 -

在高低分辨率中引入了交互来提高模型性能。

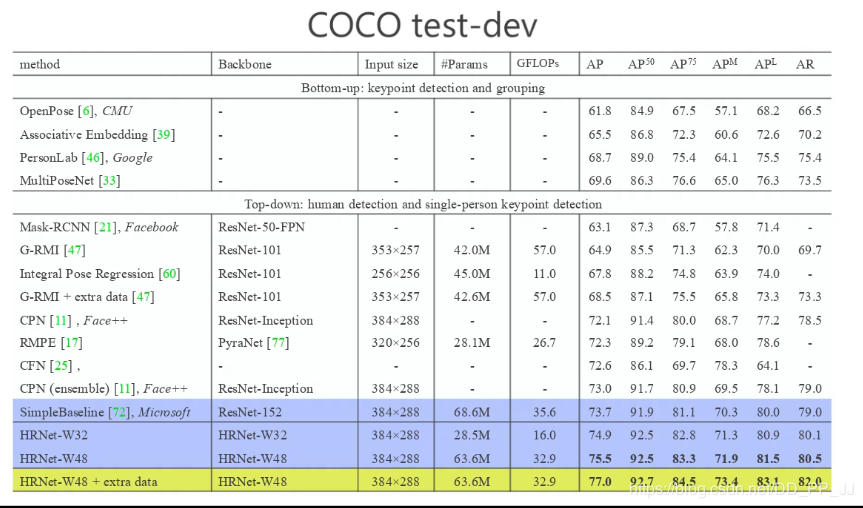

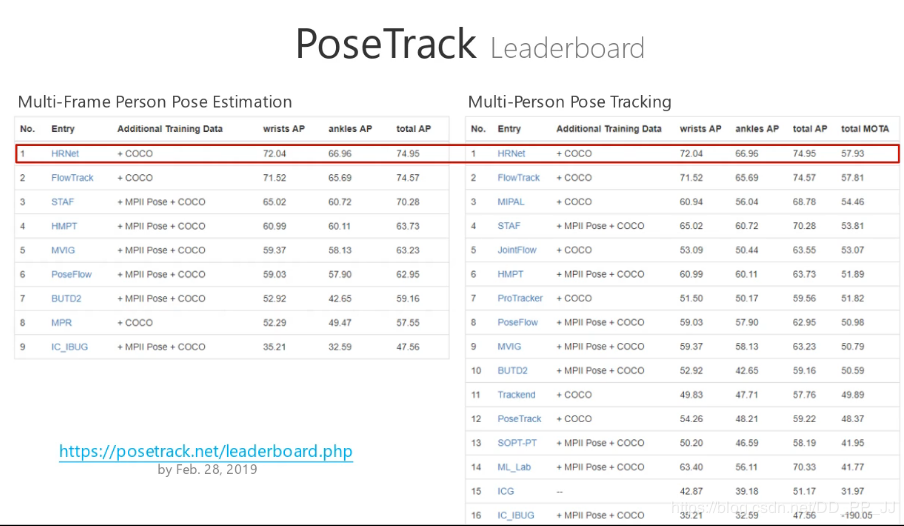

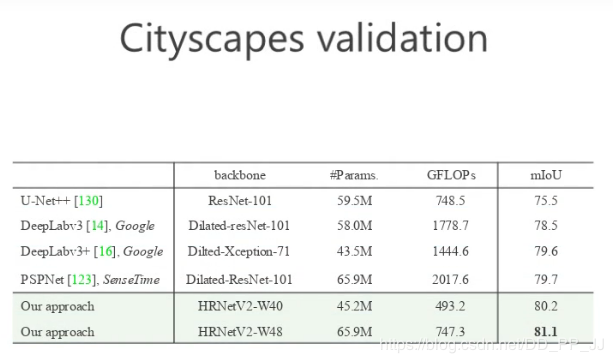

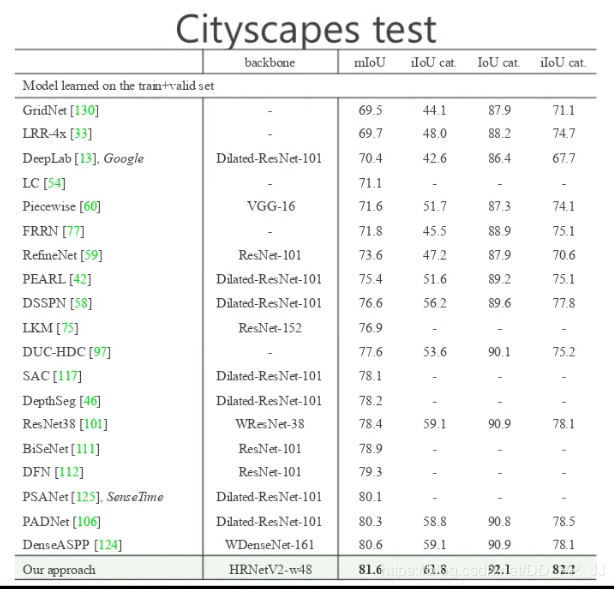

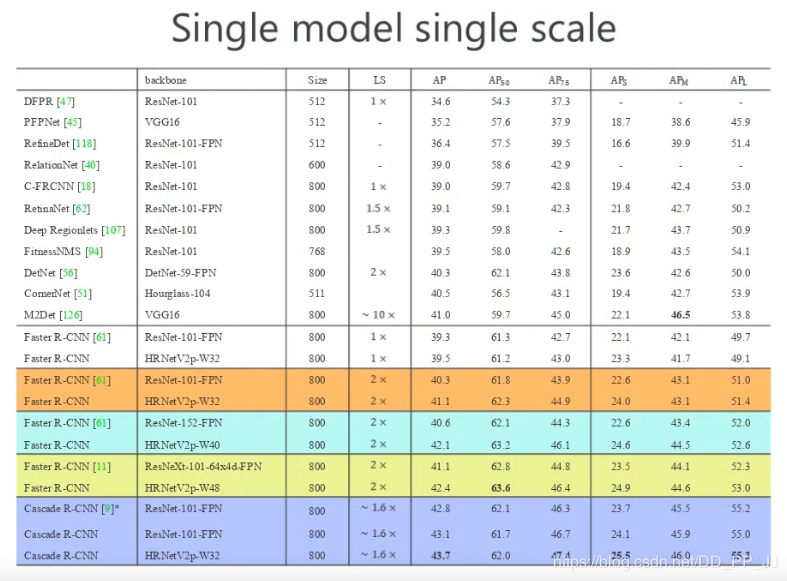

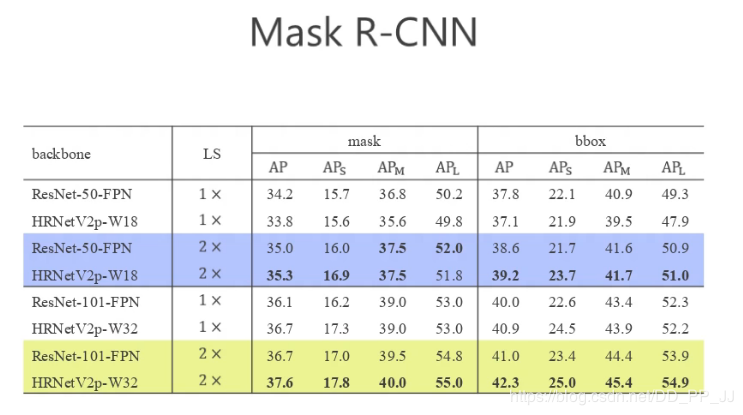

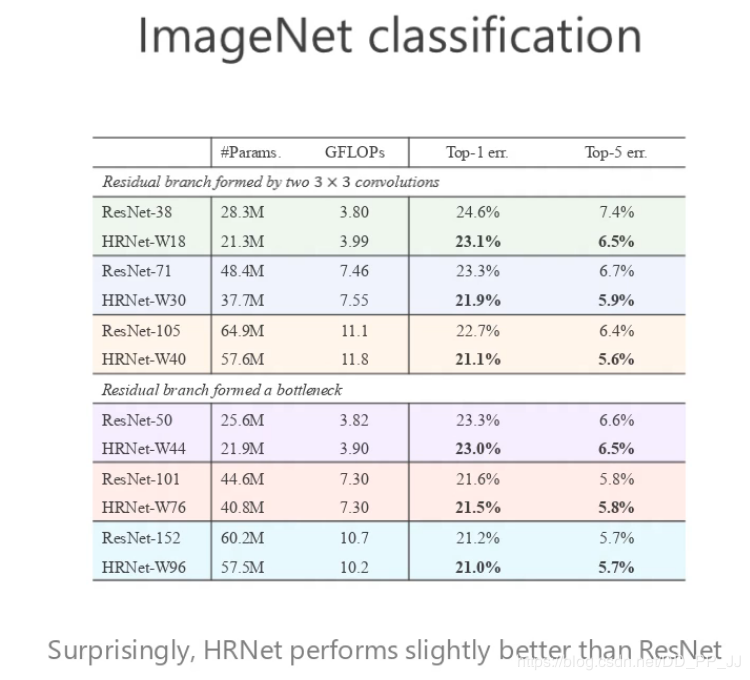

3. 效果

-

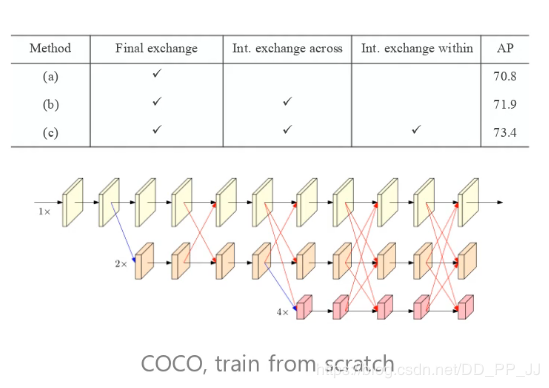

对交互方法进行消融实验,证明了当前跨分辨率的融合的有效性。

-

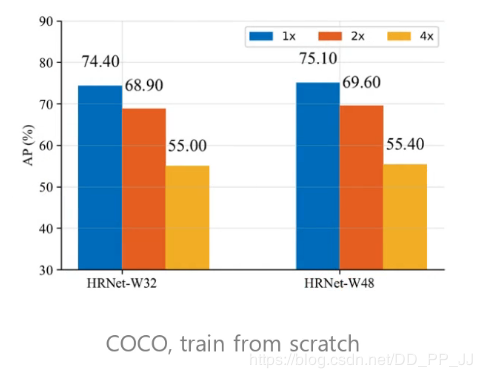

证明高分辨率feature map的表征能力

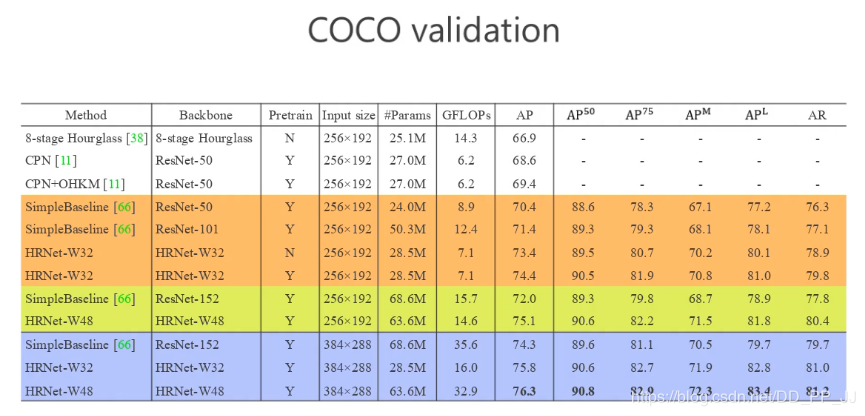

ps: 王井东老师在这部分提到,分割的网络也需要使用分类的预训练模型,否则结果会差几个点。

4.代码

def conv3x3(in_planes, out_planes, stride=1):

"""3x3 convolution with padding"""

return nn.Conv2d(in_planes, out_planes, kernel_size=3, stride=stride,

padding=1, bias=False)

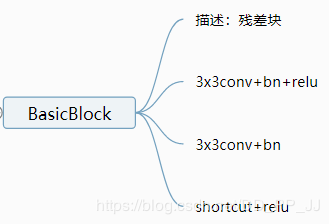

class BasicBlock(nn.Module):

expansion = 1

def __init__(self, inplanes, planes, stride=1, downsample=None):

super(BasicBlock, self).__init__()

self.conv1 = conv3x3(inplanes, planes, stride)

self.bn1 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)

self.relu = nn.ReLU(inplace=True)

self.conv2 = conv3x3(planes, planes)

self.bn2 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)

self.downsample = downsample

self.stride = stride

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

if self.downsample is not None:

residual = self.downsample(x)

out += residual

out = self.relu(out)

return out

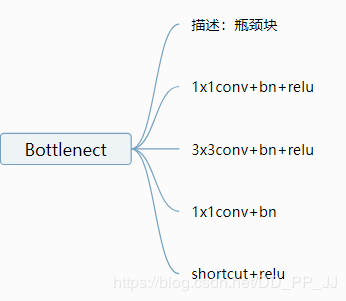

class Bottleneck(nn.Module):

expansion = 4

def __init__(self, inplanes, planes, stride=1, downsample=None):

super(Bottleneck, self).__init__()

self.conv1 = nn.Conv2d(inplanes, planes, kernel_size=1, bias=False)

self.bn1 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)

self.conv2 = nn.Conv2d(planes, planes, kernel_size=3, stride=stride,

padding=1, bias=False)

self.bn2 = nn.BatchNorm2d(planes, momentum=BN_MOMENTUM)

self.conv3 = nn.Conv2d(planes, planes * self.expansion, kernel_size=1,

bias=False)

self.bn3 = nn.BatchNorm2d(planes * self.expansion,

momentum=BN_MOMENTUM)

self.relu = nn.ReLU(inplace=True)

self.downsample = downsample

self.stride = stride

def forward(self, x):

residual = x

out = self.conv1(x)

out = self.bn1(out)

out = self.relu(out)

out = self.conv2(out)

out = self.bn2(out)

out = self.relu(out)

out = self.conv3(out)

out = self.bn3(out)

if self.downsample is not None:

residual = self.downsample(x)

out += residual

out = self.relu(out)

return out

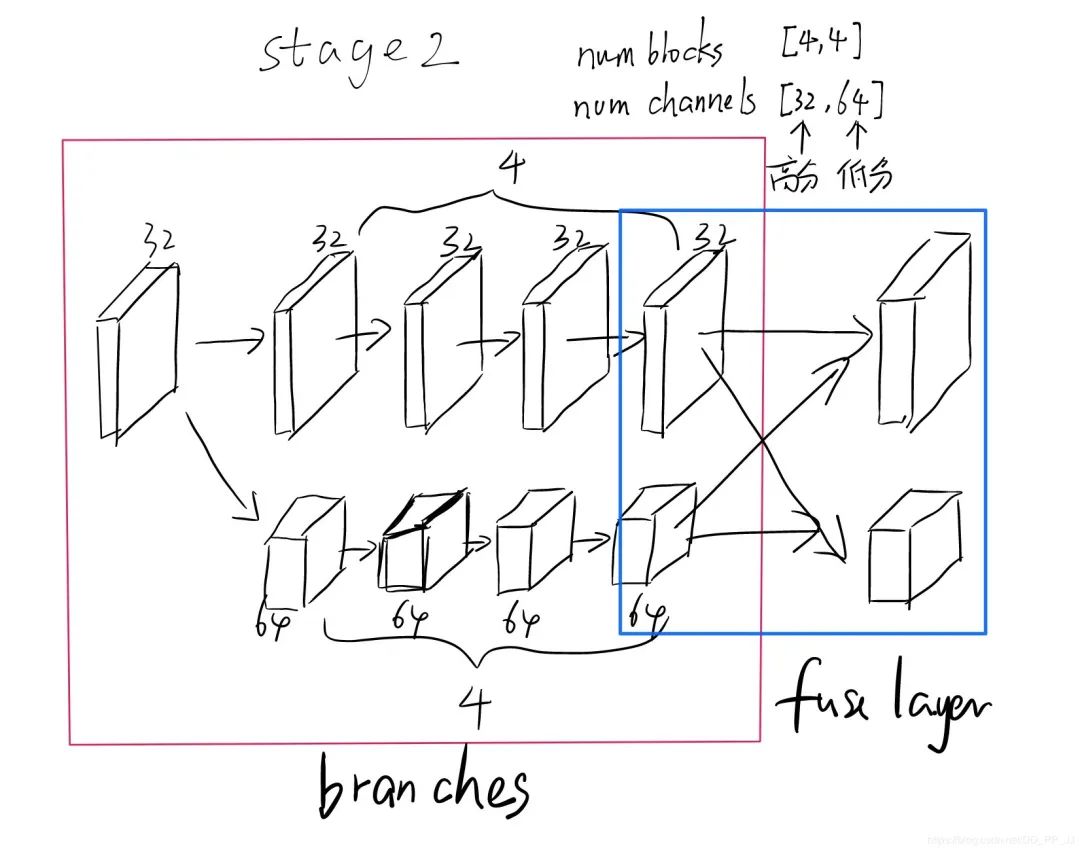

class HighResolutionModule(nn.Module):

def __init__(self, num_branches, blocks, num_blocks, num_inchannels,

num_channels, fuse_method, multi_scale_output=True):

'''

调用:

# 调用高低分辨率交互模块, stage2 为例

HighResolutionModule(num_branches, # 2

block, # 'BASIC'

num_blocks, # [4, 4]

num_inchannels, # 上个stage的out channel

num_channels, # [32, 64]

fuse_method, # SUM

reset_multi_scale_output)

'''

super(HighResolutionModule, self).__init__()

self._check_branches(

# 检查分支数目是否合理

num_branches, blocks, num_blocks, num_inchannels, num_channels)

self.num_inchannels = num_inchannels

# 融合选用相加的方式

self.fuse_method = fuse_method

self.num_branches = num_branches

self.multi_scale_output = multi_scale_output

# 两个核心部分,一个是branches构建,一个是融合layers构建

self.branches = self._make_branches(

num_branches, blocks, num_blocks, num_channels)

self.fuse_layers = self._make_fuse_layers()

self.relu = nn.ReLU(False)

def _check_branches(self, num_branches, blocks, num_blocks,

num_inchannels, num_channels):

# 分别检查参数是否符合要求,看models.py中的参数,blocks参数冗余了

if num_branches != len(num_blocks):

error_msg = 'NUM_BRANCHES({}) <> NUM_BLOCKS({})'.format(

num_branches, len(num_blocks))

logger.error(error_msg)

raise ValueError(error_msg)

if num_branches != len(num_channels):

error_msg = 'NUM_BRANCHES({}) <> NUM_CHANNELS({})'.format(

num_branches, len(num_channels))

logger.error(error_msg)

raise ValueError(error_msg)

if num_branches != len(num_inchannels):

error_msg = 'NUM_BRANCHES({}) <> NUM_INCHANNELS({})'.format(

num_branches, len(num_inchannels))

logger.error(error_msg)

raise ValueError(error_msg)

def _make_one_branch(self, branch_index, block, num_blocks, num_channels,

stride=1):

# 构建一个分支,一个分支重复num_blocks个block

downsample = None

# 这里判断,如果通道变大(分辨率变小),则使用下采样

if stride != 1 or \

self.num_inchannels[branch_index] != num_channels[branch_index] * block.expansion:

downsample = nn.Sequential(

nn.Conv2d(self.num_inchannels[branch_index],

num_channels[branch_index] * block.expansion,

kernel_size=1, stride=stride, bias=False),

nn.BatchNorm2d(num_channels[branch_index] * block.expansion,

momentum=BN_MOMENTUM),

)

layers = []

layers.append(block(self.num_inchannels[branch_index],

num_channels[branch_index], stride, downsample))

self.num_inchannels[branch_index] = \

num_channels[branch_index] * block.expansion

for i in range(1, num_blocks[branch_index]):

layers.append(block(self.num_inchannels[branch_index],

num_channels[branch_index]))

return nn.Sequential(*layers)

def _make_branches(self, num_branches, block, num_blocks, num_channels):

branches = []

# 通过循环构建多分支,每个分支属于不同的分辨率

for i in range(num_branches):

branches.append(

self._make_one_branch(i, block, num_blocks, num_channels))

return nn.ModuleList(branches)

def _make_fuse_layers(self):

if self.num_branches == 1:

return None

num_branches = self.num_branches # 2

num_inchannels = self.num_inchannels

fuse_layers = []

for i in range(num_branches if self.multi_scale_output else 1):

# i代表枚举所有分支

fuse_layer = []

for j in range(num_branches):

# j代表处理的当前分支

if j > i: # 进行上采样,使用最近邻插值

fuse_layer.append(nn.Sequential(

nn.Conv2d(num_inchannels[j],

num_inchannels[i],

1,

1,

0,

bias=False),

nn.BatchNorm2d(num_inchannels[i],

momentum=BN_MOMENTUM),

nn.Upsample(scale_factor=2**(j-i), mode='nearest')))

elif j == i:

# 本层不做处理

fuse_layer.append(None)

else:

conv3x3s = []

# 进行strided 3x3 conv下采样,如果跨两层,就使用两次strided 3x3 conv

for k in range(i-j):

if k == i - j - 1:

num_outchannels_conv3x3 = num_inchannels[i]

conv3x3s.append(nn.Sequential(

nn.Conv2d(num_inchannels[j],

num_outchannels_conv3x3,

3, 2, 1, bias=False),

nn.BatchNorm2d(num_outchannels_conv3x3,

momentum=BN_MOMENTUM)))

else:

num_outchannels_conv3x3 = num_inchannels[j]

conv3x3s.append(nn.Sequential(

nn.Conv2d(num_inchannels[j],

num_outchannels_conv3x3,

3, 2, 1, bias=False),

nn.BatchNorm2d(num_outchannels_conv3x3,

nn.ReLU(False)))

fuse_layer.append(nn.Sequential(*conv3x3s))

fuse_layers.append(nn.ModuleList(fuse_layer))

return nn.ModuleList(fuse_layers)

def get_num_inchannels(self):

return self.num_inchannels

def forward(self, x):

if self.num_branches == 1:

return [self.branches[0](x[0])]

for i in range(self.num_branches):

x[i]=self.branches[i](x[i])

x_fuse=[]

for i in range(len(self.fuse_layers)):

y=x[0] if i == 0 else self.fuse_layers[i][0](x[0])

for j in range(1, self.num_branches):

if i == j:

y=y + x[j]

else:

y=y + self.fuse_layers[i][j](x[j])

x_fuse.append(self.relu(y))

# 将fuse以后的多个分支结果保存到list中

return x_fuse

# high_resoluton_net related params for classification

POSE_HIGH_RESOLUTION_NET = CN()

POSE_HIGH_RESOLUTION_NET.PRETRAINED_LAYERS = ['*']

POSE_HIGH_RESOLUTION_NET.STEM_INPLANES = 64

POSE_HIGH_RESOLUTION_NET.FINAL_CONV_KERNEL = 1

POSE_HIGH_RESOLUTION_NET.WITH_HEAD = True

POSE_HIGH_RESOLUTION_NET.STAGE2 = CN()

POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_MODULES = 1

POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_BRANCHES = 2

POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_BLOCKS = [4, 4]

POSE_HIGH_RESOLUTION_NET.STAGE2.NUM_CHANNELS = [32, 64]

POSE_HIGH_RESOLUTION_NET.STAGE2.BLOCK = 'BASIC'

POSE_HIGH_RESOLUTION_NET.STAGE2.FUSE_METHOD = 'SUM'

POSE_HIGH_RESOLUTION_NET.STAGE3 = CN()

POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_MODULES = 1

POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_BRANCHES = 3

POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_BLOCKS = [4, 4, 4]

POSE_HIGH_RESOLUTION_NET.STAGE3.NUM_CHANNELS = [32, 64, 128]

POSE_HIGH_RESOLUTION_NET.STAGE3.BLOCK = 'BASIC'

POSE_HIGH_RESOLUTION_NET.STAGE3.FUSE_METHOD = 'SUM'

POSE_HIGH_RESOLUTION_NET.STAGE4 = CN()

POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_MODULES = 1

POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_BRANCHES = 4

POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_BLOCKS = [4, 4, 4, 4]

POSE_HIGH_RESOLUTION_NET.STAGE4.NUM_CHANNELS = [32, 64, 128, 256]

POSE_HIGH_RESOLUTION_NET.STAGE4.BLOCK = 'BASIC'

POSE_HIGH_RESOLUTION_NET.STAGE4.FUSE_METHOD = 'SUM'

def forward(self, x):

# 使用两个strided 3x3conv进行快速降维

x=self.relu(self.bn1(self.conv1(x)))

x=self.relu(self.bn2(self.conv2(x)))

# 构建了一串BasicBlock构成的模块

x=self.layer1(x)

# 然后是多个stage,每个stage核心是调用HighResolutionModule模块

x_list=[]

for i in range(self.stage2_cfg['NUM_BRANCHES']):

if self.transition1[i] is not None:

x_list.append(self.transition1[i](x))

else:

x_list.append(x)

y_list=self.stage2(x_list)

x_list=[]

for i in range(self.stage3_cfg['NUM_BRANCHES']):

if self.transition2[i] is not None:

x_list.append(self.transition2[i](y_list[-1]))

else:

x_list.append(y_list[i])

y_list=self.stage3(x_list)

x_list=[]

for i in range(self.stage4_cfg['NUM_BRANCHES']):

if self.transition3[i] is not None:

x_list.append(self.transition3[i](y_list[-1]))

else:

x_list.append(y_list[i])

y_list=self.stage4(x_list)

# 添加分类头,上文中有显示,在分类问题中添加这种头

# 在其他问题中换用不同的头

y=self.incre_modules[0](y_list[0])

for i in range(len(self.downsamp_modules)):

y=self.incre_modules[i+1](y_list[i+1]) + \

self.downsamp_modules[i](y)

y=self.final_layer(y)

if torch._C._get_tracing_state():

# 在不写C代码的情况下执行forward,直接用python版本

y=y.flatten(start_dim=2).mean(dim=2)

else:

y=F.avg_pool2d(y, kernel_size=y.size()

[2:]).view(y.size(0), -1)

y=self.classifier(y)

return y

5. 总结

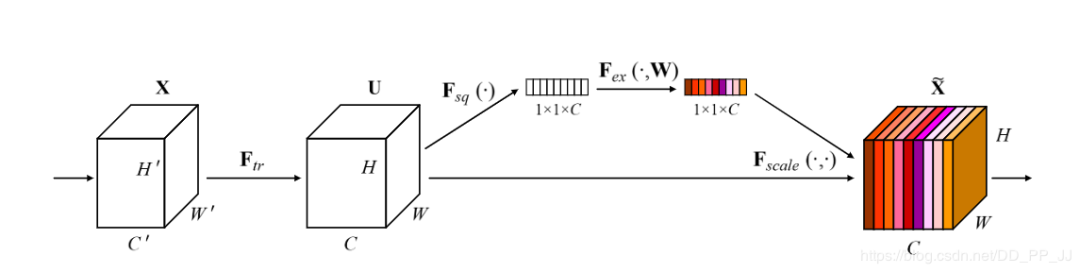

senet是hrnet的一个特例,hrnet不仅有通道注意力,同时也有空间注意力 -- akkaze-郑安坤

-

SENet没有像HRNet这样分辨率变为原来的一半,分辨率直接变为1x1,比较极端。变为1x1向量以后,SENet中使用了两个全连接网络来学习通道的特征分布;但是在HRNet中,使用了几个卷积(Residual block)来学习特征。 -

SENet在主干部分(高分辨率分支)没有安排卷积进行特征的学习;HRNet在主干部分(高分辨率分支)安排了几个卷积(Residual block)来学习特征。 -

特征融合部分SENet和HRNet区分比较大,SENet使用的对应通道相乘的方法,HRNet则使用的是相加。之所以说SENet是通道注意力机制是因为通过全局平均池化后没有了空间特征,只剩通道的特征;HRNet则可以看作同时保留了空间特征和通道特征,所以说HRNet不仅有通道注意力,同时也有空间注意力。

推荐阅读:

△长按添加极市小助手

△长按关注极市平台,获取最新CV干货

觉得有用麻烦给个在看啦~

登录查看更多

相关内容

Arxiv

11+阅读 · 2019年4月1日

Arxiv

4+阅读 · 2019年1月4日

Arxiv

10+阅读 · 2018年12月4日

Arxiv

6+阅读 · 2018年1月11日