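[Paopao One Minute] DualNet: Learn Complementary Features for Image Recognition (ICCV2017-51)

One minute a day to take you through papers from the top robotics conferences.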

Title: DualNet: Learn Complementary Features for Image Recognition

Authors: Saihui Hou, Xu Liu and Zilei Wang

Source: International Conference on Computer Vision (ICCV 2017)

Narrator: Wanzi (丸子)

Compiled by: Zhang Lu (张鲁), Zhou Ping (周平) (53)

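Individuals are welcome to share this post on WeChat Moments. Other organizations or media accounts that wish to repost it should leave a message in the account backend to request authorization.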

Summary

Hello everyone. Today's paper is "DualNet: Learn Complementary Features for Image Recognition", published at ICCV 2017.

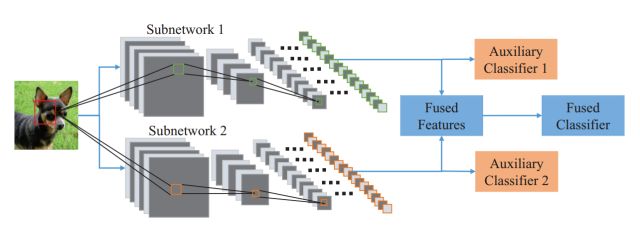

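In this work, we propose a new framework named DualNet that aims to learn more accurate representations for image recognition. Two parallel neural networks are coordinated to learn complementary features, which yields a wider network. Specifically, we logically divide an end-to-end deep convolutional neural network into two functional parts: a feature extractor and an image classifier.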

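The feature extractor of DualNet consists of the extractors of the two subnetworks placed side by side. The two streams of features are then aggregated and fed to a final classifier for overall classification. In addition, two auxiliary classifiers are attached, one behind the feature extractor of each subnetwork, so that the separately learned features remain discriminative on their own. The complementary constraint is imposed by weighting the three classifiers, which is the key to DualNet.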
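As a rough illustration of the weighted three-classifier objective described above, the sketch below computes a combined loss for a single sample in plain Python. The element-wise sum used for fusion, the linear classifiers, the function names and the default weights `(1.0, 0.3, 0.3)` are all assumptions made for illustration. This summary does not specify them, and the code is not the authors' implementation.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(logits, label):
    """Cross-entropy loss of one sample given its true class index."""
    return -math.log(softmax(logits)[label])


def linear(weights, features):
    """Bias-free linear classifier: one weight row per class."""
    return [sum(w * f for w, f in zip(row, features)) for row in weights]


def dualnet_loss(feat1, feat2, w_fused, w_aux1, w_aux2, label,
                 lambdas=(1.0, 0.3, 0.3)):
    """Weighted sum of the fused loss and the two auxiliary losses.

    feat1 / feat2: features from the two extractors (same length).
    lambdas: hypothetical weights for (fused, auxiliary 1, auxiliary 2).
    """
    # Assumption: the two feature streams are fused by element-wise sum.
    fused = [a + b for a, b in zip(feat1, feat2)]

    loss_fused = cross_entropy(linear(w_fused, fused), label)
    loss_aux1 = cross_entropy(linear(w_aux1, feat1), label)
    loss_aux2 = cross_entropy(linear(w_aux2, feat2), label)

    lam_f, lam_1, lam_2 = lambdas
    total = lam_f * loss_fused + lam_1 * loss_aux1 + lam_2 * loss_aux2
    return total, (loss_fused, loss_aux1, loss_aux2)


# Toy usage: 2-dimensional features, 3 classes, made-up weights.
w = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
total, parts = dualnet_loss([1.0, 2.0], [0.5, -1.0], w, w, w, label=1)
print(total, parts)
```

Changing `lambdas` shifts the balance between the fused prediction and the two standalone predictions, which is where the weighting described in the text would act.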

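We also propose a corresponding training strategy, consisting of iterative training and joint finetuning, so that the two subnetworks cooperate well. Finally, we build DualNet on the well-known CaffeNet, VGGNet, NIN and ResNet, and thoroughly investigate and experimentally evaluate it on multiple datasets, including CIFAR-100, Stanford Dogs and UEC FOOD-100. The results show that DualNet does learn more accurate image representations and therefore achieves higher recognition accuracy. In particular, its performance on CIFAR-100 is state-of-the-art compared with recent work.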
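The two-stage training strategy mentioned above can be pictured as a simple schedule. The sketch below only builds a plan of phases and does no actual training. It assumes that "iterative training" alternates between the two extractors, updating one while the other is frozen. Which layers are updated during joint finetuning is not stated in this summary, so it is left as a parameter with a hypothetical default. All names and iteration counts are illustrative.

```python
def training_schedule(num_rounds, iters_per_phase, finetune_iters,
                      joint_trainable=("classifiers",)):
    """Return a list of (phase, trainable_parts, iterations) tuples.

    Assumption: each iterative round updates one extractor at a time
    while the other stays frozen. joint_trainable is a hypothetical
    default, since the summary does not say which layers are finetuned.
    """
    plan = []
    for _ in range(num_rounds):
        # Update extractor 1 with extractor 2 frozen, then swap roles.
        plan.append(("iterative", ("extractor_1", "classifiers"), iters_per_phase))
        plan.append(("iterative", ("extractor_2", "classifiers"), iters_per_phase))
    # Final phase: finetune the chosen parts of the whole network together.
    plan.append(("joint_finetune", tuple(joint_trainable), finetune_iters))
    return plan


# Toy usage with made-up iteration counts.
for phase, parts, iters in training_schedule(2, 1000, 500):
    print(phase, parts, iters)
```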

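Figure 1. The overall framework of DualNet. An image is fed into two subnetworks that are coordinated to learn complementary features. The two streams of features are then fused into a unified representation and passed to the fused classifier for overall classification. The added auxiliary classifiers keep the features learned separately by the two subnetworks discriminative.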

Abstract

In this work we propose a novel framework named DualNet aiming at learning more accurate representation for image recognition. Here two parallel neural networks are coordinated to learn complementary features and thus a wider network is constructed. Specifically, we logically divide an end-to-end deep convolutional neural network into two functional parts, i.e., feature extractor and image classifier. The extractors of two subnetworks are placed side by side, which exactly form the feature extractor of DualNet. Then the two-stream features are aggregated to the final classifier for overall classification, while two auxiliary classifiers are appended behind the feature extractor of each subnetwork to make the separately learned features discriminative alone. The complementary constraint is imposed by weighting the three classifiers, which is indeed the key of DualNet. The corresponding training strategy is also proposed, consisting of iterative training and joint finetuning, to make the two subnetworks cooperate well with each other. Finally, DualNet based on the well-known CaffeNet, VGGNet, NIN and ResNet are thoroughly investigated and experimentally evaluated on multiple datasets including CIFAR-100, Stanford Dogs and UEC FOOD-100. The results demonstrate that DualNet can really help learn more accurate image representation, and thus result in higher accuracy for recognition. In particular, the performance on CIFAR-100 is state-of-the-art compared to the recent works.
