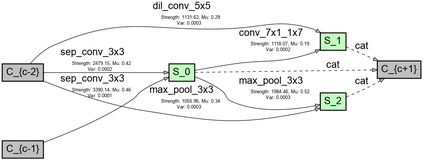

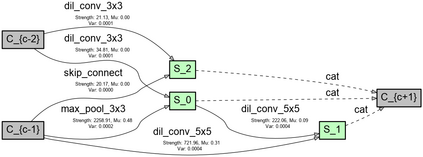

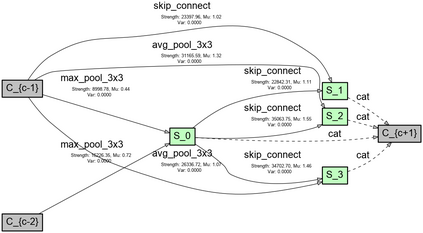

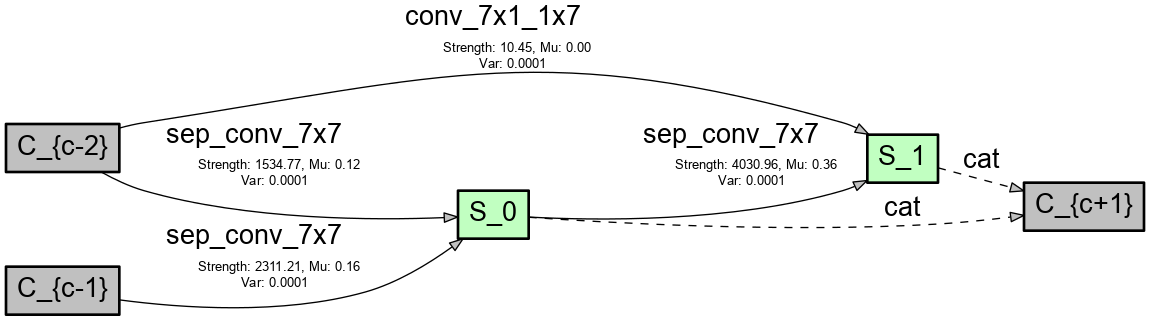

In recent years, neural architecture search (NAS) has attracted intensive scientific and industrial interest because it can find neural architectures with high accuracy for various artificial intelligence tasks, such as image classification or object detection. In particular, gradient-based NAS approaches have become among the more popular approaches thanks to their computational efficiency during the search. However, these methods often suffer from mode collapse, in which the found architectures are of poor quality because the algorithm either resorts to choosing a single operation type for the entire network or stagnates at a local minimum across various datasets or search spaces. To address these defects, we present a differentiable, variational inference-based NAS method for searching sparse convolutional neural networks. Our approach finds the optimal neural architecture by dropping out candidate operations in an over-parameterised supergraph using variational dropout with an automatic relevance determination (ARD) prior, which makes the algorithm gradually remove unnecessary operations and connections without risking mode collapse. The evaluation is conducted by searching for two types of convolutional cells that shape the neural network for classifying different image datasets. Our method finds diverse network cells while achieving state-of-the-art accuracy with up to $3 \times$ fewer parameters.
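The abstract does not spell out the exact parameterization, so the following is only a minimal sketch of the general idea, under stated assumptions. It assumes one scalar gate weight `theta` per candidate operation on an edge, multiplicative Gaussian noise `w = theta * (1 + sqrt(alpha) * eps)`, and an ARD prior whose variance is set to its optimum, for which the per-weight KL term reduces to `0.5 * log(1 + 1/alpha)`. The function names, operation names, and the pruning threshold `log_alpha < 3` are hypothetical choices for illustration, not the authors' implementation.

```python
import math
import random

def ard_kl(log_alpha: float) -> float:
    """KL term for one gate under an ARD prior with optimal variance:
    0.5 * log(1 + 1/alpha), computed as a numerically stable softplus."""
    x = -log_alpha  # log(1/alpha)
    return 0.5 * (max(x, 0.0) + math.log1p(math.exp(-abs(x))))

def sample_gate(theta: float, log_alpha: float, rng: random.Random) -> float:
    """Draw a noisy gate w = theta * (1 + sqrt(alpha) * eps), eps ~ N(0, 1)."""
    eps = rng.gauss(0.0, 1.0)
    return theta * (1.0 + math.exp(0.5 * log_alpha) * eps)

def mixed_edge_output(op_outputs, thetas, log_alphas, rng):
    """Training-time output of one supergraph edge: noisy weighted sum of
    the candidate operations' outputs (scalars here for simplicity)."""
    return sum(
        sample_gate(t, la, rng) * o
        for o, t, la in zip(op_outputs, thetas, log_alphas)
    )

def surviving_ops(names, log_alphas, threshold=3.0):
    """Keep operations whose inferred dropout rate stays low (hypothetical
    threshold on log alpha); the rest are treated as pruned."""
    return [n for n, la in zip(names, log_alphas) if la < threshold]

if __name__ == "__main__":
    names = ["sep_conv_3x3", "max_pool_3x3", "skip_connect"]
    log_alphas = [-2.0, 4.5, 1.0]
    print(surviving_ops(names, log_alphas))  # ['sep_conv_3x3', 'skip_connect']
    print(round(ard_kl(0.0), 4))             # 0.3466 (= 0.5 * ln 2)
```

In this sketch the KL term shrinks as `log_alpha` grows, so minimizing it alongside the data loss pushes uninformative gates toward large noise. Operations whose gates end up with large `log_alpha` contribute little signal and can be removed, which is the mechanism the abstract describes for gradually dropping operations and connections from the supergraph.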

翻译:近些年来,神经结构搜索(NAS)由于能够找到对各种人工智能任务(如图像分类或对象探测)具有高度精准度的神经结构,因此获得了大量的科学和工业兴趣。特别是,基于梯度的NAS方法由于在搜索过程中的计算效率而成为更受欢迎的方法之一。然而,这些方法往往会遇到模式崩溃,因为发现的结构的质量由于使用为整个网络选择单一操作类型的算法,或者由于对各种数据集或搜索空间在本地迷你模型中停滞不前,而发现神经结构的质量较差。为了解决这些缺陷,我们提出了一个基于不同变异的NAS方法,用于搜索稀有的卷发神经网络。我们的方法发现最佳的神经结构,通过使用具有自动相关性决定的变异性辍学超强测量方法将候选操作丢弃出去,从而使算法逐渐消除不必要的操作和连接,而不会出现模式崩溃的风险。评估是通过两种塑造神经网络对不同图像数据集进行分类的进化细胞来进行的。我们的方法在显示不同的网络的精确度上找到不同的网络细胞,同时显示不同的参数。