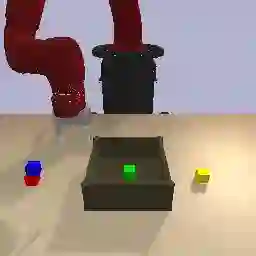

Can we use reinforcement learning to learn general-purpose policies that can perform a wide range of different tasks, resulting in flexible and reusable skills? Contextual policies provide this capability in principle, but the representation of the context determines the degree of generalization and expressivity. Categorical contexts preclude generalization to entirely new tasks. Goal-conditioned policies may enable some generalization, but cannot capture all tasks that might be desired. In this paper, we propose goal distributions as a general and broadly applicable task representation suitable for contextual policies. Goal distributions are general in the sense that they can represent any state-based reward function when equipped with an appropriate distribution class, while the particular choice of distribution class allows us to trade off expressivity and learnability. We develop an off-policy algorithm called distribution-conditioned reinforcement learning (DisCo RL) to efficiently learn these policies. We evaluate DisCo RL on a variety of robot manipulation tasks and find that it significantly outperforms prior methods on tasks that require generalization to new goal distributions.
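To make the idea of a goal distribution as a task representation concrete, here is a minimal sketch rather than the paper's implementation. It assumes a Gaussian distribution class and assumes the reward is the log-density of the current state under that distribution; the function name `gaussian_goal_log_reward` and the 2-D state are hypothetical and chosen only for illustration.

```python
import numpy as np

def gaussian_goal_log_reward(state, mean, cov):
    """Log-density of `state` under N(mean, cov), used here as a reward (assumption)."""
    state = np.asarray(state, dtype=float)
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    diff = state - mean
    k = mean.shape[0]
    # slogdet and solve avoid forming an explicit inverse of the covariance.
    _, logdet = np.linalg.slogdet(cov)
    maha = diff @ np.linalg.solve(cov, diff)
    return -0.5 * (k * np.log(2.0 * np.pi) + logdet + maha)

# Hypothetical 2-D state: (x, y) position of an object.
mean = np.array([1.0, 0.0])
# Small variance on x, large variance on y: "reach x = 1, y barely matters".
cov = np.diag([0.01, 100.0])

r_on_target = gaussian_goal_log_reward([1.0, 5.0], mean, cov)   # x matches, y is off
r_off_target = gaussian_goal_log_reward([0.0, 0.0], mean, cov)  # y matches, x is off
print(r_on_target > r_off_target)
```

In this sketch the covariance encodes which state dimensions the task cares about. Shrinking every variance toward zero approaches an ordinary single-goal task, which is one way to see goal-conditioned policies as a special case of distribution-conditioned ones.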
