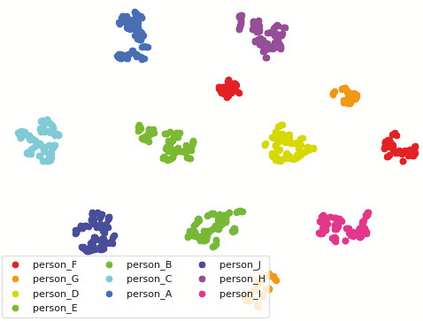

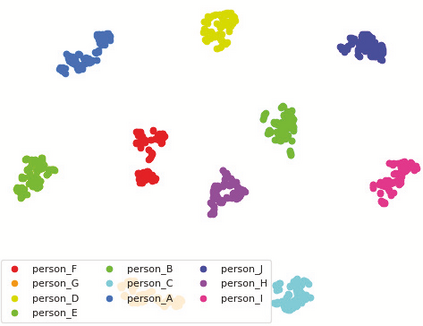

In real-world environments, person re-identification (Re-ID) is a challenging task because of variations in lighting conditions, viewing angles, pose, and occlusion. Despite recent performance gains, current person Re-ID algorithms still degrade heavily when they encounter these variations. To address this problem, we propose a semantic consistency and identity mapping multi-component generative adversarial network (SC-IMGAN), which provides style adaptation from one domain to many domains. To keep the transformed images as realistic as possible, we propose novel identity mapping and semantic consistency losses that maintain identity across the diverse domains. For the Re-ID task, we propose a joint verification-identification quartet network that is trained with both generated and real images, followed by an effective quartet loss for verification. Our proposed method outperforms state-of-the-art techniques on six challenging person Re-ID datasets: CUHK01, CUHK03, VIPeR, PRID2011, iLIDS, and Market-1501.
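The abstract does not give the form of the identity mapping loss, so the following is only a minimal sketch of the general idea, assuming the common L1 formulation used in image-to-image translation GANs: a generator that is handed an image already in its target domain should leave it unchanged. The names `identity_mapping_loss`, `identity_generator`, and `brighten_generator` are hypothetical, and flattened pixel lists stand in for real image tensors; this is not the SC-IMGAN implementation.

```python
from typing import Callable, List, Sequence

# Flattened pixel values in [0, 1]; a stand-in for a real image tensor.
Image = Sequence[float]


def l1_distance(a: Image, b: Image) -> float:
    """Mean absolute difference between two equally sized flattened images."""
    if len(a) != len(b):
        raise ValueError("images must have the same number of pixels")
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def identity_mapping_loss(generator: Callable[[Image], Image],
                          target_image: Image) -> float:
    """Assumed L1 identity loss: penalize the generator for altering an
    image that already belongs to its target domain."""
    return l1_distance(generator(target_image), target_image)


# Hypothetical toy generators, used only to exercise the loss.
def identity_generator(image: Image) -> List[float]:
    return list(image)  # leaves the image untouched


def brighten_generator(image: Image) -> List[float]:
    return [min(1.0, p + 0.25) for p in image]  # shifts every pixel


sample = [0.0, 0.25, 0.5, 0.75]
loss_identity = identity_mapping_loss(identity_generator, sample)
loss_brighten = identity_mapping_loss(brighten_generator, sample)
```

Under this assumption, a generator that preserves a target-domain image incurs zero loss, while one that changes its appearance is penalized in proportion to the average pixel change.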
