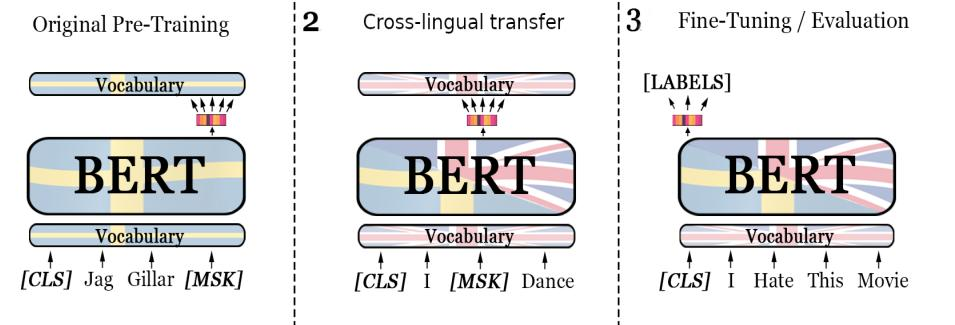

Recent studies in zero-shot cross-lingual learning with multilingual models have refuted the earlier hypothesis that a shared vocabulary and joint pre-training are the keys to cross-lingual generalization. Inspired by this finding, we introduce a cross-lingual transfer method for monolingual models based on domain adaptation. We study the effects of such transfer from four different languages to English. Our experimental results on GLUE show that the transferred models outperform the native English model regardless of the source language. After probing the English linguistic knowledge encoded in the representations before and after transfer, we find that semantic information is retained from the source language, while syntactic information is learned during transfer. Additionally, evaluating the transferred models on source-language tasks reveals that their performance in the source domain deteriorates after transfer.