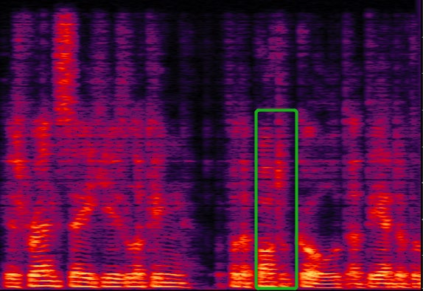

We propose a multi-channel speech enhancement approach that combines a novel two-stage feature fusion method with a pre-trained acoustic model in a multi-task learning paradigm. In the first fusion stage, time-domain and frequency-domain features are extracted separately. In the time domain, the multi-channel convolution sum (MCS) and inter-channel convolution differences (ICDs) features are computed and then integrated with a 2-D convolutional layer; in the frequency domain, the log-power spectra (LPS) features from both the original channels and the super-directive beamforming outputs are combined with another 2-D convolutional layer. To fully integrate the rich information in multi-channel speech, i.e., the time-frequency domain features and the array geometry, we apply a third 2-D convolutional layer in the second fusion stage to obtain the final convolutional features. Furthermore, we propose to use a fixed clean acoustic model, trained with the end-to-end lattice-free maximum mutual information criterion, to constrain the enhanced output to have the same distribution as the clean waveform, which alleviates the over-estimation problem of the enhancement task and limits distortion. On the Task1 development dataset of the ConferencingSpeech 2021 challenge, PESQ improvements of 0.24 and 0.19 are attained over the official baseline and a recently proposed multi-channel separation method, respectively.
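The two-stage fusion topology described above can be illustrated with a minimal pure-Python sketch. It is not the authors' implementation: the feature maps are random placeholders rather than real MCS, ICD, or LPS features, the kernels are random instead of learned, each fusion layer produces a single output map, and the time-domain and frequency-domain maps are assumed to share the same frames-by-bins shape so that they can be stacked in the second stage. The names `conv2d_same`, `two_stage_fusion`, and the channel counts are hypothetical.

```python
import random


def conv2d_same(maps, kernels, bias=0.0):
    """Fuse C input maps (each T x F) into one T x F map.

    Uses a zero-padded 2-D cross-correlation (the usual deep-learning
    "convolution"), with one odd-sized kernel per input map.
    """
    T, F = len(maps[0]), len(maps[0][0])
    kh, kw = len(kernels[0]), len(kernels[0][0])
    ph, pw = kh // 2, kw // 2
    out = [[bias] * F for _ in range(T)]
    for m, k in zip(maps, kernels):
        for t in range(T):
            for f in range(F):
                acc = 0.0
                for i in range(kh):
                    for j in range(kw):
                        tt, ff = t + i - ph, f + j - pw
                        if 0 <= tt < T and 0 <= ff < F:
                            acc += k[i][j] * m[tt][ff]
                out[t][f] += acc
    return out


def random_kernels(n_maps, rng, kh=3, kw=3):
    """Random stand-ins for learned kernels (assumption: 3 x 3)."""
    return [[[rng.uniform(-0.1, 0.1) for _ in range(kw)] for _ in range(kh)]
            for _ in range(n_maps)]


def random_map(T, F, rng):
    """Random stand-in for one T x F feature map."""
    return [[rng.gauss(0.0, 1.0) for _ in range(F)] for _ in range(T)]


def two_stage_fusion(time_maps, freq_maps, rng):
    # Stage 1a: fuse the time-domain maps (MCS + ICDs) with one 2-D conv.
    time_fused = conv2d_same(time_maps, random_kernels(len(time_maps), rng))
    # Stage 1b: fuse the frequency-domain maps (LPS of mics + beams).
    freq_fused = conv2d_same(freq_maps, random_kernels(len(freq_maps), rng))
    # Stage 2: a third 2-D conv fuses the two stage-1 outputs.
    return conv2d_same([time_fused, freq_fused], random_kernels(2, rng))


rng = random.Random(0)
T, F = 6, 8            # toy sizes: frames x feature bins
n_pairs = 3            # hypothetical number of channel pairs for ICDs
n_mics, n_beams = 4, 2  # hypothetical microphone and beam counts

time_maps = [random_map(T, F, rng) for _ in range(1 + n_pairs)]
freq_maps = [random_map(T, F, rng) for _ in range(n_mics + n_beams)]
fused = two_stage_fusion(time_maps, freq_maps, rng)
print(len(fused), len(fused[0]))  # prints "6 8"
```

A practical model would use learned kernels, several output channels per layer, and nonlinearities. The sketch only shows how the two first-stage outputs become the input channels of the second-stage convolution.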
