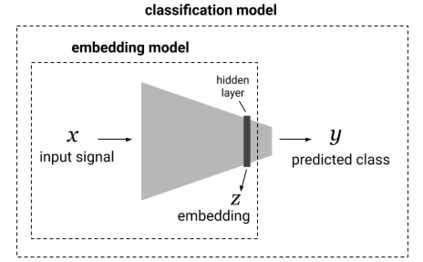

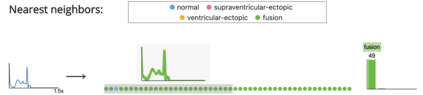

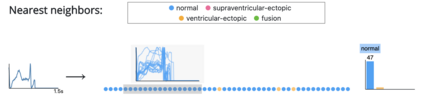

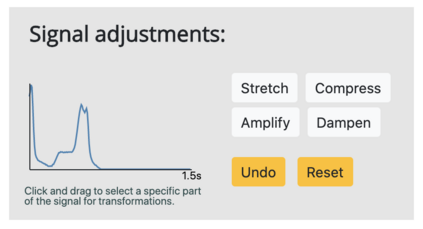

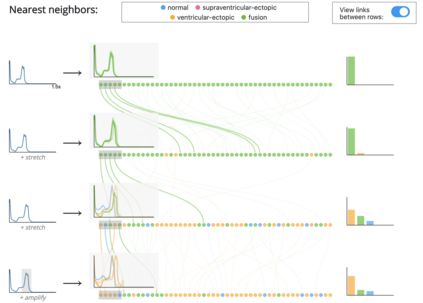

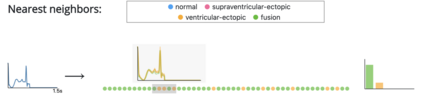

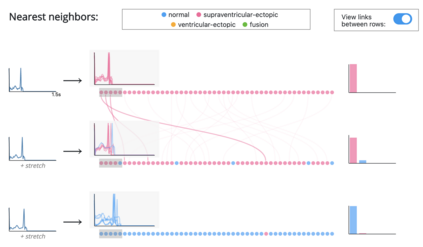

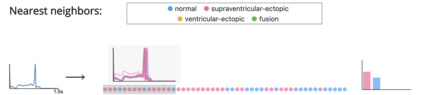

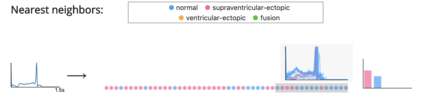

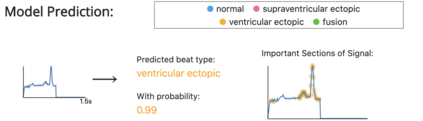

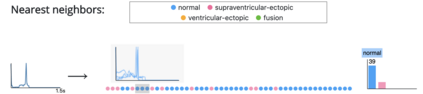

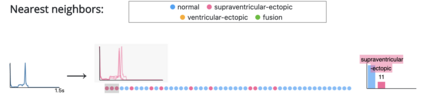

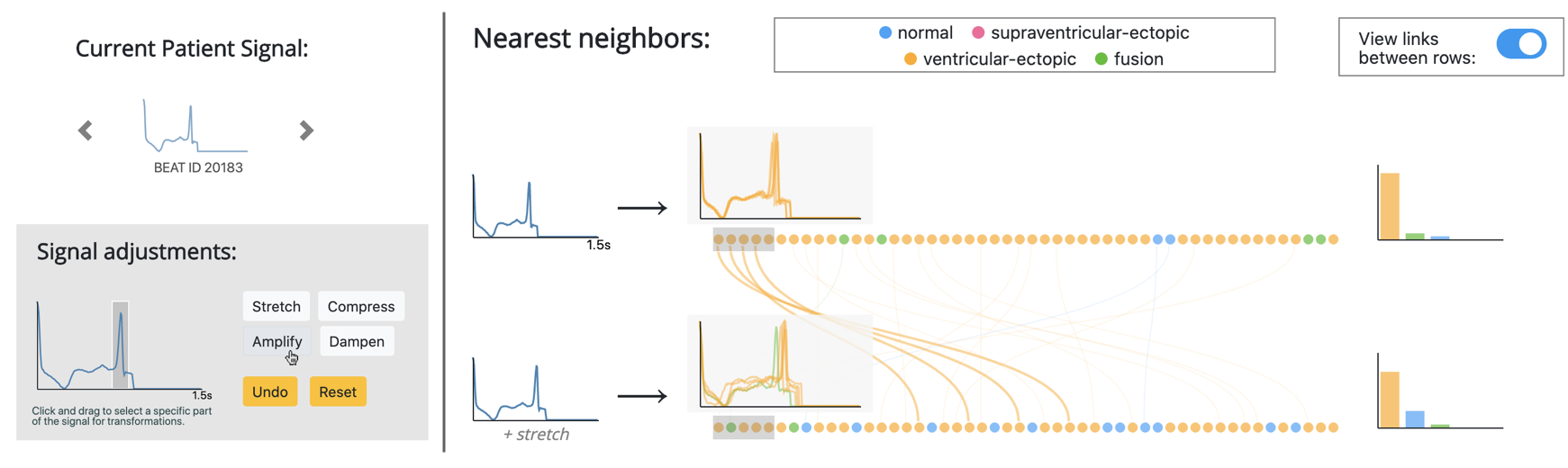

Interpretability methods aim to help users build trust in and understand the capabilities of machine learning models. However, existing approaches often rely on abstract, complex visualizations that map poorly to the task at hand or require non-trivial ML expertise to interpret. Here, we present two visual analytics modules that facilitate an intuitive assessment of model reliability. To help users better characterize and reason about a model's uncertainty, we visualize raw and aggregate information about a given input's nearest neighbors. Using an interactive editor, users can manipulate this input in semantically meaningful ways, determine the effect on the output, and compare it against their prior expectations. We evaluate our interface using an electrocardiogram beat classification case study. With 14 physicians as participants, we find that, compared with a baseline feature-importance interface, our interface better enables them to align the model's uncertainty with domain-relevant factors and to build intuition about its capabilities and limitations.
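
The abstract does not say how neighbors are found, what is aggregated, or which edits the editor supports. The following is only a minimal sketch under stated assumptions, not the authors' implementation. It assumes each input is a fixed-length vector (for instance, a resampled beat or an embedding of it), that neighbors are the k closest training examples by Euclidean distance, and that the "aggregate" view is the label distribution of those neighbors, with its entropy serving as a rough uncertainty signal. The names `summarize_neighbors`, `scale_window`, and `what_if` are hypothetical. Scaling the amplitude of a window of samples stands in for a semantically meaningful edit.

```python
import math
from collections import Counter
from dataclasses import dataclass


@dataclass
class NeighborSummary:
    indices: list[int]                 # raw: which training examples are closest
    distances: list[float]             # raw: how far each neighbor is from the input
    labels: list[str]                  # raw: ground-truth labels of the neighbors
    label_fractions: dict[str, float]  # aggregate: share of neighbors per label
    entropy: float                     # aggregate: label spread in bits (0 = all agree)


def summarize_neighbors(query, train_x, train_y, k=5):
    """Hypothetical helper: raw and aggregate facts about the k nearest neighbors."""
    if not 1 <= k <= len(train_x):
        raise ValueError("k must be between 1 and the number of training examples")
    # Assumption: Euclidean distance in the input (or embedding) space.
    dists = [math.dist(query, x) for x in train_x]
    # sorted() is stable, so ties keep their training-set order.
    ranked = sorted(range(len(train_x)), key=lambda i: dists[i])[:k]
    labels = [train_y[i] for i in ranked]
    fractions = {label: n / k for label, n in Counter(labels).items()}
    entropy = -sum(p * math.log2(p) for p in fractions.values())
    return NeighborSummary(
        indices=ranked,
        distances=[dists[i] for i in ranked],
        labels=labels,
        label_fractions=fractions,
        entropy=entropy,
    )


def scale_window(signal, start, end, factor):
    """Hypothetical edit: multiply samples in [start, end) by factor.

    Returns a new list and leaves the original input unchanged.
    """
    return [x * factor if start <= i < end else x for i, x in enumerate(signal)]


def what_if(query, edited, train_x, train_y, k=5):
    """Neighbor summaries before and after an edit, for side-by-side comparison."""
    before = summarize_neighbors(query, train_x, train_y, k)
    after = summarize_neighbors(edited, train_x, train_y, k)
    return before, after
```

Comparing the two summaries returned by `what_if` shows whether an edit moves the input toward neighbors with a different label, and whether the neighbor labels become more or less mixed. A user can then check that shift against what they expected the edit to do.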

翻译:解释方法旨在帮助用户建立对机器学习模型能力的信任和理解。 但是,现有方法往往依赖于抽象、复杂的可视化,对手头的任务映射不善,或需要非三角 ML 专门知识来解释。 这里, 我们展示了两个视觉分析模块, 便于对模型可靠性进行直观评估。 为了帮助用户更好地描述模型不确定性和解释模型不确定性, 我们设想了特定输入的近邻的原始和汇总信息。 使用互动编辑, 用户可以用语义上有意义的方式操作该输入, 确定对输出的影响, 并对照他们先前的期望进行比较。 我们用电心图比分级案例研究来评估我们的界面。 与基线特征重要性界面相比, 我们发现14名医生更有能力将模型的不确定性与与域相关因素相匹配, 并且建立有关其能力和局限性的直觉。