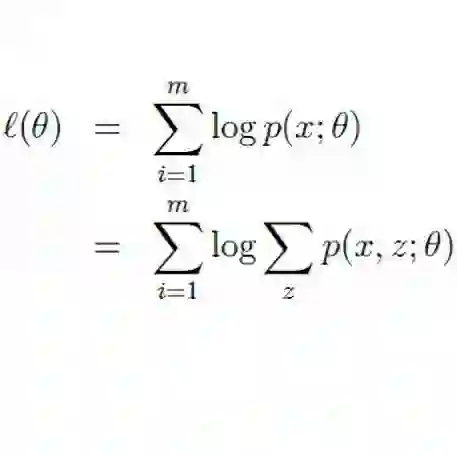

The expectation-maximization (EM) algorithm is a widely used iterative algorithm for computing a (local) maximum likelihood estimate (MLE). It applies to an extensive range of problems, including clustering data with the Gaussian mixture model (GMM). Numerical instability and convergence problems may arise when the sample size is not much larger than the data dimensionality. In such low sample support (LSS) settings, the covariance matrix update in the EM-GMM algorithm may become singular or poorly conditioned, causing the algorithm to crash. On the other hand, in many signal processing problems, a priori information is available that indicates particular structures for the covariance matrices of the different clusters. In this paper, we present a regularized EM algorithm for GMMs that makes efficient use of such prior knowledge and copes with LSS situations. The method aims to maximize a penalized GMM likelihood, where regularized estimation may be used to ensure positive definiteness of the covariance matrix updates and to shrink the estimators towards structured target covariance matrices. We show that the theoretical guarantees of convergence hold, leading to a better-performing EM algorithm for structured covariance matrix models or in low-sample settings.
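To make the LSS failure mode concrete, the following is a minimal sketch of a regularized M-step. It is not the penalized estimator of the paper, whose exact form the abstract does not give. As a stand-in it assumes a plain linear shrinkage of each responsibility-weighted covariance towards a target matrix. The function names, the shrinkage weight `alpha`, and the identity targets are all hypothetical choices made for illustration.

```python
import numpy as np


def shrunk_covariance(X, resp, mean, target, alpha):
    """Responsibility-weighted covariance, linearly shrunk toward `target`.

    `alpha` in [0, 1] is a hypothetical shrinkage weight (an assumption, not
    the paper's penalty). With a positive definite target, any alpha > 0
    yields a positive definite result.
    """
    centered = X - mean
    weighted = centered * resp[:, None]
    scatter = weighted.T @ centered / resp.sum()
    return (1.0 - alpha) * scatter + alpha * target


def m_step(X, resp, targets, alpha):
    """One regularized M-step for a K-component GMM.

    X: (n, d) data; resp: (n, K) responsibilities from the E-step;
    targets: (K, d, d) structured target covariances (assumed prior knowledge).
    """
    n = X.shape[0]
    nk = resp.sum(axis=0)                  # effective cluster sizes
    weights = nk / n                       # mixing proportions
    means = (resp.T @ X) / nk[:, None]     # weighted cluster means
    covs = np.stack([
        shrunk_covariance(X, resp[:, k], means[k], targets[k], alpha)
        for k in range(resp.shape[1])
    ])
    return weights, means, covs


# Low sample support: n = 6 samples in d = 10 dimensions, K = 2 clusters.
rng = np.random.default_rng(0)
X = rng.normal(size=(6, 10))
resp = rng.dirichlet(np.ones(2), size=6)   # stand-in for E-step output
targets = np.stack([np.eye(10), np.eye(10)])

weights, means, covs = m_step(X, resp, targets, alpha=0.2)

# Unregularized update (alpha = 0) for comparison: rank-deficient when n <= d.
plain = shrunk_covariance(X, resp[:, 0], means[0], targets[0], alpha=0.0)
print("rank of unregularized update:", np.linalg.matrix_rank(plain))
print("smallest eigenvalue after shrinkage:", np.linalg.eigvalsh(covs[0]).min())
```

With fewer samples than dimensions, the unregularized update cannot have full rank, so evaluating the Gaussian density in the next E-step would fail. The shrunk update has every eigenvalue bounded below by `alpha` times the smallest eigenvalue of the target, which keeps it invertible.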
