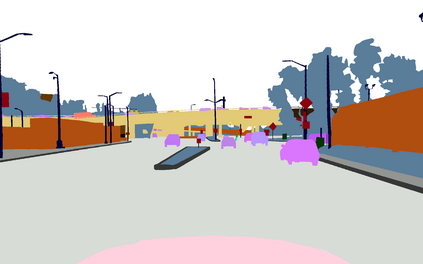

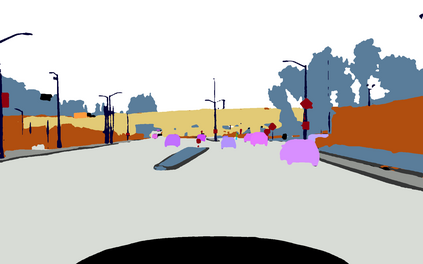

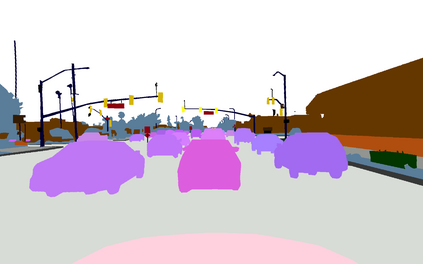

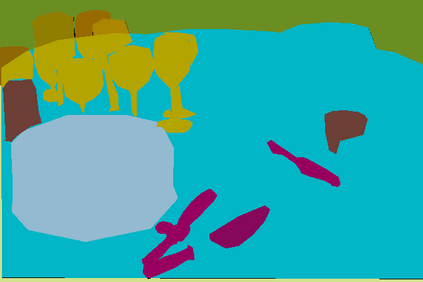

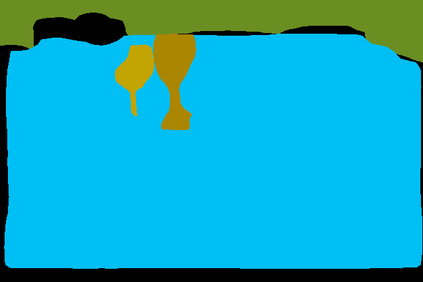

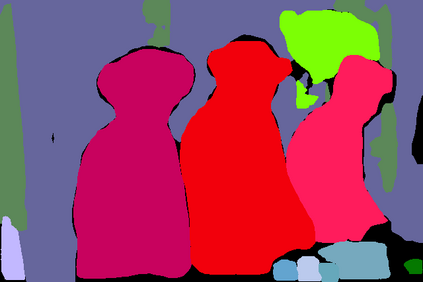

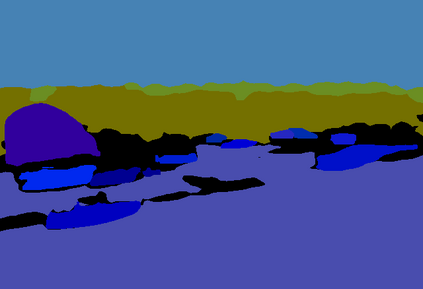

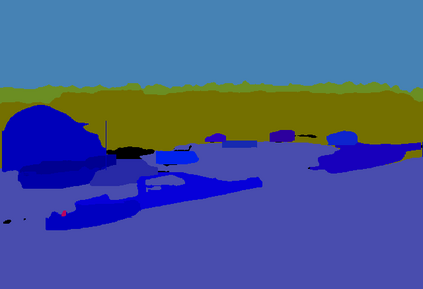

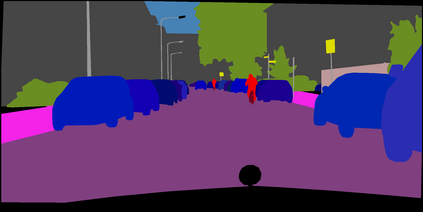

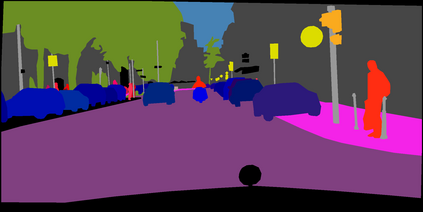

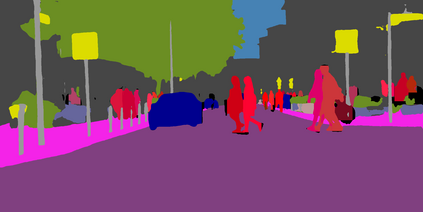

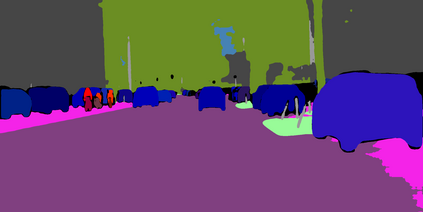

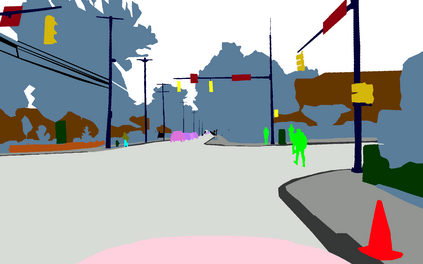

In this paper, we propose a unified panoptic segmentation network (UPSNet) for the recently proposed panoptic segmentation task. On top of a single residual backbone network, we first design a semantic segmentation head based on deformable convolution and a Mask R-CNN style instance segmentation head, which solve these two subtasks simultaneously. More importantly, we introduce a parameter-free panoptic head that solves panoptic segmentation via pixel-wise classification. It first leverages the logits from the previous two heads and then expands the representation to enable prediction of an extra unknown class, which helps better resolve the conflicts between semantic and instance segmentation. Additionally, it handles the challenge caused by the varying number of instances and permits backpropagation to the bottom modules in an end-to-end manner. Extensive experimental results on Cityscapes, COCO, and our internal dataset demonstrate that UPSNet achieves state-of-the-art performance with much faster inference.
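To make the idea of a parameter-free panoptic head more concrete, the following is a minimal NumPy sketch of pixel-wise classification over a stack of channels: one per stuff class, one per detected instance, and one extra unknown channel. The abstract does not spell out how the logits are combined, so the details here are assumptions for illustration only: the function name `panoptic_logits`, the input layout, the choice to add semantic and mask logits for each instance, and the formula used for the unknown channel.

```python
import numpy as np


def panoptic_logits(stuff_logits, thing_logits, instances):
    """Stack per-pixel logits for stuff classes, instances, and an unknown class.

    stuff_logits: (N_stuff, H, W) semantic logits of the stuff classes.
    thing_logits: (N_thing, H, W) semantic logits of the thing classes.
    instances:    list of (class_idx, (y0, x0, y1, x1), mask_logits), where
                  mask_logits is an (H, W) array already resized to the image
                  (an assumption of this sketch).

    Returns an array of shape (N_stuff + N_inst + 1, H, W). The channel count
    follows the number of instances, and no learned parameters are involved.
    """
    h, w = stuff_logits.shape[1:]
    channels = [stuff_logits]
    claimed = []  # semantic evidence claimed by each instance box

    for cls, (y0, x0, y1, x1), mask_logits in instances:
        # Semantic logits of the instance's class, kept only inside its box.
        sem_in_box = np.zeros((h, w))
        sem_in_box[y0:y1, x0:x1] = thing_logits[cls, y0:y1, x0:x1]
        claimed.append(sem_in_box)
        # Assumed combination rule: sum of semantic and mask evidence.
        channels.append((sem_in_box + mask_logits)[None])

    # Assumed unknown channel: thing evidence that no instance accounts for.
    max_thing = thing_logits.max(axis=0)
    max_claimed = np.max(claimed, axis=0) if claimed else np.zeros((h, w))
    channels.append((max_thing - max_claimed)[None])

    return np.concatenate(channels, axis=0)
```

Taking `panoptic_logits(...).argmax(axis=0)` then assigns every pixel to a stuff class, a specific instance, or the unknown class. Because the stack is built only from additions, maxima, and concatenation, gradients of a pixel-wise loss could in principle flow back to both heads, which is consistent with the end-to-end training described above.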
