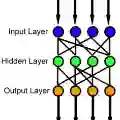

We algorithmically construct a two hidden layer feedforward neural network (TLFN) model whose weights are fixed as the unit coordinate vectors of the $d$-dimensional Euclidean space and which has $3d+2$ hidden neurons in total, yet can approximate any continuous $d$-variable function to arbitrary precision. In particular, this result shows an advantage of the TLFN model over the single hidden layer feedforward neural network (SLFN) model, since SLFNs with fixed weights cannot approximate multivariate functions.
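The contrast between SLFNs and TLFNs with fixed weights can be made concrete with a small NumPy sketch. It is not the authors' construction. The logistic activation is only a placeholder for the specially constructed activation in the paper. The split of the $3d+2$ neurons into $d$ first-layer and $2d+2$ second-layer neurons is a hypothetical choice, and all function names are illustrative. A neuron with weight $e_j$ computes $\sigma(e_j\cdot x-\theta)=\sigma(x_j-\theta)$, so an SLFN whose weights are coordinate vectors can only output additive functions $g_1(x_1)+\dots+g_d(x_d)$. For $d=2$, every additive $h$ has mixed difference $h(1,1)-h(1,0)-h(0,1)+h(0,0)=0$, while $f(x)=x_1x_2$ has mixed difference $1$. Hence $\max|f-h|\ge 1/4$ on the corners of $[0,1]^2$ for every such SLFN.

```python
import numpy as np


def logistic(z):
    # Placeholder activation (assumption): the paper builds its own
    # sigmoidal activation algorithmically; that is NOT reproduced here.
    return 1.0 / (1.0 + np.exp(-z))


def coordinate_layer(x, thresholds, sigma=logistic):
    """Hidden layer whose weights are the unit coordinate vectors e_1..e_d.

    Neuron j computes sigma(e_j . x - theta_j) = sigma(x_j - theta_j),
    so each neuron sees exactly one input coordinate.
    """
    weights = np.eye(x.shape[-1])  # row j is e_j; fixed, never trained
    return sigma(weights @ x - thresholds)


def tlfn(x, theta1, w2, theta2, c, sigma=logistic):
    """Hypothetical TLFN: d coordinate neurons, then len(theta2) neurons.

    With len(theta2) = 2d + 2 the total is d + (2d + 2) = 3d + 2 hidden
    neurons; this split is an illustrative assumption.
    """
    h1 = coordinate_layer(x, theta1, sigma)  # shape (d,)
    h2 = sigma(w2 @ h1 - theta2)             # shape (m,), mixes coordinates
    return float(c @ h2)


def slfn_coordinate_weights(x, coords, thresholds, coeffs, sigma=logistic):
    """SLFN whose neuron i has the fixed weight e_{coords[i]}.

    Output: sum_i c_i * sigma(x[coords[i]] - theta_i), an additive function.
    """
    return float(coeffs @ sigma(x[coords] - thresholds))


CORNERS = [(1.0, 1.0), (1.0, 0.0), (0.0, 1.0), (0.0, 0.0)]


def mixed_difference(h):
    """h(1,1) - h(1,0) - h(0,1) + h(0,0); zero for every additive h."""
    signs = [1.0, -1.0, -1.0, 1.0]
    return sum(s * h(np.array(p)) for s, p in zip(signs, CORNERS))


rng = np.random.default_rng(0)
n = 20
coords = rng.integers(0, 2, size=n)  # each neuron looks at x_1 or x_2
thresholds = rng.normal(size=n)
coeffs = rng.normal(size=n)


def slfn(x):
    return slfn_coordinate_weights(x, coords, thresholds, coeffs)


def target(x):
    return x[0] * x[1]


# Delta(slfn) vanishes up to rounding while Delta(target) = 1, so at some
# corner |target - slfn| >= |1 - Delta(slfn)| / 4, roughly 1/4, whatever
# thresholds and coefficients are chosen.
corner_error = max(abs(target(np.array(p)) - slfn(np.array(p)))
                   for p in CORNERS)
print(mixed_difference(slfn), mixed_difference(target), corner_error)

d = 2
tlfn_out = tlfn(np.array([0.3, 0.7]), rng.normal(size=d),
                rng.normal(size=(2 * d + 2, d)), rng.normal(size=2 * d + 2),
                rng.normal(size=2 * d + 2))
```

In the TLFN sketch, the second hidden layer passes weighted combinations of all coordinate neurons through $\sigma$ again. This is where non-additive dependence on $(x_1,\dots,x_d)$ can enter. The `tlfn` function only illustrates this wiring and makes no claim about reaching the paper's approximation guarantee.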
