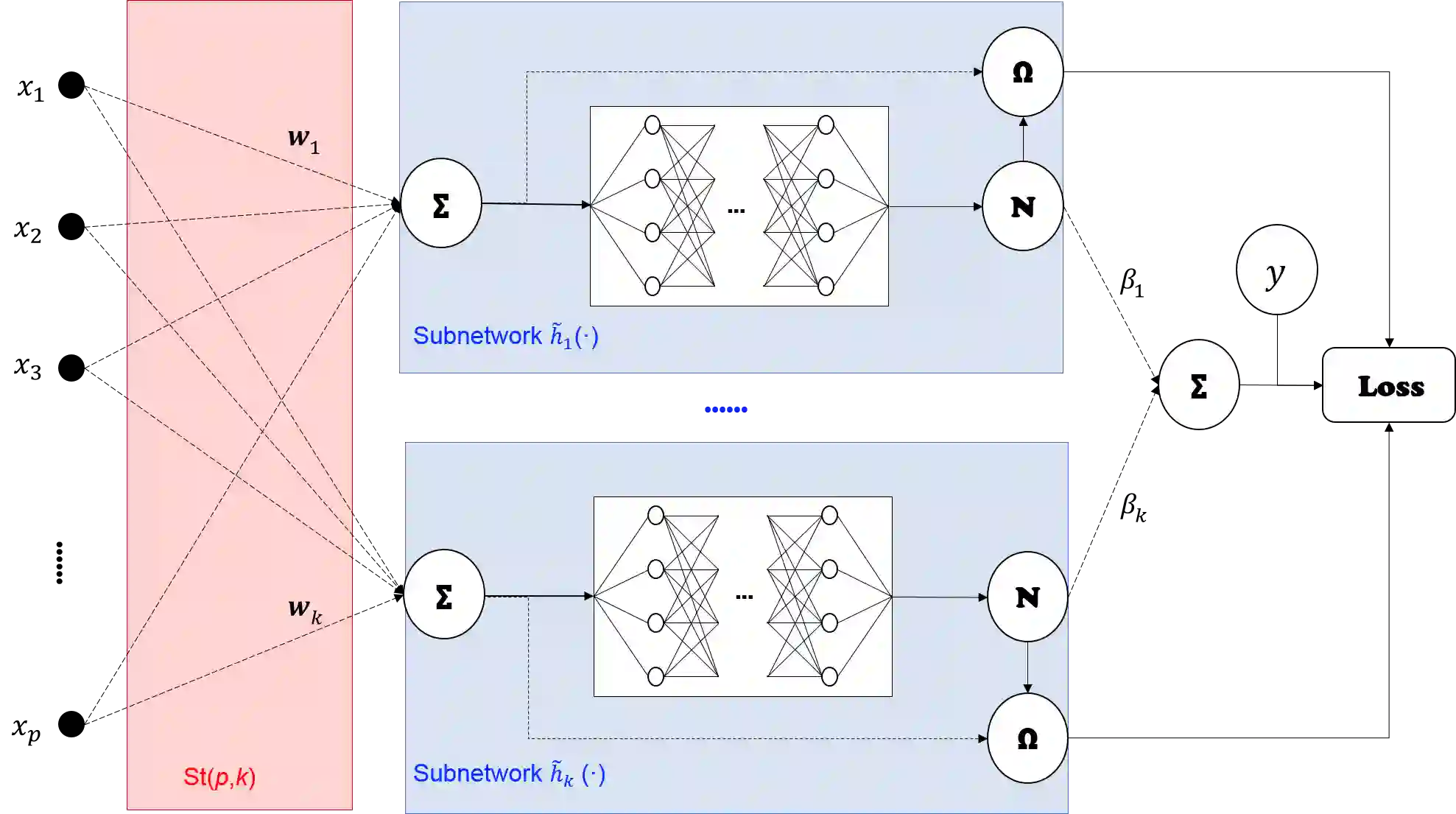

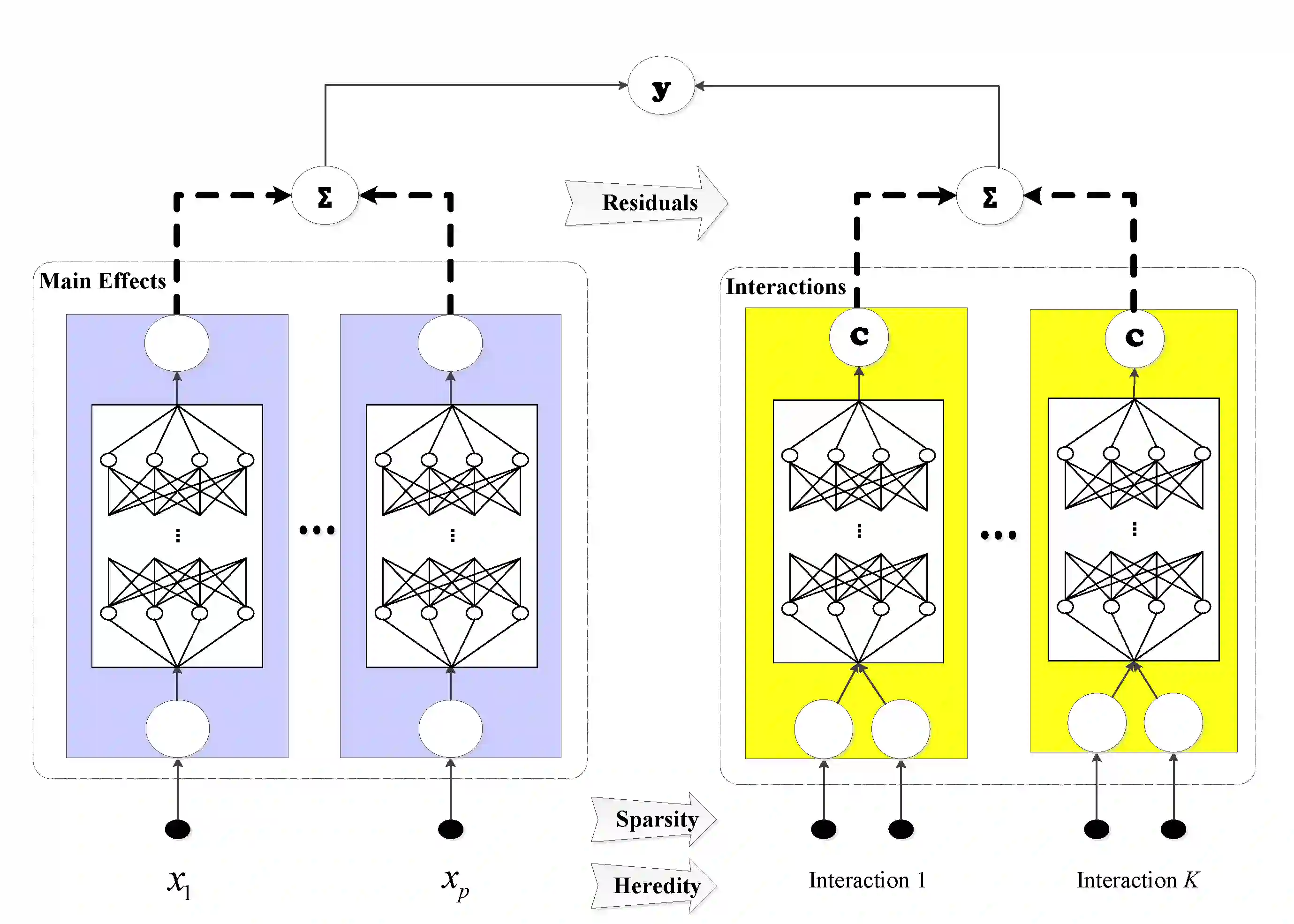

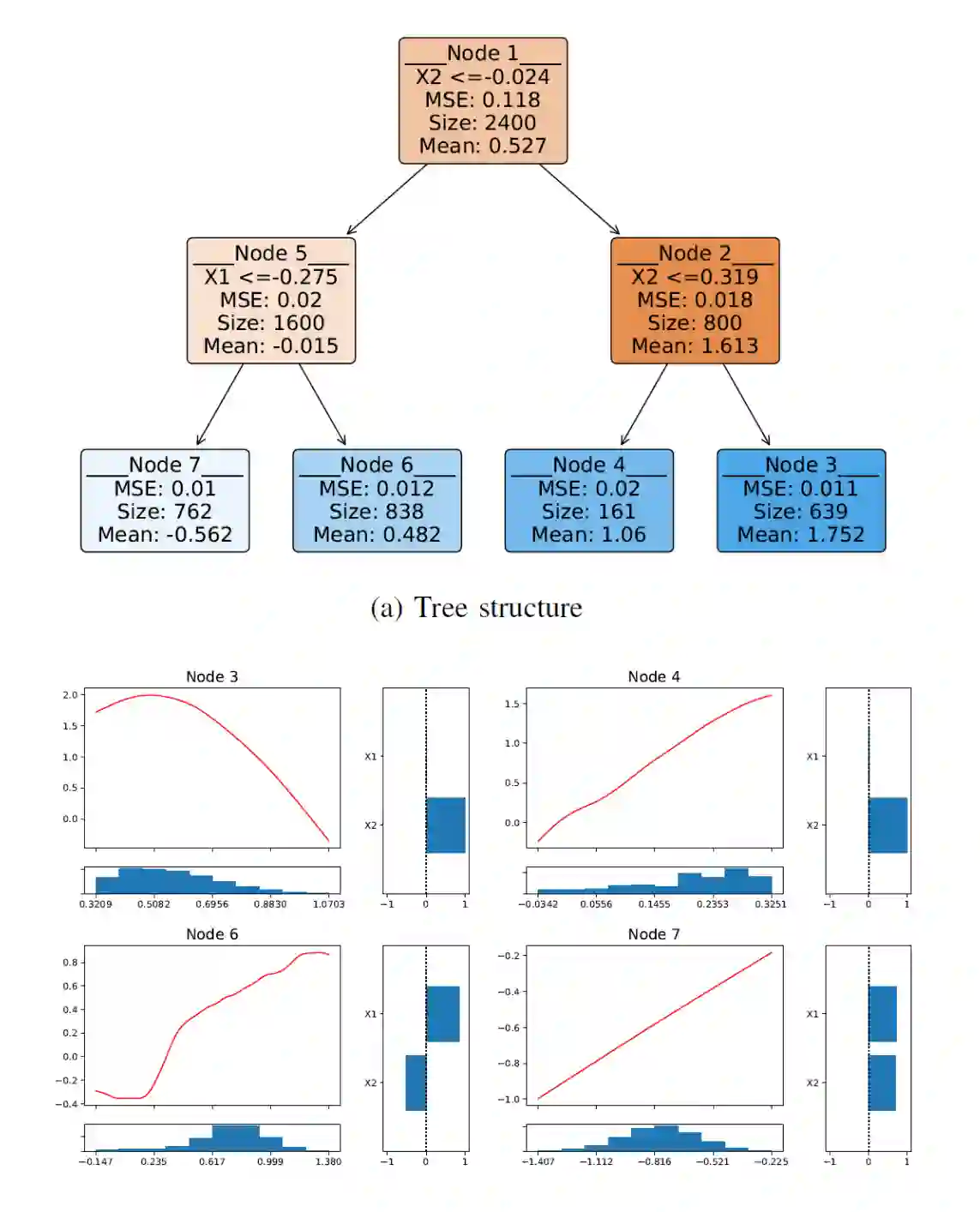

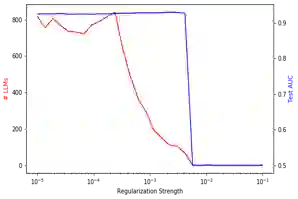

Interpretable machine learning (IML) is becoming increasingly important in highly regulated industry sectors that bear on the health, safety, or fundamental rights of human beings. In general, inherently interpretable models should be adopted because of their transparency and explainability, while black-box models with model-agnostic explainability can be more difficult to defend under regulatory scrutiny. To assess the inherent interpretability of a machine learning model, we propose a qualitative template based on feature effects and model architecture constraints. The template provides design principles for developing high-performance IML models, with examples drawn from our recent work on ExNN, GAMI-Net, SIMTree, and the Aletheia toolkit for local linear interpretability of deep ReLU networks. We further demonstrate how to design an interpretable ReLU DNN model and evaluate its conceptual soundness in a real case study of predicting credit default in home lending. We hope that this work will provide a practical guide to developing inherently interpretable models for high-risk applications in the banking industry, as well as in other sectors.
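The phrase "local linear interpretability of deep ReLU networks" rests on a standard property: within a region where the on/off pattern of the ReLU units is fixed, the network is exactly an affine function of its input. The following is a minimal NumPy sketch of that property, not the Aletheia toolkit's API; the function names `forward` and `local_linear_model`, the layer sizes, and the random weights are all hypothetical and chosen only for illustration.

```python
import numpy as np


def forward(weights, biases, x):
    """Plain forward pass: ReLU on hidden layers, linear output layer."""
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        h = np.maximum(W @ h + b, 0.0)
    return weights[-1] @ h + biases[-1]


def local_linear_model(weights, biases, x):
    """Return (A, c) such that the network equals A @ z + c for every z
    that shares the ReLU activation pattern of x (hypothetical helper)."""
    A = np.eye(x.shape[0])      # running affine map: h = A @ x + c
    c = np.zeros(x.shape[0])
    h = x
    for W, b in zip(weights[:-1], biases[:-1]):
        pre = W @ h + b
        mask = (pre > 0).astype(float)   # 1 where the unit is active
        # On this region ReLU is multiplication by diag(mask).
        A = (mask[:, None] * W) @ A
        c = mask * (W @ c + b)
        h = np.maximum(pre, 0.0)
    W_out, b_out = weights[-1], biases[-1]
    return W_out @ A, W_out @ c + b_out


# Illustrative network: 3 inputs, two hidden layers of 5 units, 1 output.
rng = np.random.default_rng(0)
sizes = [3, 5, 5, 1]
weights = [rng.normal(size=(m, n)) for n, m in zip(sizes[:-1], sizes[1:])]
biases = [rng.normal(size=m) for m in sizes[1:]]

x = rng.normal(size=3)
A, c = local_linear_model(weights, biases, x)

# The local coefficients A play the role of feature effects at x.
print("local coefficients:", A.ravel())
print("matches forward pass:", np.allclose(forward(weights, biases, x), A @ x + c))
```

The row of `A` can be read like the coefficients of a linear model that is valid near `x`, which is what makes each region of a ReLU network individually inspectable.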
