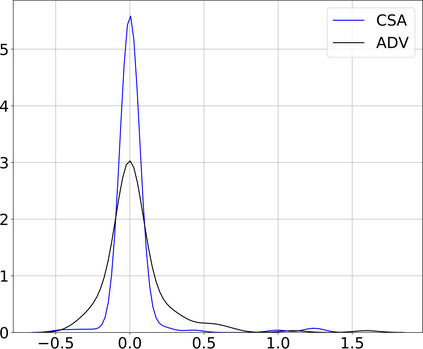

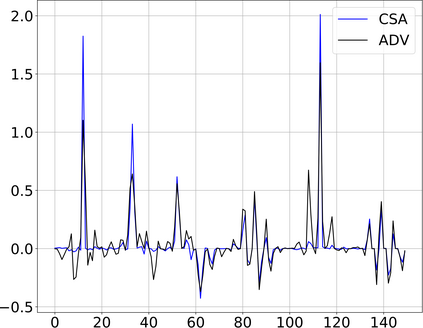

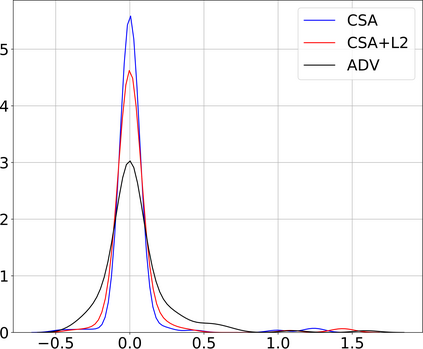

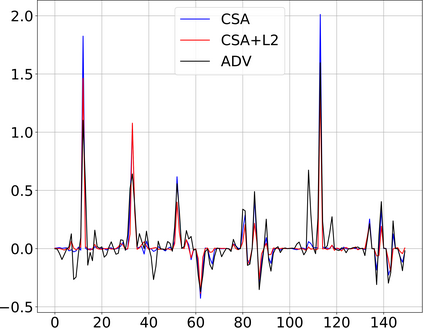

In adversarial learning, it is sometimes necessary to improve the performance of certain special classes or to give them particular protection against attacks. This paper proposes a framework that combines cost-sensitive classification with adversarial learning to train a model that distinguishes between protected and unprotected classes, so that the protected classes are less vulnerable to adversarial examples. Within this framework, we observe an interesting phenomenon during the training of deep neural networks, which we call the Min-Max property: the absolute values of most parameters in the convolutional layer approach zero, while the absolute values of a few parameters grow significantly larger. Based on this Min-Max property, which we formulate and analyze from the perspective of random distributions, we further build a new defense model that improves robustness against adversarial examples. An advantage of this model is that it no longer requires adversarial training and is therefore more computationally efficient than most existing models, which do require it. Experiments confirm that, in terms of the average accuracy over all classes, our model performs almost the same as existing models when no attack occurs and better than them when an attack occurs. In particular, in terms of the accuracy on protected classes, the proposed model is much better than existing models when an attack occurs.
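The abstract does not spell out the cost-sensitive loss or a formal test for the Min-Max property, so the following is only a minimal sketch under stated assumptions, not the authors' method. `cost_sensitive_cross_entropy` scales softmax cross-entropy by a hypothetical per-class cost (larger for protected classes), and `min_max_summary` is a hypothetical diagnostic reporting how many weights are near zero and how much of the total magnitude the largest few carry. The function names, the threshold `near_zero`, and `top_fraction` are all illustrative assumptions.

```python
import math
from typing import List, Sequence, Tuple


def cost_sensitive_cross_entropy(
    logits: Sequence[float], label: int, class_costs: Sequence[float]
) -> float:
    """Softmax cross-entropy for one example, scaled by the cost of its true class.

    Hypothetical weighting scheme: giving protected classes a larger cost
    makes misclassifying them more expensive during training.
    """
    # Numerically stable log-sum-exp: subtract the max logit before exponentiating.
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    # -log softmax(logits)[label], multiplied by the class-specific cost.
    return class_costs[label] * (log_sum_exp - logits[label])


def min_max_summary(
    weights: Sequence[float], near_zero: float = 1e-2, top_fraction: float = 0.05
) -> Tuple[float, float]:
    """Hypothetical diagnostic for a Min-Max-like weight distribution.

    Returns (share of weights with |w| < near_zero,
             share of the total |w| mass held by the largest top_fraction of weights).
    Both values close to 1 suggest most weights are near zero while a few dominate.
    """
    mags: List[float] = sorted((abs(w) for w in weights), reverse=True)
    n = len(mags)
    if n == 0:
        raise ValueError("weights must be non-empty")
    frac_small = sum(1 for a in mags if a < near_zero) / n
    # Number of "large" weights to inspect; at least one.
    k = max(1, round(top_fraction * n))
    total = sum(mags)
    top_share = sum(mags[:k]) / total if total > 0 else 0.0
    return frac_small, top_share


if __name__ == "__main__":
    # Class 0 is "protected" (cost 2.0); class 1 is unprotected (cost 1.0).
    costs = [2.0, 1.0]
    print(cost_sensitive_cross_entropy([0.0, 0.0], 0, costs))  # 2 * log(2), about 1.386
    print(cost_sensitive_cross_entropy([0.0, 0.0], 1, costs))  # log(2), about 0.693

    # 95 tiny weights and 5 large ones mimic the described Min-Max pattern.
    print(min_max_summary([0.001] * 95 + [1.0] * 5))  # frac_small 0.95, top_share about 0.98
```

With identical logits, the protected class incurs twice the loss of the unprotected one, which is the intended effect of a per-class cost. PyTorch's `torch.nn.CrossEntropyLoss` offers a comparable per-class `weight` argument if the sketch is moved to a real training loop.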

翻译:有必要改善某些特殊班级的绩效,或特别保护他们免受对抗性学习攻击。本文件提出一个将成本敏感分类和对抗性学习相结合的框架,以共同培训一种能够区分受保护和无保护班级的模式,这样受保护班级就比较不易受敌对性例子的影响。在这个框架中,我们发现在深层神经网络(称为Min-Max属性)的培训过程中,一个有趣的现象,即,在革命层方法中,大多数参数的绝对值为零,而少数参数的绝对值则大得多。根据这个在随机分布的情况下制定和分析的Min-Max属性,我们进一步建立了一个新的防御模型,以对抗敌对性对抗性强力增强的对抗性实例。这个模型的优点是,它不再需要对抗性培训,因此,其计算效率高于大多数现有的需要对抗性培训的模式。实验性地证实,关于所有班级的平均准确度,我们的模式与现有模型几乎相同,当攻击没有发生时,而且攻击发生时比现有模型好得多。具体地说,在攻击发生受保护班级时,关于攻击的准确性模型发生时,拟议的是更好的。