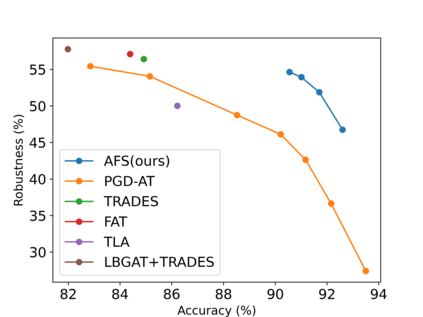

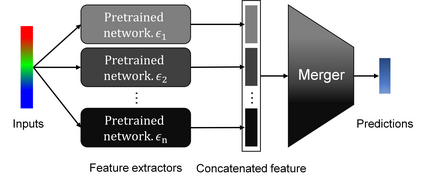

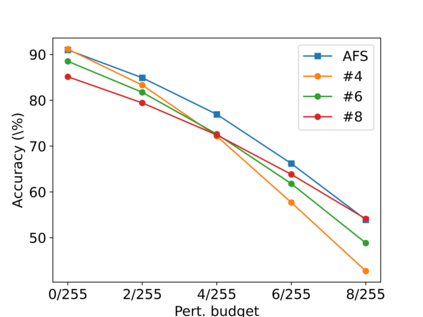

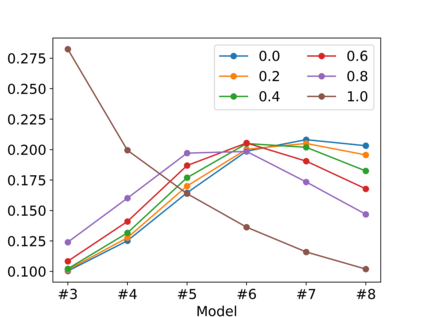

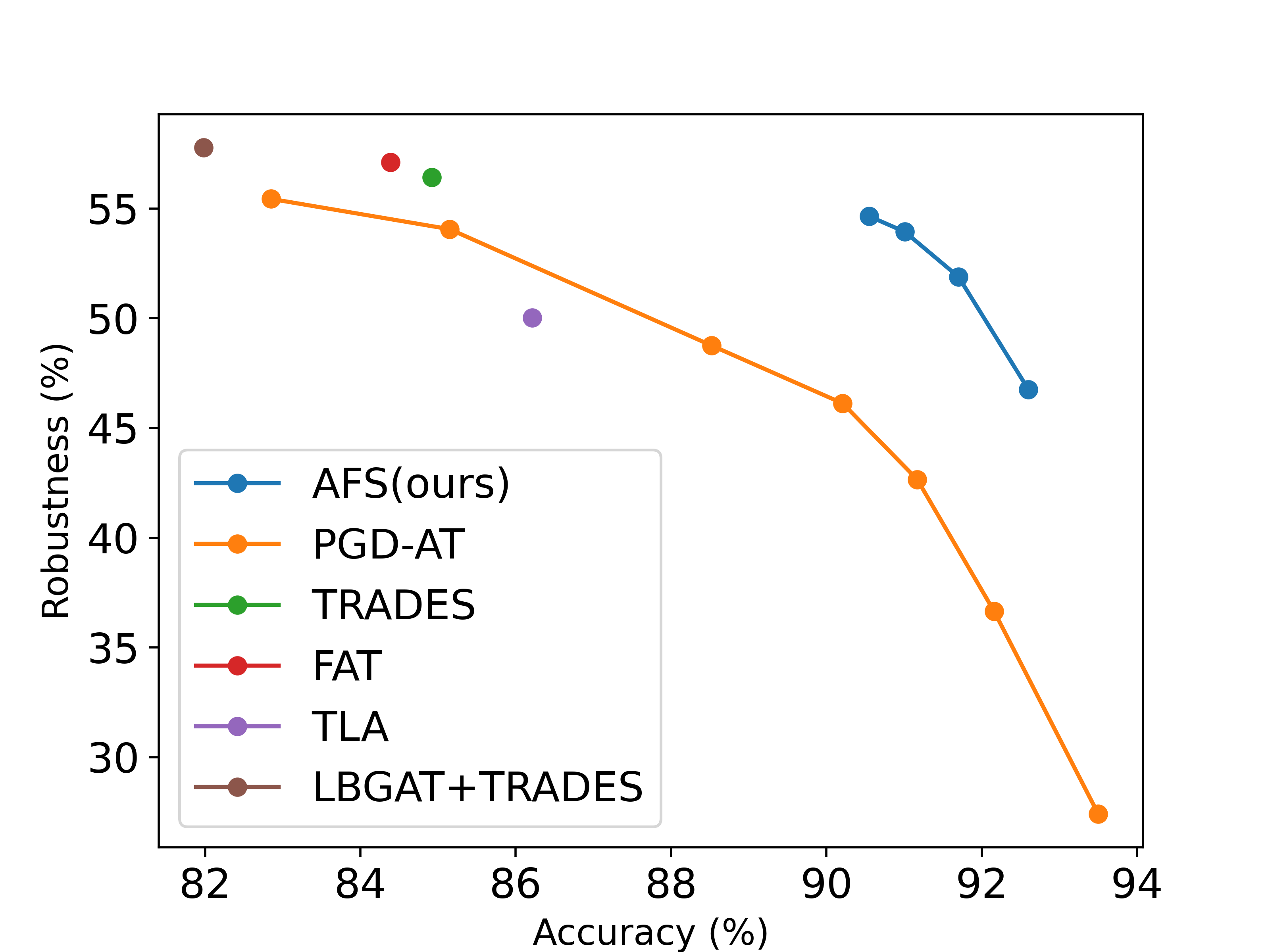

Deep Neural Networks (DNNs) have achieved remarkable performance on a variety of applications but are extremely vulnerable to adversarial perturbations. To address this issue, various defense methods have been proposed to enhance model robustness. Unfortunately, the most representative and promising methods, such as adversarial training and its variants, usually degrade model accuracy on benign samples, limiting practical utility. This indicates that it is difficult to extract both robust and accurate features using a single network under certain conditions, such as limited training data, resulting in a trade-off between accuracy and robustness. To tackle this problem, we propose an Adversarial Feature Stacking (AFS) model that can jointly take advantage of features with varied levels of robustness and accuracy, thus significantly alleviating the aforementioned trade-off. Specifically, we adopt multiple networks adversarially trained with different perturbation budgets to extract either more robust features or more accurate features. These features are then fused by a learnable merger to give final predictions. We evaluate the AFS model on the CIFAR-10 and CIFAR-100 datasets with strong adaptive attack methods, and it significantly advances the state of the art in terms of the trade-off. Without extra training data, the AFS model achieves a benign accuracy improvement of 6% on CIFAR-10 and 9% on CIFAR-100 with comparable or even stronger robustness than state-of-the-art adversarial training methods. This work demonstrates the feasibility of obtaining both accurate and robust models when training data is limited.
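The stacking idea in the abstract (several feature extractors, each tied to a different perturbation budget, feeding one learnable merger) can be illustrated with a rough forward-pass sketch. Everything below is an assumption made for illustration: the budget values, the dimensions, the random linear "extractors" standing in for adversarially trained networks, and the linear merger are hypothetical and are not taken from the paper. No training or attack is performed.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def make_extractor(in_dim: int, feat_dim: int, seed: int) -> Callable[[Sequence[float]], Vector]:
    """Return a fixed random linear map followed by ReLU.

    Placeholder for a network adversarially trained with one perturbation
    budget; a real extractor would be a trained deep network.
    """
    rng = random.Random(seed)
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(feat_dim)]

    def extract(x: Sequence[float]) -> Vector:
        return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in weights]

    return extract


def stack_features(extractors: Sequence[Callable[[Sequence[float]], Vector]],
                   x: Sequence[float]) -> Vector:
    """Concatenate the feature vectors produced by every extractor."""
    stacked: Vector = []
    for extract in extractors:
        stacked.extend(extract(x))
    return stacked


class LinearMerger:
    """Hypothetical merger: one linear layer over the stacked features.

    Weights are randomly initialised here; in practice they would be learned.
    """

    def __init__(self, in_dim: int, num_classes: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.weights = [[rng.gauss(0.0, 0.1) for _ in range(in_dim)]
                        for _ in range(num_classes)]
        self.bias = [0.0] * num_classes

    def logits(self, features: Sequence[float]) -> Vector:
        return [sum(w * f for w, f in zip(row, features)) + b
                for row, b in zip(self.weights, self.bias)]

    def predict(self, features: Sequence[float]) -> int:
        scores = self.logits(features)
        return max(range(len(scores)), key=scores.__getitem__)


# Hypothetical L-infinity budgets; 0.0 stands for standard (non-adversarial) training.
budgets = [0.0, 2 / 255, 4 / 255, 8 / 255]
in_dim, feat_dim, num_classes = 16, 8, 10

# One placeholder extractor per budget.
extractors = [make_extractor(in_dim, feat_dim, seed=i) for i in range(len(budgets))]

x = [random.Random(42 + i).random() for i in range(in_dim)]  # stand-in input
stacked = stack_features(extractors, x)
merger = LinearMerger(in_dim=len(stacked), num_classes=num_classes)
prediction = merger.predict(stacked)
```

In this sketch the merger sees all extractors' features at once, so it can weight features from low-budget extractors (presumably more accurate) against those from high-budget extractors (presumably more robust). How the actual merger is built and trained is not specified in the abstract.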
