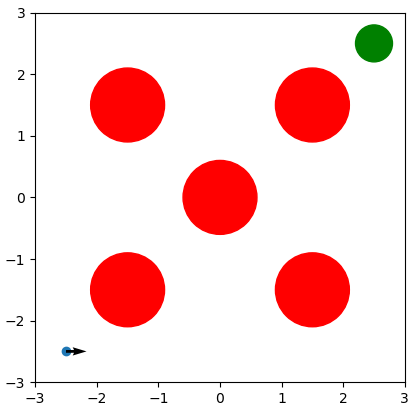

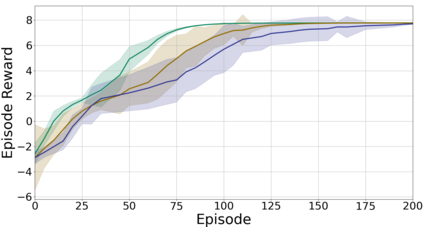

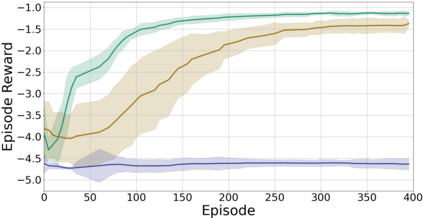

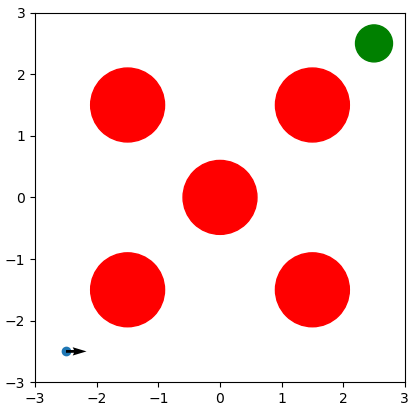

Reinforcement Learning (RL) is effective in many scenarios. However, it typically requires the exploration of a sufficiently large number of state-action pairs, some of which may be unsafe. Consequently, its application to safety-critical systems remains a challenge. Towards this end, an increasingly common approach to address safety involves the addition of a safety layer that projects the RL actions onto a safe set of actions. In turn, a challenge for such frameworks is how to effectively couple RL with the safety layer to improve the learning performance. In the context of leveraging control barrier functions for safe RL training, prior work focuses on a restricted class of barrier functions and utilizes an auxiliary neural net to account for the effects of the safety layer which inherently results in an approximation. In this paper, we frame safety as a differentiable robust-control-barrier-function layer in a model-based RL framework. As such, this approach both ensures safety and effectively guides exploration during training resulting in increased sample efficiency as demonstrated in the experiments.

翻译:在许多情景中,强化学习(RL)是有效的,但通常需要探索数量足够多的州-州-州-行动对,其中一些可能是不安全的,因此,在安全临界系统中应用这一系统仍是一项挑战。为此,一个日益常见的解决安全问题的方法是增加一个安全层,将RL行动投射为一套安全的行动。反过来,这种框架面临的挑战是如何有效地将RL与安全层结合起来,以提高学习绩效。在利用控制屏障功能进行安全RL培训方面,先前的工作侧重于有限的一类屏障功能,并使用辅助神经网来说明安全层的内在效果,在本文中,我们把安全作为一个不同的稳健控制-控制-控制-功能层置于基于模型的RL框架中。因此,这种方法既能确保安全,又能有效地指导培训期间的探索,从而提高试验中显示的样本效率。