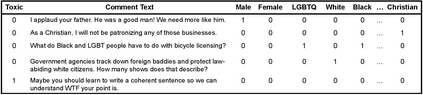

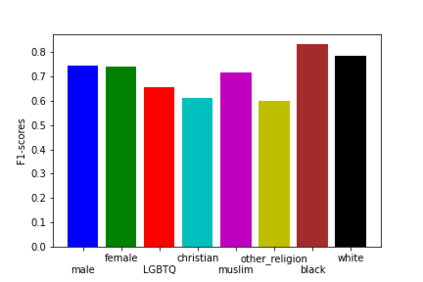

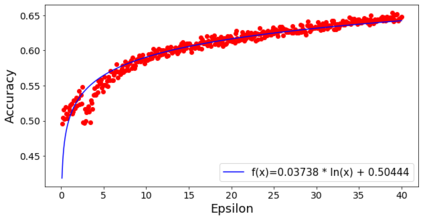

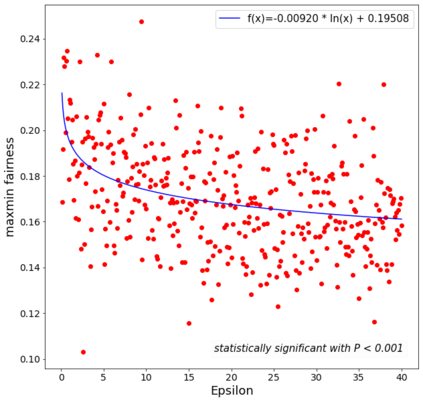

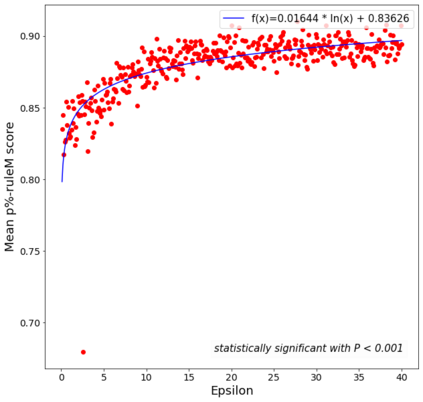

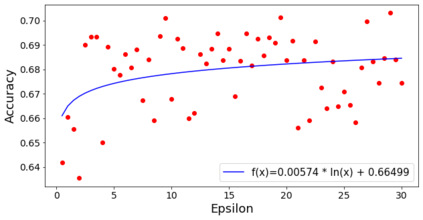

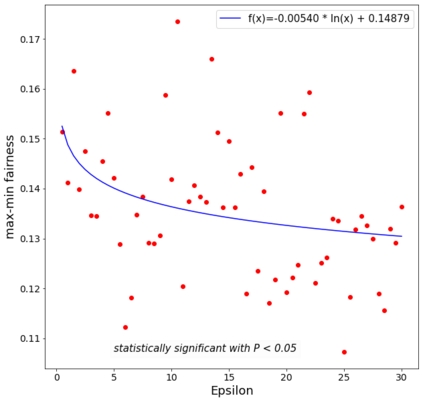

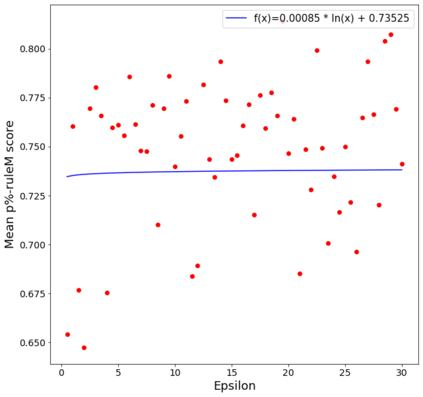

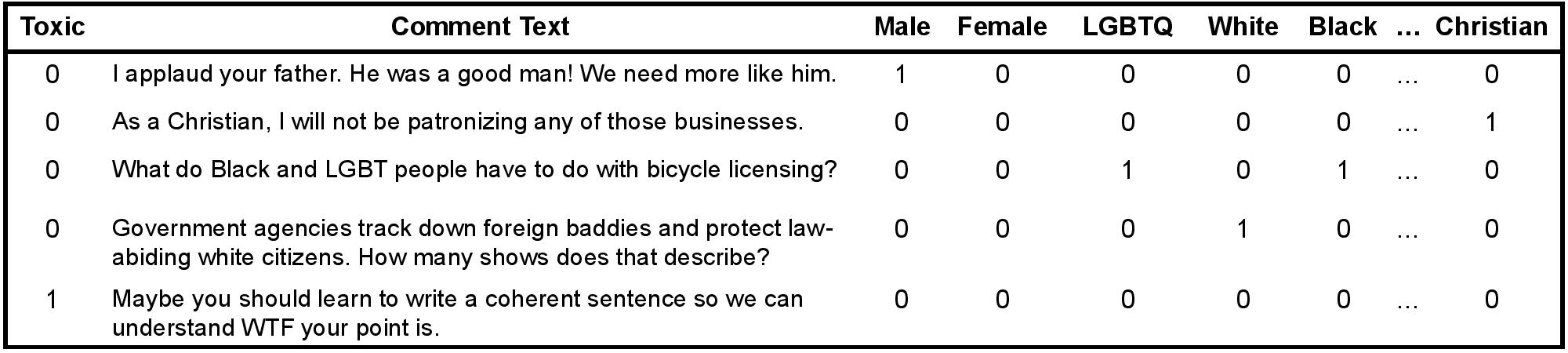

End users and regulators require artificial intelligence models that are both private and fair, but previous work suggests these objectives may be at odds. We use the CivilComments dataset to evaluate the impact of applying the *de facto* standard approach to privacy, DP-SGD, across several fairness metrics. We evaluate three implementations of DP-SGD: for dimensionality reduction (PCA), linear classification (logistic regression), and robust deep learning (Group-DRO). We establish a negative, logarithmic correlation between privacy and fairness in the case of linear classification and robust deep learning. For PCA, DP-SGD had no significant impact on fairness but, upon inspection, also did not seem to lead to private representations.
