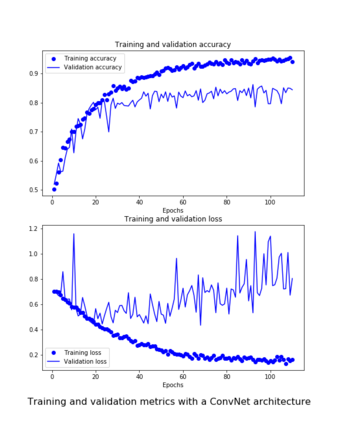

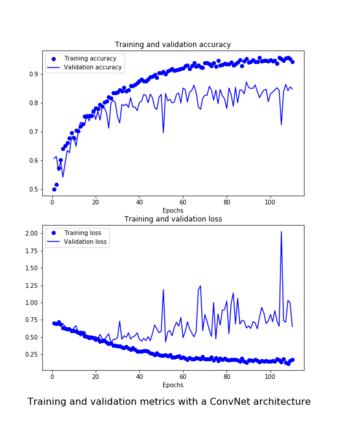

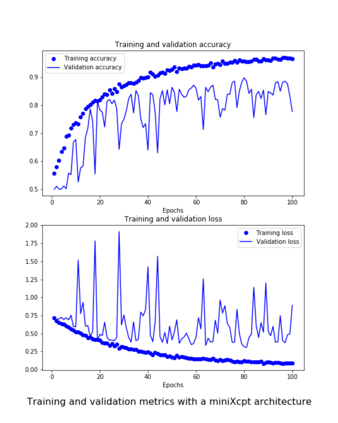

This work investigates the nondeterminism of Deep Learning (DL) training algorithms and its influence on the explainability of neural network (NN) models, using image classification examples. To discuss the issue, two convolutional neural networks (CNNs) have been trained and their results compared. The comparison serves to explore the feasibility of creating deterministic, robust DL models and deterministic explainable artificial intelligence (XAI) in practice. The successes and limitations of all the efforts carried out here are described in detail. The source code of the resulting deterministic models is listed in this work. Reproducibility is identified as a development-phase component of the Model Governance Framework proposed by the EU within its approach to excellence in AI. Furthermore, reproducibility is a requirement for establishing causality in the interpretation of model results and for building trust in the overwhelming expansion of AI system applications. This work examines the problems that have to be solved on the way to reproducibility, along with ways to deal with some of them.
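As a rough illustration of the two sources of nondeterminism discussed here, the following framework-free sketch (standard-library Python only, not the authors' code) shows (a) that routing every random choice, such as weight initialization and sample order, through one seeded generator makes a toy training run bit-for-bit repeatable, and (b) that floating-point addition is not associative, so the same numbers summed in a different order can give different results. The toy linear model and the helpers `train_linear` and `left_fold_sum` are hypothetical. In real DL frameworks, GPU kernels that accumulate with atomic operations may vary their summation order between runs, which is one reason fixing seeds alone may not be enough.

```python
import random


def train_linear(seed, data, epochs=5, lr=0.05):
    """Hypothetical toy: fit y = w*x + b with per-sample SGD.

    All randomness (initialization and sample order) comes from a single
    private, seeded RNG, so identical seeds give identical runs.
    """
    rng = random.Random(seed)                       # no hidden global RNG state
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)   # seeded initialization
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)                          # seeded sample order per epoch
        for i in order:
            x, y = data[i]
            err = (w * x + b) - y
            w -= lr * err * x
            b -= lr * err
    return w, b


def left_fold_sum(values):
    """Sum strictly left to right (plain float adds, no compensation)."""
    total = 0.0
    for v in values:
        total += v
    return total


data = [(x / 10, 2 * x / 10 + 1) for x in range(10)]

run_a = train_linear(42, data)
run_b = train_linear(42, data)
run_c = train_linear(7, data)
print(run_a == run_b)  # same seed, same operation order: identical weights
print(run_a == run_c)  # different seed: different weights

# Same three numbers, two summation orders, two different results.
print(left_fold_sum([1e16, 1.0, -1e16]))  # 1.0 is absorbed by 1e16 -> 0.0
print(left_fold_sum([1e16, -1e16, 1.0]))  # large terms cancel first -> 1.0
```

The explicit loop in `left_fold_sum` is used instead of the built-in `sum()` because recent Python versions apply compensated summation to floats, which would hide the ordering effect.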

翻译:在这项工作中,利用图像分类实例调查了深层学习培训算法的不确定性及其对神经网络模型的解释性的影响。为了讨论这一问题,对两个革命性神经网络进行了培训,并比较了结果。比较有助于探索在实践中建立确定性、强健的DL模型和可确定性可解释的人工智能的可行性。详细介绍了所有在这里进行的努力的成功和局限性。在这项工作中列出了已确定型模型的源代码。欧盟在AI方法的优异范围内提议的示范治理框架的开发阶段组成部分之一,将可复制性编入索引。此外,复制性是确定模型结果解释的因果关系和为绝大多数AI系统应用的扩展建立信任的一个必要条件。在这项工作中,必须解决的一些问题是如何复制和如何处理其中一些问题。