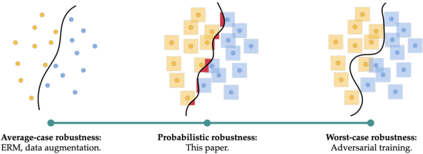

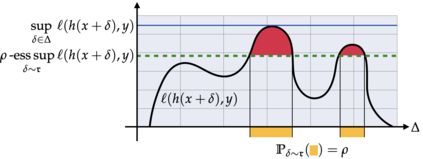

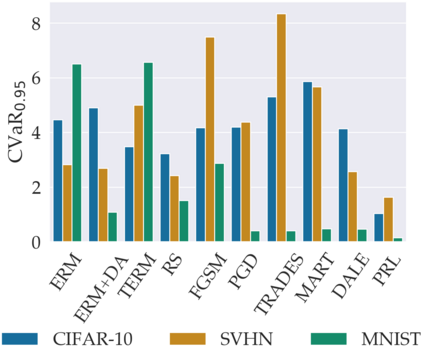

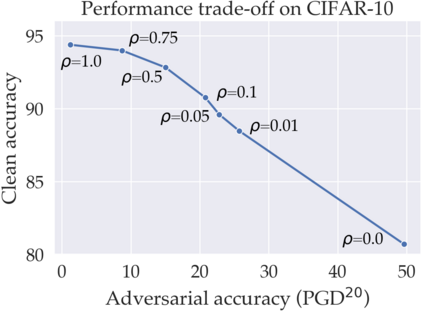

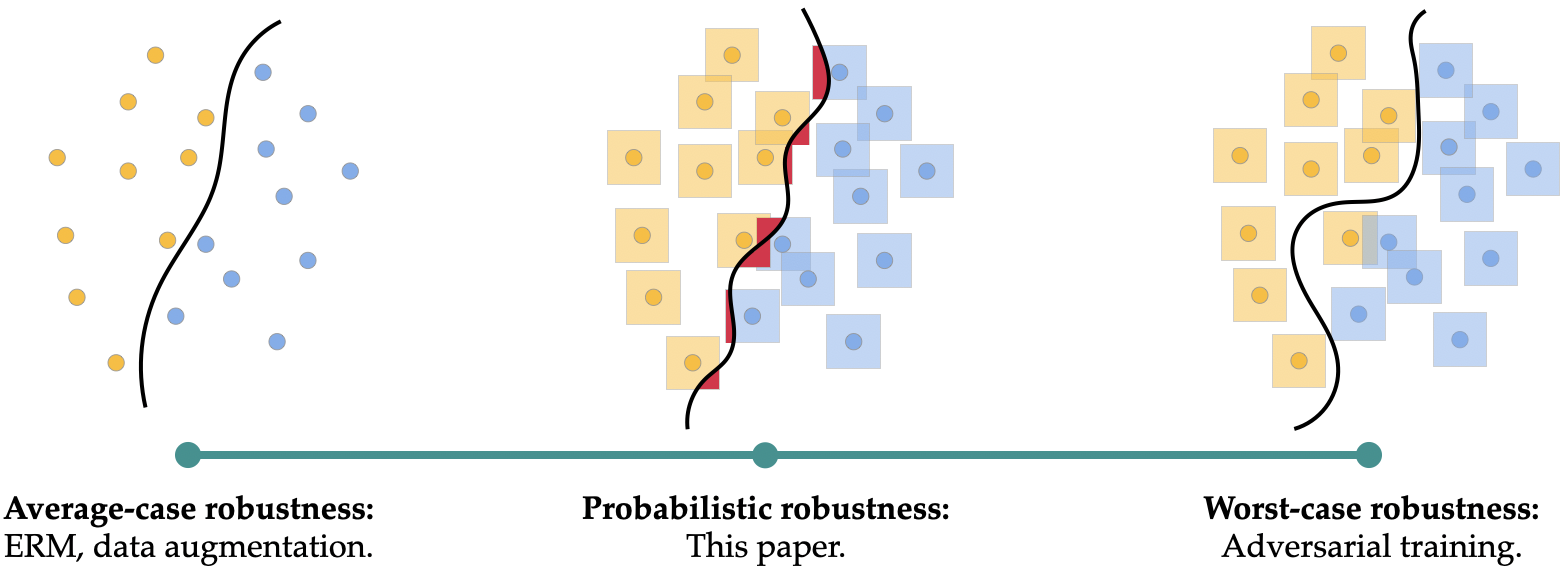

Many of the successes of machine learning are based on minimizing an averaged loss function. However, it is well known that this paradigm suffers from robustness issues that hinder its applicability in safety-critical domains. These issues are often addressed by training against worst-case perturbations of data, a technique known as adversarial training. Although empirically effective, adversarial training can be overly conservative, leading to unfavorable trade-offs between nominal performance and robustness. To this end, in this paper we propose a framework called probabilistic robustness that bridges the gap between the accurate, yet brittle average case and the robust, yet conservative worst case by enforcing robustness to most rather than to all perturbations. From a theoretical point of view, this framework overcomes the trade-offs between the performance and the sample complexity of worst-case and average-case learning. From a practical point of view, we propose a novel algorithm based on risk-aware optimization that effectively balances average- and worst-case performance at considerably lower computational cost than adversarial training. Our results on MNIST, CIFAR-10, and SVHN illustrate the advantages of this framework on the spectrum from average- to worst-case robustness.
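To make the average-case / worst-case / "most perturbations" distinction concrete, here is a minimal Python sketch under stated assumptions; it is an illustration, not the paper's algorithm. It assumes a toy 1-D linear classifier with hinge loss and perturbations drawn uniformly from `[-eps, eps]`, and all function names (`sample_perturbation_losses`, `quantile_loss`, `cvar`, and so on) are hypothetical. "Robust to most perturbations" is formalized here, as one possible choice, as an empirical quantile of the loss over random perturbations, with CVaR (the mean of the largest losses) shown as a risk-aware upper bound on that quantile. The risk-aware optimization the authors propose may differ in its details.

```python
import math
import random


def hinge_loss(score, y):
    """Hinge loss for a label y in {-1, +1} and a real-valued score."""
    return max(0.0, 1.0 - y * score)


def sample_perturbation_losses(w, x, y, eps, n_samples, rng):
    """Hypothetical toy setup: loss of the 1-D linear classifier score = w * x'
    at n_samples points x' = x + delta, with delta ~ Uniform[-eps, eps]."""
    return [hinge_loss(w * (x + rng.uniform(-eps, eps)), y)
            for _ in range(n_samples)]


def average_case(losses):
    # Mean over perturbations: the "accurate, yet brittle" end of the spectrum.
    return sum(losses) / len(losses)


def worst_case(losses):
    # Max over the *sampled* perturbations only; this underestimates the true
    # supremum that adversarial training targets.
    return max(losses)


def quantile_loss(losses, rho):
    """Empirical (1 - rho)-quantile: at most a fraction rho of the sampled
    losses exceed the returned value ("robust to most perturbations")."""
    s = sorted(losses)
    k = max(1, math.ceil((1.0 - rho) * len(s)))
    return s[k - 1]


def cvar(losses, alpha):
    """Empirical CVaR: mean of the largest ceil(alpha * n) losses.
    alpha = 1 recovers the average case; a very small alpha recovers the
    sampled worst case. It upper-bounds quantile_loss(losses, alpha)."""
    k = max(1, math.ceil(alpha * len(losses)))
    top = sorted(losses, reverse=True)[:k]
    return sum(top) / k


if __name__ == "__main__":
    rng = random.Random(0)
    losses = sample_perturbation_losses(w=1.0, x=0.5, y=1, eps=1.0,
                                        n_samples=1000, rng=rng)
    print("average  :", average_case(losses))
    print("quantile :", quantile_loss(losses, rho=0.1))
    print("CVaR     :", cvar(losses, alpha=0.1))
    print("worst    :", worst_case(losses))
```

Sweeping `rho` (or `alpha`) from 1 toward 0 moves the objective from the average case toward the sampled worst case, which mirrors the spectrum described above. In this sketch, CVaR is shown as a candidate training objective because it upper-bounds the quantile and averages over the whole tail instead of depending on a single sampled value.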