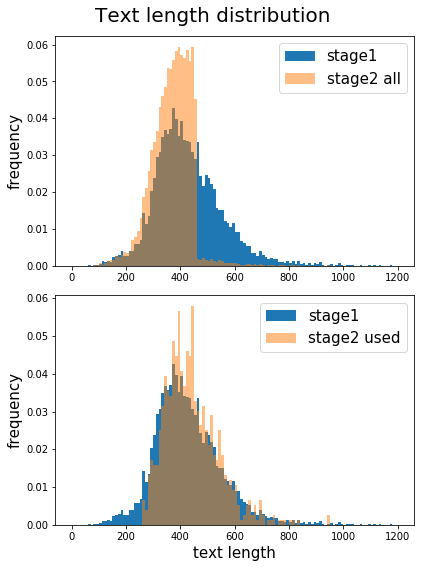

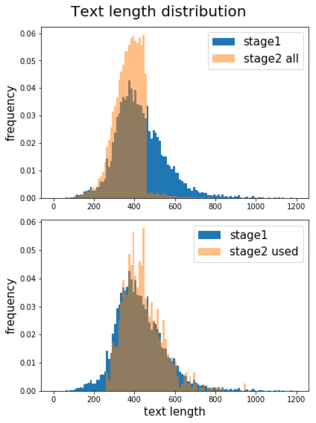

We present our 7th place solution to the Gendered Pronoun Resolution challenge, which uses BERT without fine-tuning and a novel augmentation strategy designed for contextual embedding token-level tasks. Our method anonymizes the referent by replacing candidate names with a set of common placeholder names. Besides the usual benefits of effectively increasing training data size, this approach diversifies idiosyncratic information embedded in names. Using same set of common first names can also help the model recognize names better, shorten token length, and remove gender and regional biases associated with names. The system scored 0.1947 log loss in stage 2, where the augmentation contributed to an improvements of 0.04. Post-competition analysis shows that, when using different embedding layers, the system scores 0.1799 which would be third place.

翻译:我们提出了解决性别化Pronoun解决挑战的第7个地方方案,这个方案在不作微调的情况下使用BERT,并且为嵌入象征性任务的背景设置了新的增强战略。我们的方法用一套通用的占位符名称替换候选人姓名,以此来将推荐人匿名。除了有效增加培训数据规模的通常好处外,这种方法还分散了嵌入姓名中的特异性信息。使用同一套通用的首名也可以帮助模型识别名称,缩短象征性长度,并消除与姓名相关的性别和地区偏见。系统在第二阶段损失了0.1947年的日志,而增强有助于0.04年的改进。竞争后分析显示,在使用不同的嵌入层时,系统分数为0.1799,这将是第三位。