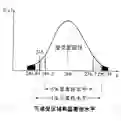

Differential privacy (DP) has become a central, rigorous concept for privacy protection over the past decade. We use Gaussian differential privacy (GDP) to gauge the level of privacy protection when releasing statistical summaries of data. GDP is a natural and easy-to-interpret differential privacy criterion based on the statistical hypothesis testing framework. The Gaussian mechanism is a natural and fundamental mechanism for perturbing multivariate statistics so that they satisfy a $\mu$-GDP criterion, where $\mu>0$ specifies the level of privacy protection. Requiring a given level of differential privacy inevitably leads to a loss of statistical utility. We improve on ordinary Gaussian mechanisms by developing rank-deficient James-Stein Gaussian mechanisms for releasing private multivariate statistics, and show that the proposed mechanisms have higher statistical utility. Laplace mechanisms, the most commonly used mechanisms in the pure DP framework, are also investigated under the GDP criterion. We show that optimal calibration of multivariate Laplace mechanisms requires more information about the statistic than its global sensitivity alone, and derive the minimal amount of Laplace perturbation needed to release $\mu$-GDP contingency tables. Gaussian mechanisms are shown to have higher statistical utility than Laplace mechanisms, except at very low levels of privacy. The utility of the proposed multivariate mechanisms is further demonstrated using differentially private hypothesis tests on contingency tables. Bootstrap-based goodness-of-fit and homogeneity tests that use the proposed rank-deficient James-Stein mechanisms exhibit higher power than natural competitors.
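As a point of reference for the ordinary Gaussian mechanism mentioned above, the following is a minimal sketch, not the paper's proposed rank-deficient James-Stein mechanism. It relies on the standard calibration in which adding independent $N(0, (\Delta_2/\mu)^2)$ noise to each coordinate of a statistic with $\ell_2$ global sensitivity $\Delta_2$ yields $\mu$-GDP. The function name `gaussian_mechanism`, its parameters, and the example counts are hypothetical, and the sensitivity $\sqrt{2}$ used for the contingency table assumes neighboring datasets differ by replacing one record.

```python
import numpy as np

def gaussian_mechanism(stat, l2_sensitivity, mu, rng=None):
    """Perturb a multivariate statistic with i.i.d. Gaussian noise.

    Assumption: `l2_sensitivity` is the l2 global sensitivity of the
    statistic; the noise scale l2_sensitivity / mu is the standard
    calibration for mu-GDP. Names here are illustrative only.
    """
    if mu <= 0 or l2_sensitivity <= 0:
        raise ValueError("mu and l2_sensitivity must be positive")
    rng = np.random.default_rng() if rng is None else rng
    stat = np.asarray(stat, dtype=float)
    sigma = l2_sensitivity / mu  # noise standard deviation per coordinate
    return stat + rng.normal(0.0, sigma, size=stat.shape)

# Hypothetical 2x2 contingency table, flattened into a vector of counts.
counts = np.array([12, 30, 7, 51])

# Assumption: replacing one record moves one unit between two cells,
# so the l2 sensitivity of the count vector is sqrt(2).
private_counts = gaussian_mechanism(counts, l2_sensitivity=np.sqrt(2), mu=1.0)
print(private_counts)
```

Smaller values of `mu` give a larger noise scale, which is the loss of statistical utility that the abstract refers to.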
