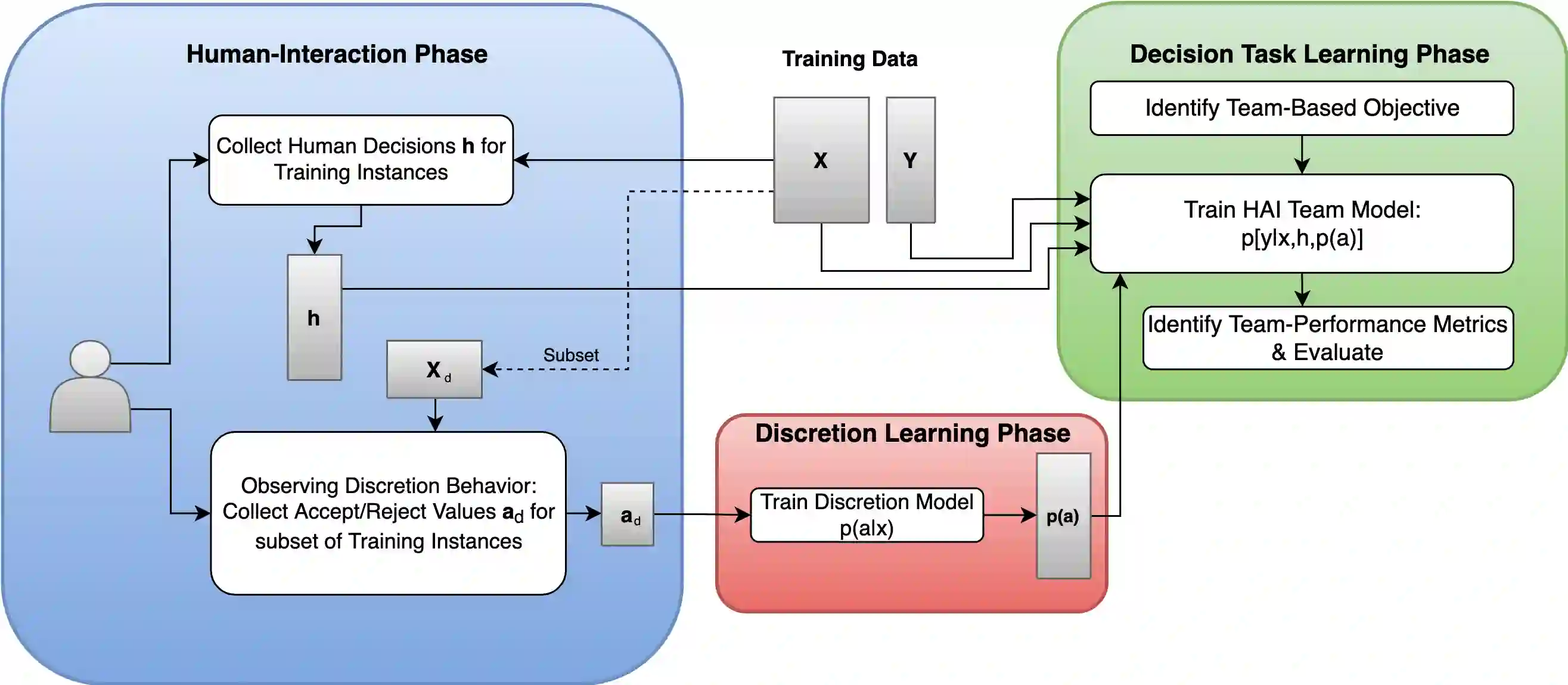

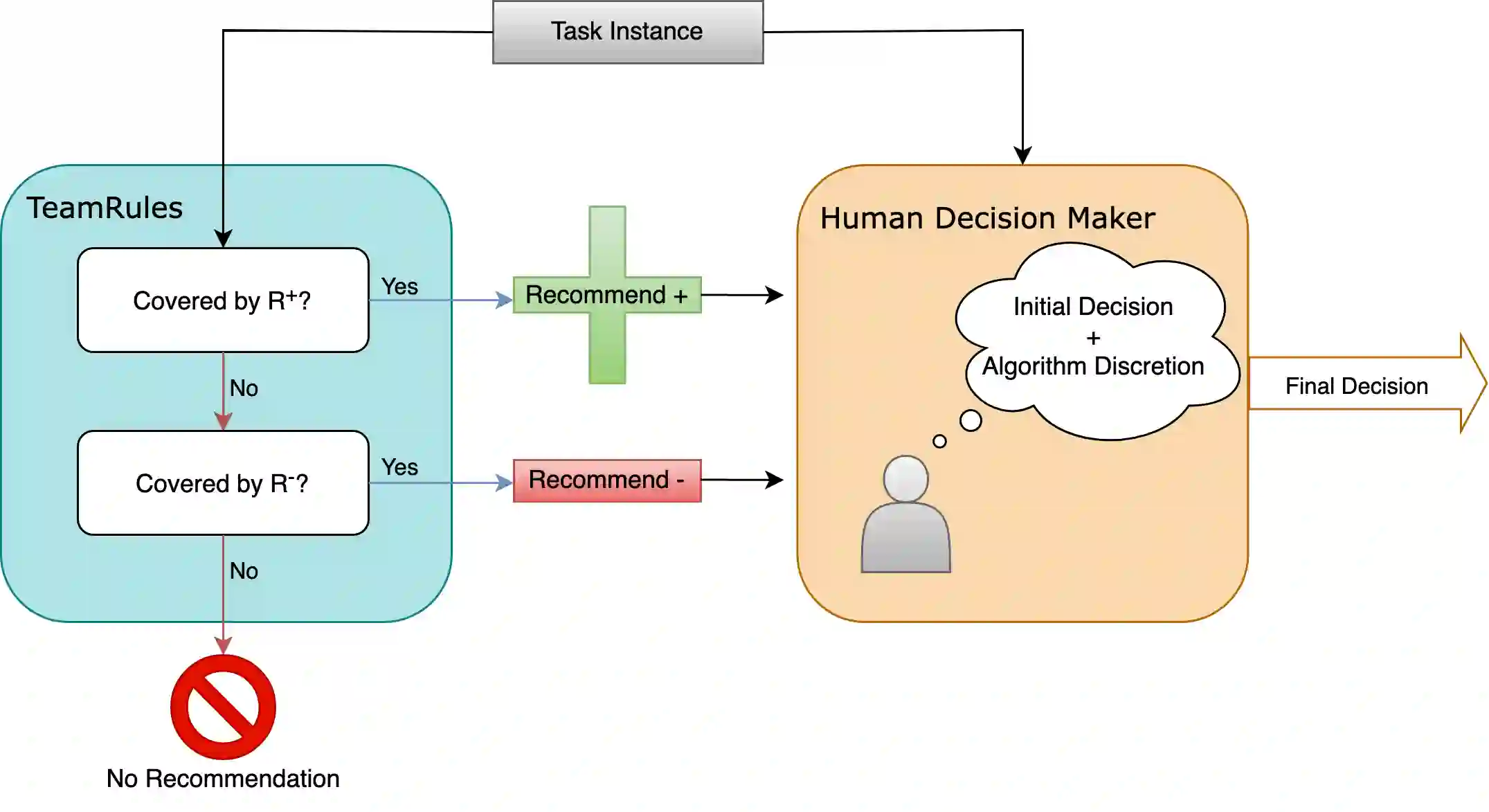

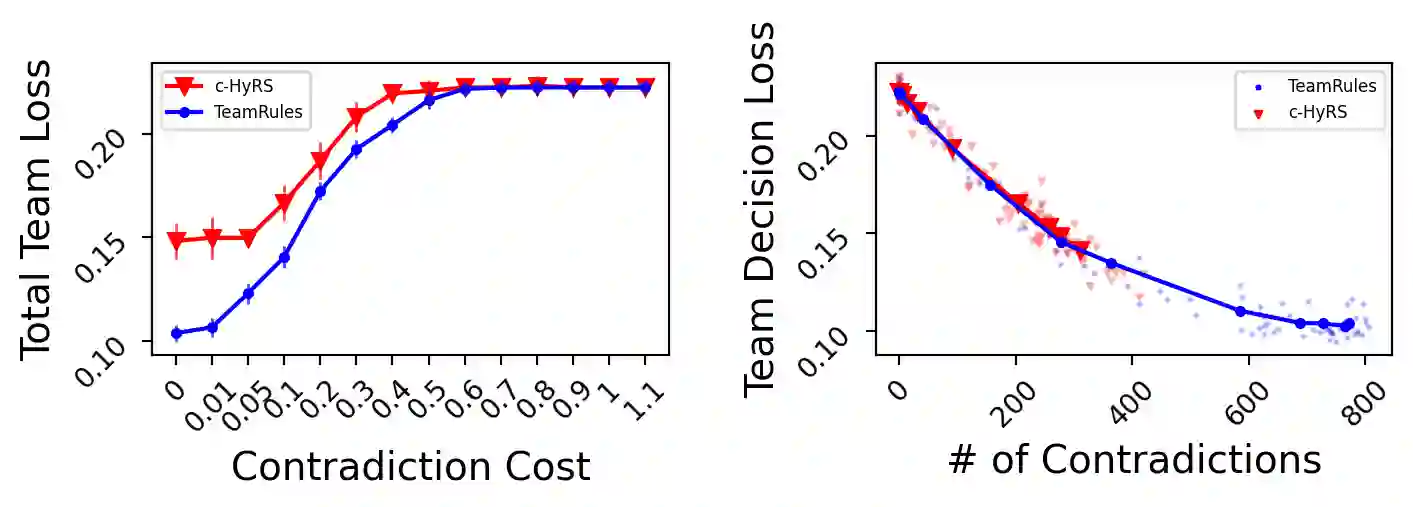

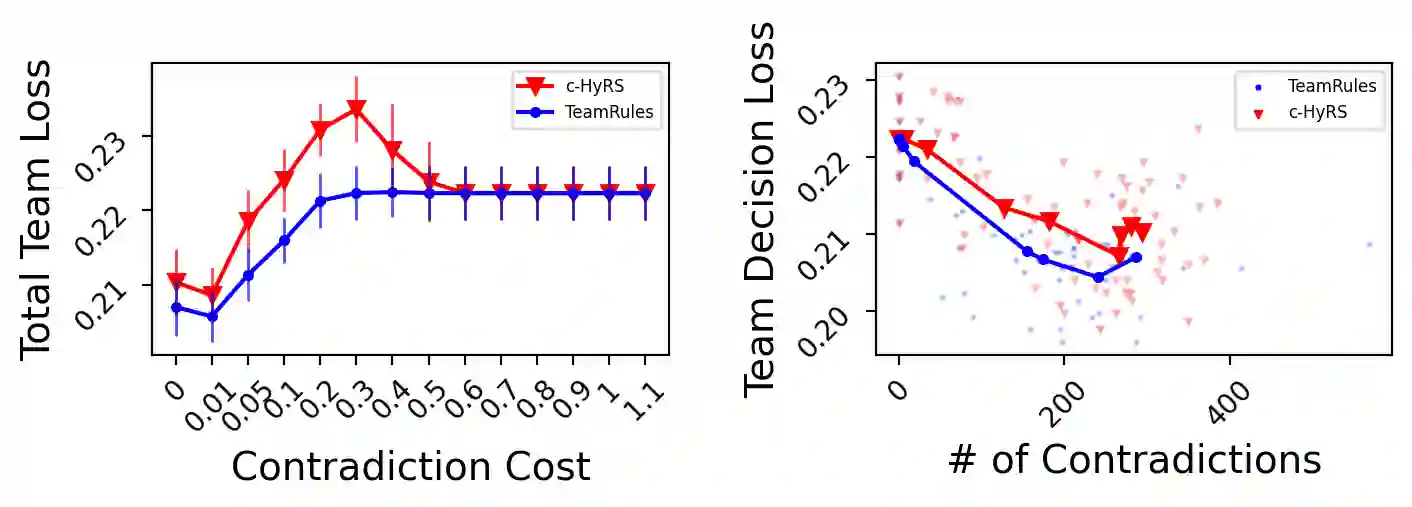

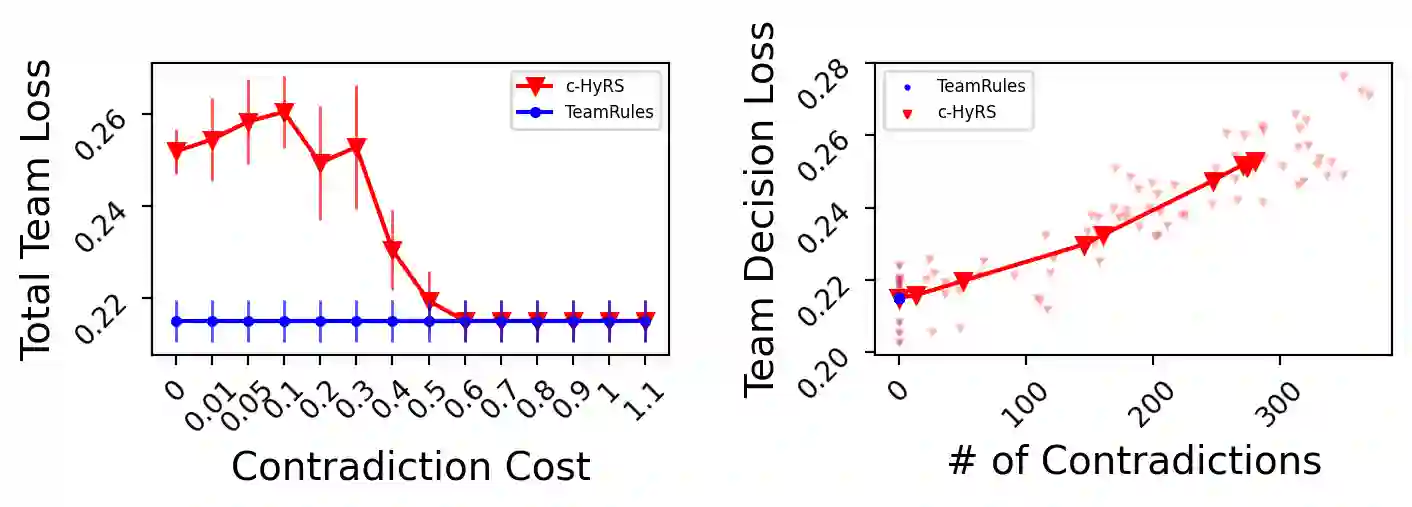

Expert decision-makers (DMs) in high-stakes AI-advised (AIDeT) settings receive and reconcile recommendations from AI systems before making their final decisions. We identify distinct properties of these settings that are key to developing AIDeT models which effectively benefit team performance. First, DMs in AIDeT settings exhibit algorithm discretion behavior (ADB), i.e., an idiosyncratic and imperfect tendency to accept or reject algorithmic recommendations for any given decision task. Second, DMs incur contradiction costs from expending decision-making resources (e.g., time and effort) when reconciling AI recommendations that contradict their own judgment. Third, the human's imperfect discretion and reconciliation costs create the need for the AI to offer advice selectively. We refer to the task of developing AI to advise humans in AIDeT settings as learning to advise, and we address this task by first introducing the AIDeT-Learning Framework. Additionally, we argue that leveraging the human partner's ADB is key to maximizing the AIDeT's decision accuracy while regularizing for contradiction costs. Finally, we instantiate our framework to develop TeamRules (TR): an algorithm that produces rule-based models and recommendations for AIDeT settings. TR is optimized to advise a human selectively and to trade off contradiction costs against team accuracy for a given environment by leveraging the human partner's ADB. Evaluations on synthetic and real-world benchmark datasets, with a variety of simulated human accuracy and discretion behaviors, show that TR robustly improves the team's objective across settings over interpretable, rule-based alternatives.
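To make the trade-off between team accuracy and contradiction costs concrete, the following is a minimal Python sketch of how a team objective of this kind could be scored. It is an illustration under stated assumptions, not the paper's formulation or the TeamRules algorithm: the function `evaluate_team`, the linear penalty `cost_weight`, the per-instance acceptance probabilities standing in for ADB, and the toy data are all hypothetical.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class TeamOutcome:
    expected_accuracy: float   # expected fraction of correct final decisions
    contradiction_rate: float  # fraction of instances where shown advice contradicts the human
    objective: float           # expected accuracy minus weighted contradiction rate


def evaluate_team(
    labels: Sequence[int],
    human: Sequence[int],
    ai: Sequence[int],
    advise: Sequence[bool],
    accept_prob: Sequence[float],
    cost_weight: float,
) -> TeamOutcome:
    """Score a human-AI team (hypothetical objective, assumed for illustration).

    accept_prob[i] stands in for the human's discretion behavior: the
    probability of adopting a contradicting recommendation on instance i.
    """
    n = len(labels)
    expected_correct = 0.0
    contradictions = 0
    for y, h, a, show, p in zip(labels, human, ai, advise, accept_prob):
        human_correct = float(h == y)
        if show and a != h:
            # Advice is shown and contradicts the human: a cost is incurred,
            # and the final decision follows the AI with probability p.
            contradictions += 1
            ai_correct = float(a == y)
            expected_correct += p * ai_correct + (1.0 - p) * human_correct
        else:
            # No advice, or advice agrees: the human's decision stands.
            expected_correct += human_correct
    accuracy = expected_correct / n
    rate = contradictions / n
    return TeamOutcome(accuracy, rate, accuracy - cost_weight * rate)


# Toy data (assumed): four binary decision tasks.
labels = [1, 0, 1, 0]
human = [1, 1, 0, 0]
ai = [1, 0, 1, 1]
accept_prob = [0.5, 0.8, 0.2, 0.9]

always = evaluate_team(labels, human, ai, [True] * 4, accept_prob, cost_weight=0.1)
selective = evaluate_team(
    labels, human, ai, [True, True, False, False], accept_prob, cost_weight=0.1
)
print(always)
print(selective)
```

In this toy setup, withholding advice on the last two tasks scores higher than always advising. On one task the human is unlikely to accept a correct recommendation, and on the other the human is likely to accept an incorrect one, so showing the advice mostly adds contradiction cost.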
