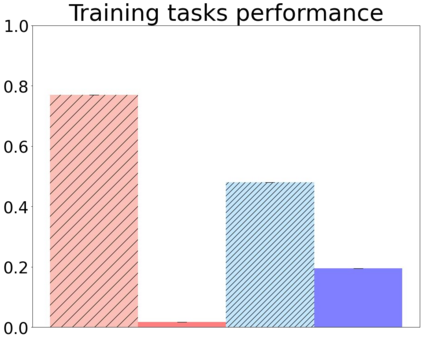

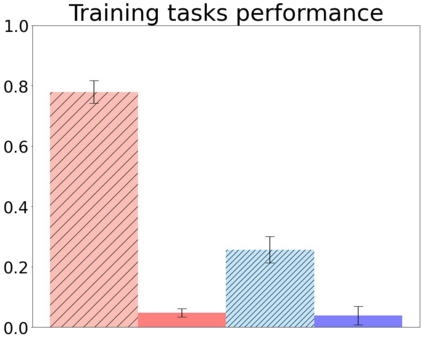

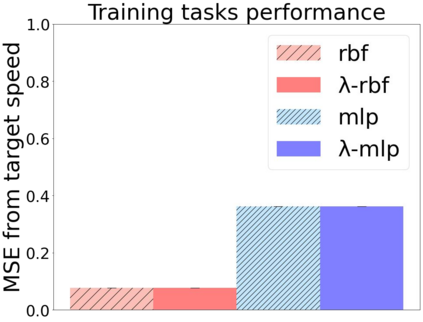

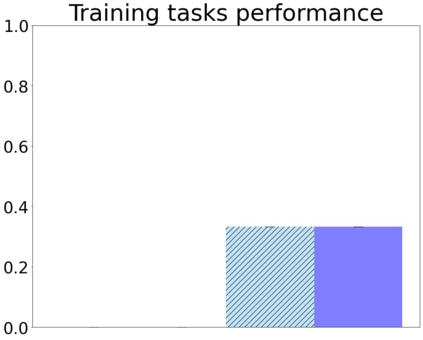

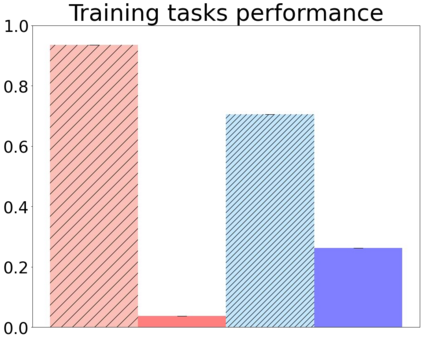

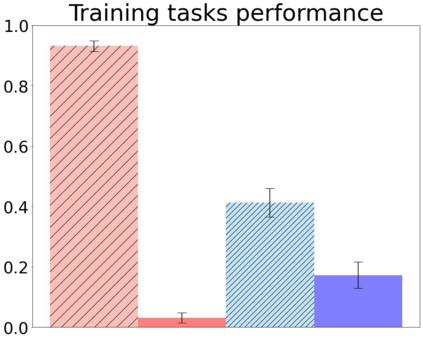

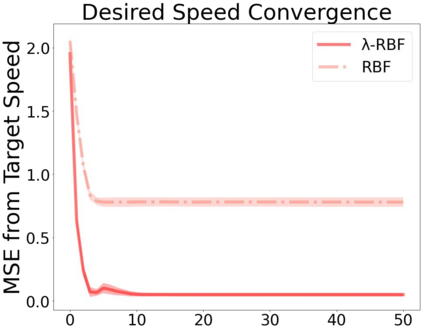

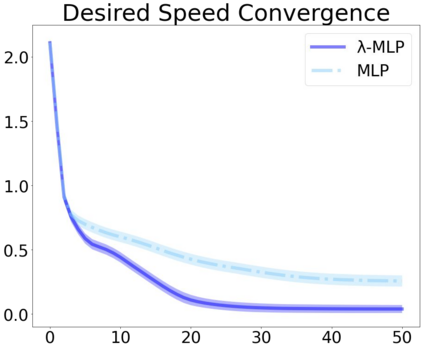

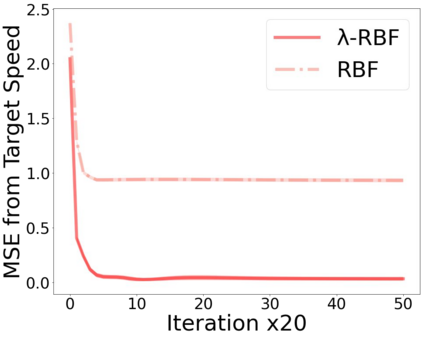

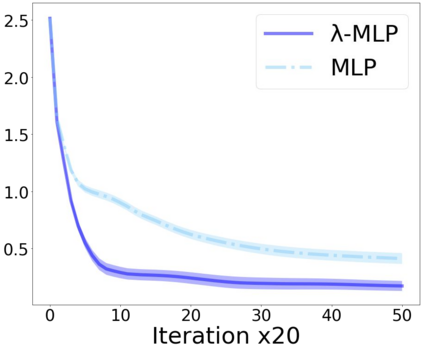

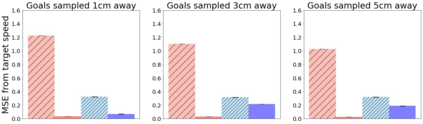

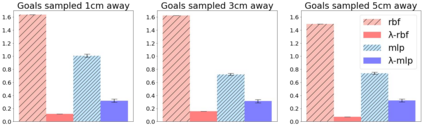

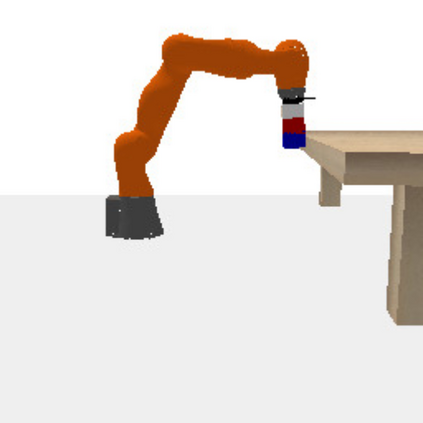

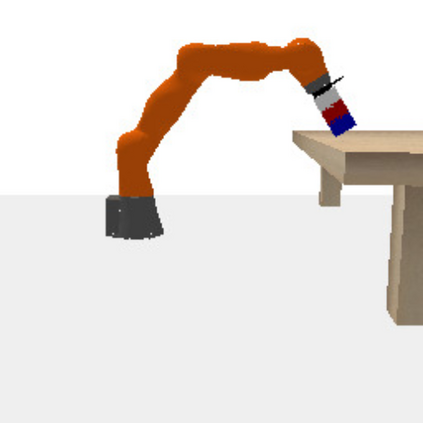

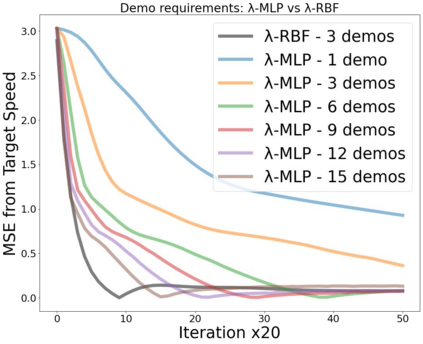

Inverse reinforcement learning is a paradigm motivated by the goal of learning general reward functions from demonstrated behaviours. Yet the generality of learnt costs is often evaluated only in terms of robustness to spatial perturbations, under the assumption that deployment happens at a fixed speed of execution. This assumption is impractical in robotics, where building time-invariant solutions is of crucial importance. In this work, we propose a formulation that allows us to 1) vary the length of execution by learning time-invariant costs, and 2) relax the temporal alignment requirements for learning from demonstration. We apply our method to two different types of cost formulations and evaluate their performance in the context of learning reward functions for simulated placement and peg-in-hole tasks. Our results show that our approach enables learning temporally invariant rewards from misaligned demonstrations that can also generalise spatially to out-of-distribution tasks.
