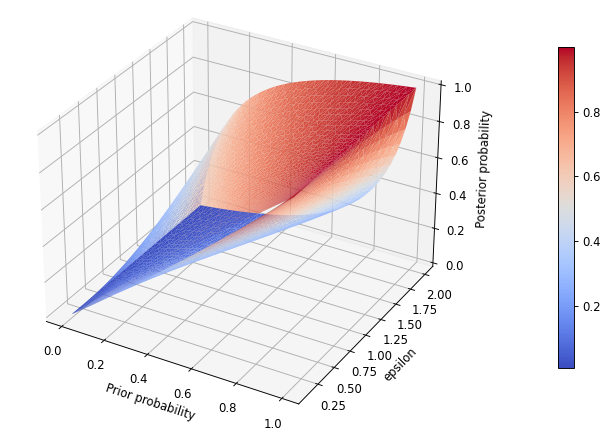

Differential Privacy (DP) is a formal definition of privacy that provides rigorous guarantees against the risk of privacy breaches during data processing. It makes no assumptions about the knowledge or computational power of adversaries, and provides an interpretable, quantifiable and composable formalism. DP has been actively researched for the last 15 years, but it remains hard to master for many Machine Learning (ML) practitioners. This paper aims to provide an overview of the most important ideas, concepts and uses of DP in ML, with special focus on its intersection with Federated Learning (FL).
