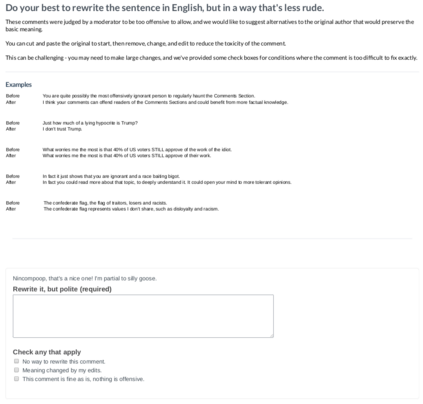

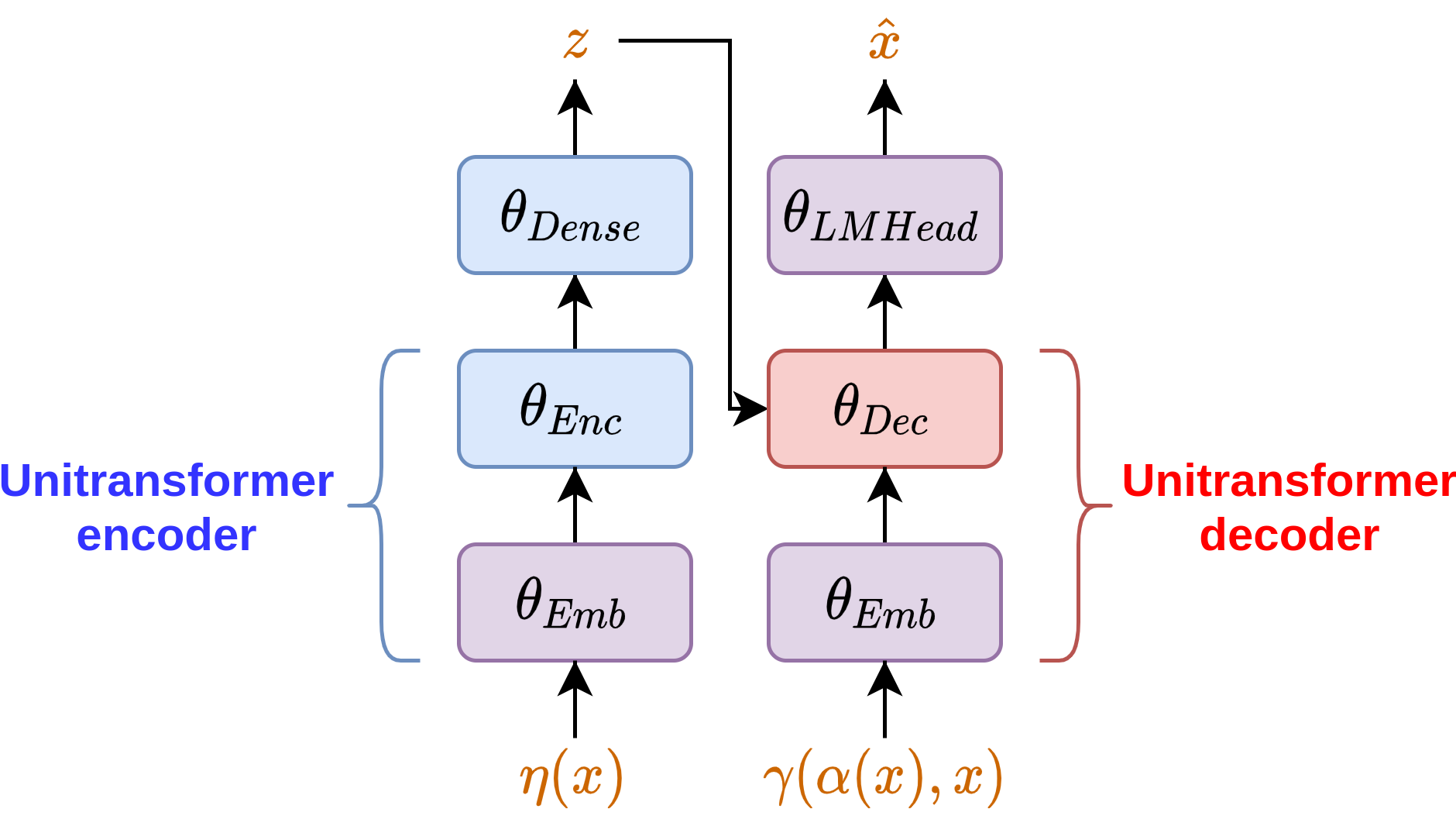

Platforms that support online commentary, from social networks to news sites, increasingly use machine learning to assist their moderation efforts. This process, however, typically gives authors no feedback that would help them contribute in line with community guidelines. Providing such feedback is prohibitively time-consuming for human moderators, and computational approaches are still nascent. This work focuses on models that can suggest more civil rephrasings of toxic comments. Inspired by recent progress in unpaired sequence-to-sequence tasks, we introduce a self-supervised learning model called CAE-T5. CAE-T5 employs a pre-trained text-to-text transformer, which is fine-tuned with a denoising and cyclic auto-encoder loss. In experiments on the largest toxicity detection dataset to date (Civil Comments), our model generates sentences that are more fluent and better at preserving the initial content than those of earlier text style transfer systems, as measured by several scoring systems and human evaluation.
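The abstract names a denoising and cyclic auto-encoder loss but gives no formulas, so the following is only a toy sketch of how two such terms could be combined. Everything in it is an assumption made for illustration: the `Model` interface, the masking noise in `corrupt`, the `mismatch_rate` stand-in for a real reconstruction loss such as cross-entropy, the `cycle_weight` parameter, and the `identity_model` placeholder. It is not the CAE-T5 implementation.

```python
import random
from typing import Callable, List

Tokens = List[str]
# Hypothetical model interface: (input tokens, target style) -> output tokens.
Model = Callable[[Tokens, str], Tokens]


def corrupt(tokens: Tokens, mask_prob: float, rng: random.Random,
            mask_token: str = "<mask>") -> Tokens:
    """Assumed noise function: replace each token with a mask with probability mask_prob."""
    return [mask_token if rng.random() < mask_prob else tok for tok in tokens]


def mismatch_rate(predicted: Tokens, target: Tokens) -> float:
    """Toy reconstruction loss: fraction of positions where the sequences differ."""
    length = max(len(predicted), len(target))
    if length == 0:
        return 0.0
    matches = sum(p == t for p, t in zip(predicted, target))
    return 1.0 - matches / length


def combined_loss(model: Model, tokens: Tokens, style: str, other_style: str,
                  rng: random.Random, mask_prob: float = 0.15,
                  cycle_weight: float = 1.0) -> float:
    """Sum a denoising term and a weighted cycle-consistency term."""
    # Denoising term: reconstruct the original from a corrupted copy, keeping the style.
    denoising = mismatch_rate(model(corrupt(tokens, mask_prob, rng), style), tokens)
    # Cycle term: transfer to the other style, transfer back, compare with the original.
    transferred = model(tokens, other_style)
    cycle = mismatch_rate(model(transferred, style), tokens)
    return denoising + cycle_weight * cycle


def identity_model(tokens: Tokens, style: str) -> Tokens:
    """Placeholder model that ignores the style and copies its input."""
    return list(tokens)


sentence = "this is a toy comment".split()
rng = random.Random(0)
loss_without_noise = combined_loss(identity_model, sentence, "civil", "toxic", rng, mask_prob=0.0)
loss_fully_masked = combined_loss(identity_model, sentence, "civil", "toxic", rng, mask_prob=1.0)
```

With the copying placeholder, the cycle term is always zero and the denoising term equals the fraction of masked tokens; a trained model would instead be pushed to restore masked tokens and to survive the round trip between styles without losing content.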
