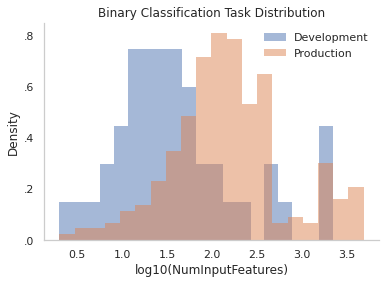

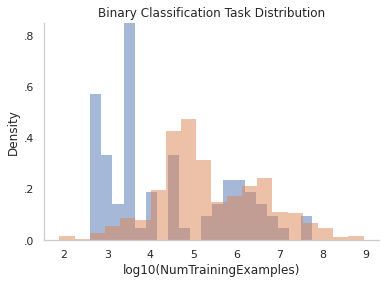

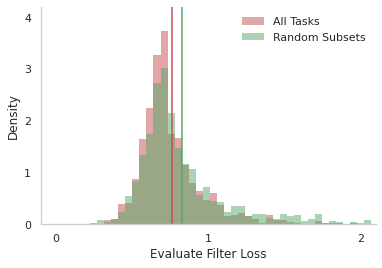

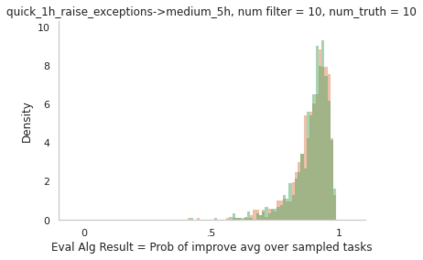

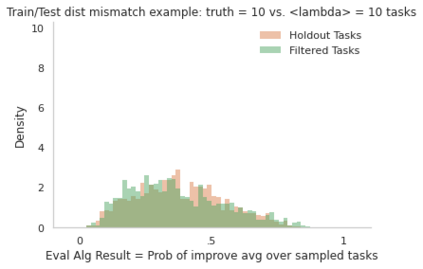

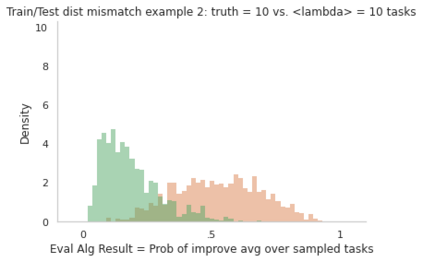

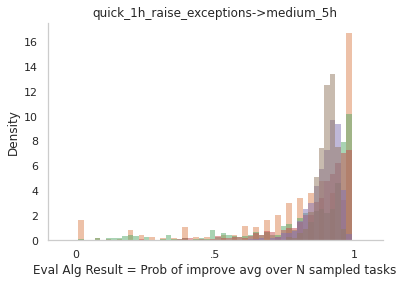

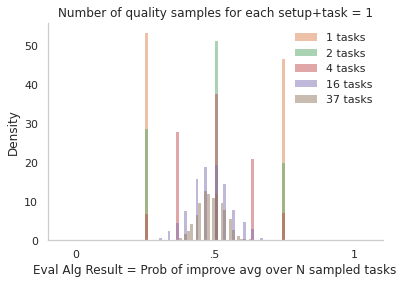

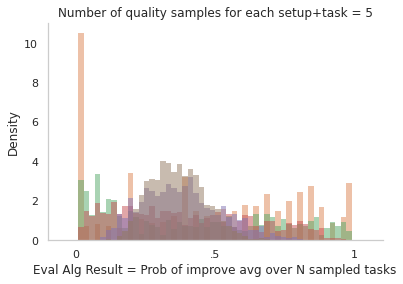

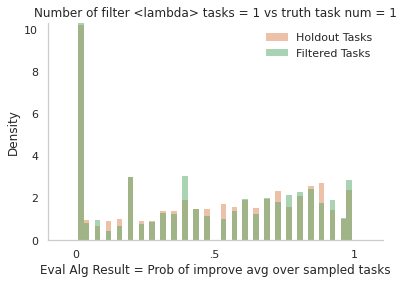

Our goal is to assess whether changes to an AutoML system (i.e., to the search space or the hyperparameter optimization procedure) will improve the final model's performance on production tasks. However, we cannot test these changes on production tasks. Instead, we only have access to limited descriptors of tasks that our AutoML system previously executed, such as the number of data points or features. We also have a set of development tasks on which to test changes, e.g., tasks sampled from OpenML with no usage constraints. The development and production task distributions differ, however, which can lead us to pursue changes that improve performance on development tasks but not on production tasks. This paper proposes a method that leverages descriptor information about AutoML production tasks to select a filtered subset of the most relevant development tasks. Empirical studies show that our filtering strategy improves the ability to assess AutoML system changes on holdout tasks whose distribution differs from that of the development tasks.
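The abstract does not say how relevance between a production task and a development task is measured. As a minimal illustrative sketch only, assume each task is described by just two descriptors (number of data points, number of features) and that relevance is a nearest-neighbor rule in log10 space, keeping the union of each production task's `k` closest development tasks. This rule, the task names, and the functions `distance` and `filter_dev_tasks` are hypothetical choices for illustration, not the paper's method.

```python
import math
from typing import Dict, List, Set, Tuple

# Hypothetical task descriptor: (number of data points, number of features).
# Both counts are assumed to be positive so that log10 is defined.
Descriptor = Tuple[int, int]


def log_features(d: Descriptor) -> Tuple[float, float]:
    """Map raw counts to log10 scale so sizes are compared by ratio, not difference."""
    return (math.log10(d[0]), math.log10(d[1]))


def distance(a: Descriptor, b: Descriptor) -> float:
    """Euclidean distance between two descriptors in log10 space."""
    (a0, a1), (b0, b1) = log_features(a), log_features(b)
    return math.hypot(a0 - b0, a1 - b1)


def filter_dev_tasks(
    production: List[Descriptor],
    development: Dict[str, Descriptor],
    k: int = 1,
) -> List[str]:
    """Return the union of each production task's k nearest development tasks."""
    selected: Set[str] = set()
    for prod in production:
        # Rank all development tasks by closeness to this production task.
        ranked = sorted(development, key=lambda name: distance(prod, development[name]))
        selected.update(ranked[:k])
    return sorted(selected)


# Usage with made-up descriptors (illustrative values, not data from the paper).
dev = {
    "small_narrow": (500, 10),
    "medium_wide": (20_000, 300),
    "large_narrow": (1_000_000, 20),
}
prod = [(800, 12), (900_000, 25)]
print(filter_dev_tasks(prod, dev))  # ['large_narrow', 'small_narrow']
```

Under this assumed rule, the mid-sized, wide development task is dropped because no production task resembles it, so AutoML system changes would be compared only on the two remaining development tasks.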

翻译:我们的目标是评估自动ML系统的变化(即搜索空间或超参数优化)是否会改进最后模型在生产任务方面的性能。 但是,我们不能测试生产任务的变化。 相反,我们只能对我们的自动ML系统以前执行的任务,例如数据点或特征的数量,有有限的描述符。 我们还有一套开发任务来测试变化,例如,从 OpenML 中抽样,没有使用限制。然而,开发和生产任务的分配不同,导致我们追求只能改进开发而不是生产的变化。本文建议了一种方法,利用自动ML生产任务的说明性信息来选择最相关的发展任务的筛选子。经验性研究表明,我们的过滤战略提高了评估在不执行比开发不同的分配任务方面进行不执行的自动ML系统变化的能力。