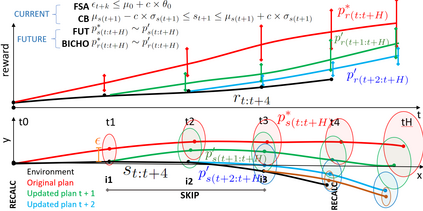

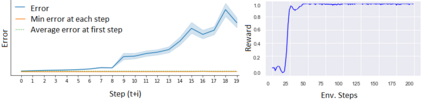

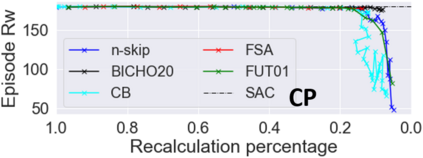

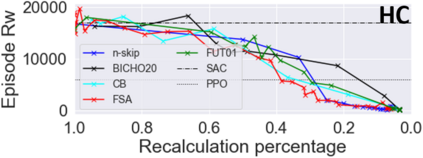

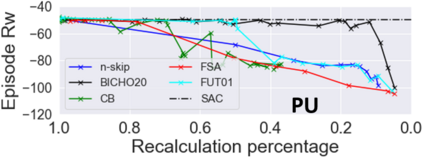

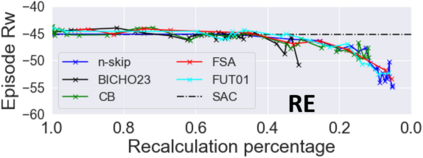

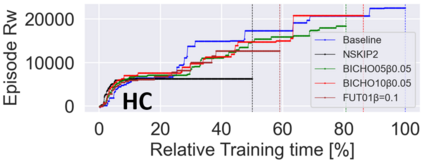

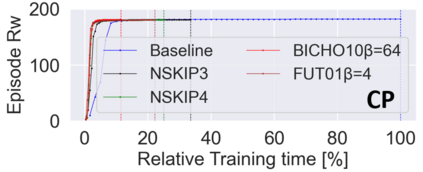

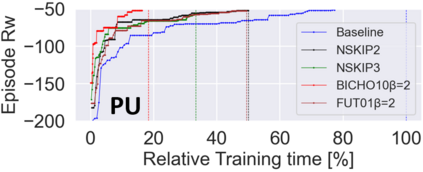

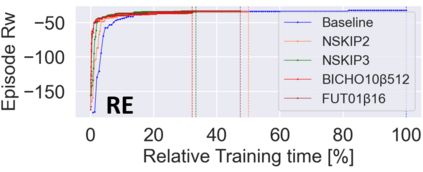

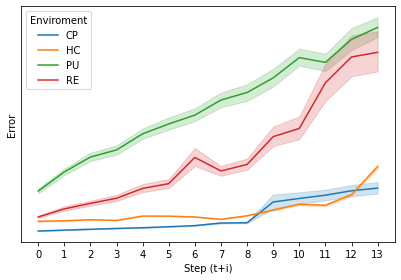

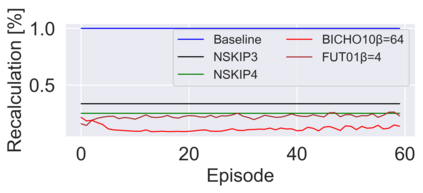

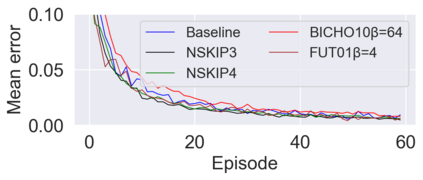

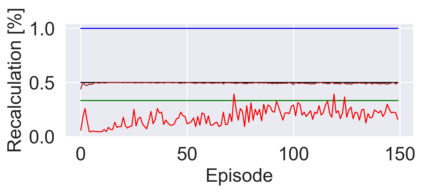

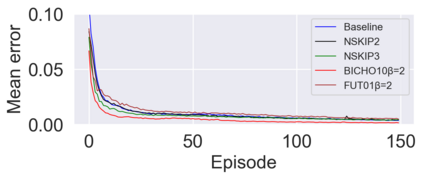

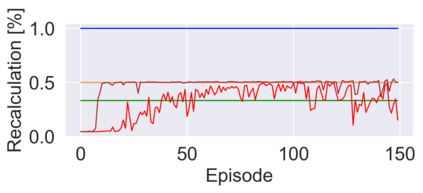

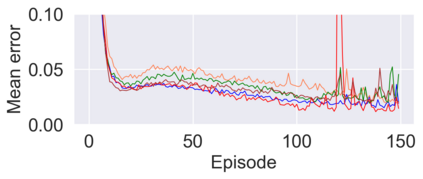

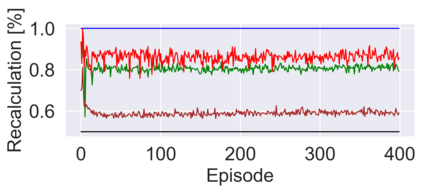

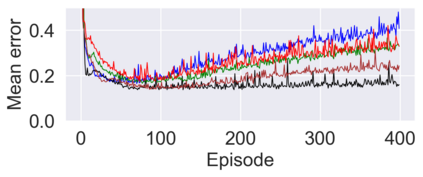

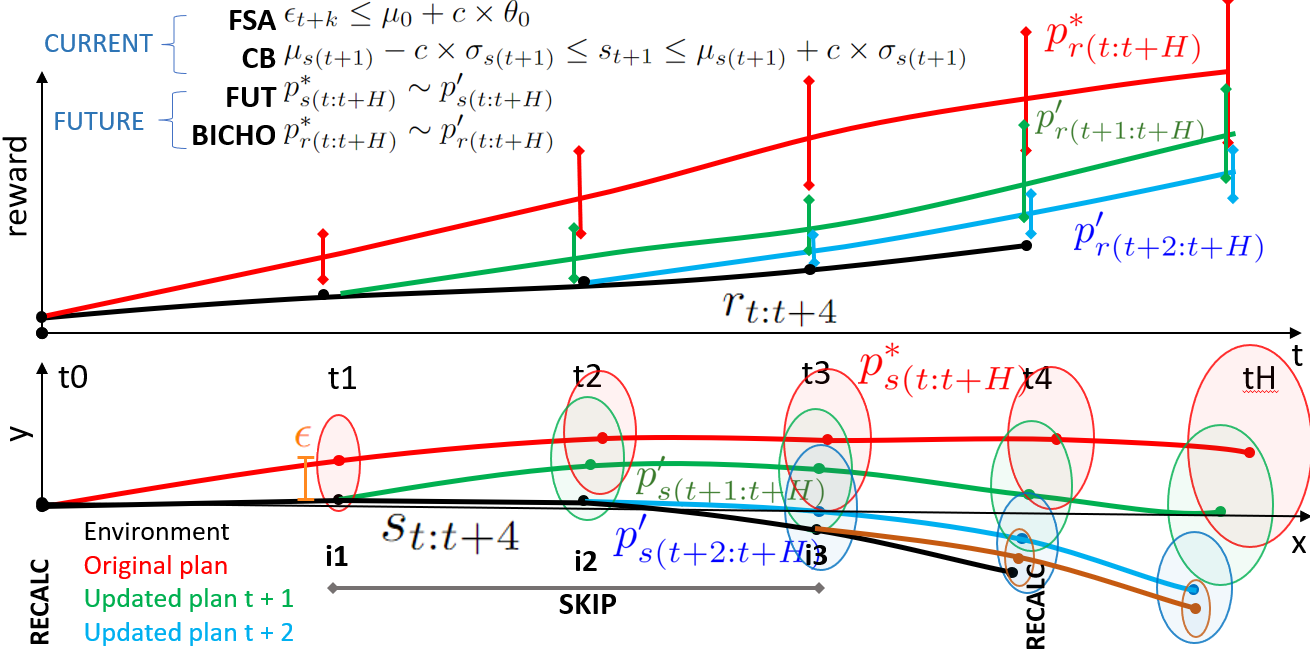

Model based reinforcement learning (MBRL) uses an imperfect model of the world to imagine trajectories of future states and plan the best actions that maximize a given reward. These trajectories are imperfect and MBRL attempts to overcome this by relying on model predictive control (MPC) to continuously re-imagine trajectories from scratch. Such re-generation of imagined trajectories carries the major computational cost and increasing complexity in tasks with longer receding horizon. We investigate how far in the future the imagined trajectories can be relied upon while still maintaining acceptable reward. After taking each action, information becomes available about its immediate effect and its impact on outcomes expected of future actions. Hereby, we propose four methods for deciding whether to trust and act upon imagined trajectories: i) looking at recent errors with respect to expectations, ii) comparing the confidence in an action imagined against its execution, iii) observing the deviation in projected future states iv) observing the deviation in projected future rewards. An experiment analyzing the effects of acting upon imagination shows that our methods reduce computation by at least 20\% and up to 80\%, depending on the environment, while retaining acceptable reward.

翻译:以模型为基础的强化学习(MBRL)使用一种不完善的世界模型模型,以想象未来国家的轨迹,并规划最佳行动,以最大限度地提高给定的奖励。这些轨迹是不完善的,而MBRL试图通过依靠模型预测控制(MPC)从零开始不断重新想象轨迹来克服这一点。这种想象的轨迹的再生成将带来重大的计算成本,并且随着时间的延长而使任务更加复杂。我们调查未来在保持可接受的奖励的同时,能够依赖想象的轨迹有多远。每次行动之后,都会获得关于其直接影响及其对未来行动预期结果的影响的信息。我们提出了决定是否信任和对想象轨迹采取行动的四种方法:一) 审视有关期望的最近错误,二) 比较所想象的行动与执行之间的信心,三) 观察预测未来状态的偏差,四) 观察预测未来奖励的偏差。分析想象力的效果的实验表明,我们的方法将减少至少20°C和80°C的计算,同时保留环境。